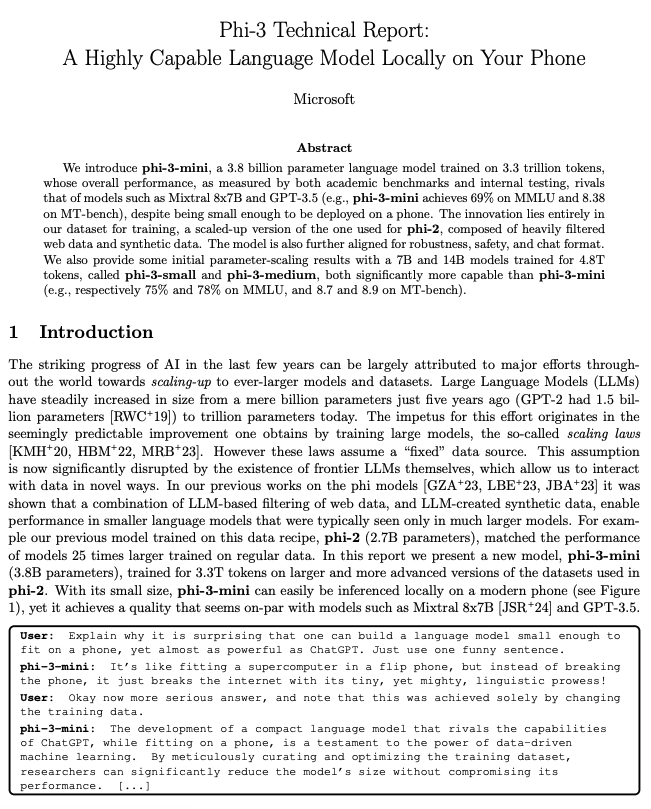

Boris Power

@BorisMPower

Followers: 34K

Following: 10K

Media: 176

Statuses: 2K

Head of Applied Research @OpenAI

San Francisco, CA

Joined July 2017

Some more napkin math - size of the Internet is ~10^11 pages of text*, this would cost (only?) $50M to embed. Who wants to take on Google?

We just released new embeddings at a ridiculously low price! For $40 one can embed all Joe Rogan podcast's transcripts (100k pages of text!) - who will go viral by building a semantic search for it? This is better, and 150x cheaper than previous models!

46

215

2K
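
The napkin math in the two posts above can be checked in a few lines of Python. This is a minimal sketch: it assumes embedding cost scales linearly with page count and takes the quoted figures ($40 for 100k pages, ~10^11 pages for the Internet) at face value. The constant and function names are hypothetical, chosen only for illustration.

```python
# Figures quoted in the posts above, treated as rough estimates.
QUOTED_COST_USD = 40        # quoted cost to embed the podcast transcripts
QUOTED_PAGES = 100_000      # quoted size of those transcripts, in pages
INTERNET_PAGES = 10**11     # quoted rough size of the Internet, in pages


def embedding_cost_usd(pages: int) -> float:
    """Scale the quoted $40 per 100k pages linearly to `pages` pages."""
    return pages * QUOTED_COST_USD / QUOTED_PAGES


internet_cost = embedding_cost_usd(INTERNET_PAGES)
print(f"Estimated cost to embed the Internet: ${internet_cost / 1e6:.0f}M")
```

With these inputs the estimate comes out at about $40M, the same order of magnitude as the ~$50M quoted in the post.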

I’m on a research visa too that I will lose if I resign. These are details - onwards with the mission! 🚀

I am on an H-1B, in the process of getting my green card and relocating my family to the US. Me and many other colleagues in a similar situation have signed this letter. I do not know what will happen next, but I am confident we will be taken care of. The board should resign.

37

59

1K

@Dr_Singularity I’m sorry we failed you and thanks for the patience - hopefully we rectify this soon and make the subscription way more valuable.

113

16

695

Completely wild that the same capability underlying model (and fine tuning) was available through the API for 8 months prior and nobody jumped on the opportunity to do this, so we had to build it ourselves. What’s the next UX innovation waiting to happen in this space?

This is insane. This is what I’ve been alluding to for months now. This is an epochal transformative technology that will soon touch - and radically transform - ALL knowledge work. If most of your work involves sitting in front of a computer, you will be disrupted very, very

44

56

524

@vkhosla @realDonaldTrump You read the wrong stat. Unemployment rate in Argentina is around 7%. The article refers to “poverty rate”, which is a very different measure.

1

5

489

Today we released #dalle 2 - a model which can generate incredibly impressive images based on a textual description! "A cobra, surfing on a big wave". (Feel free to drop suggestions in the thread - I'll generate and share if they are fun!)

128

47

406

I got fooled by the Reflection 70B announcement. tl;dr - the model performs very badly.

A story about fraud in the AI research community: On September 5th, Matt Shumer, CEO of OthersideAI, announces to the world that they've made a breakthrough, allowing them to train a mid-size model to top-tier levels of performance. This is huge. If it's real. It isn't.

18

24

413

I can’t even imagine what your next thing could possibly be but I trust it’ll make OpenAI just a footnote!

i loved my time at openai. it was transformative for me personally, and hopefully the world a little bit. most of all i loved working with such talented people. will have more to say about what’s next later. 🫡

5

11

358

@paulg Keep in mind that 3 is not even a majority of the starting 6 from when this started less than a week ago.

7

8

354

First time proper RL over the space of language! This brings up memories of early days of computers getting better at Go via self play.

We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond. These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math.

4

14

351

Amazing investigative work! 👏 😆

13

16

337

@Benioff @salesforce @salesforcejobs Lol, like it was ever about compensation. We got >95% in less than 24 hours, and the compensation never crossed my mind! Can you give us 700 of our amazing colleagues, then we may budge 🚀

8

7

320

Good luck - humanity will benefit immeasurably if you succeed!

Superintelligence is within reach. Building safe superintelligence (SSI) is the most important technical problem of our time. We've started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence. It’s called Safe Superintelligence.

7

6

319

Congrats on the "low key research preview" of computer use! ;)

Introducing an upgraded Claude 3.5 Sonnet, and a new model, Claude 3.5 Haiku. We’re also introducing a new capability in beta: computer use. Developers can now direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking, and typing text.

9

13

315

@apples_jimmy First time an LLM provider increases prices for a released model. Interesting and unusual.

15

4

306

@rishabh16_ Our models are available to anyone. Who will build the next gen search tools and commercialise them effectively?

17

19

273

OpenAI was able to strongly relax our policies by:
1. aligning our models to be great at following instructions
2. releasing a moderation endpoint, to help developers

Almost anything net-good is allowed now!

The reason we’re seeing a resurgence in the GPT-3 apps is because OpenAI took the training wheels off. No more restrictions around long-form writing or social posting using their api *and* you don’t need to be manually approved. You can pretty much do anything now.

10

34

250

Long awaited, truly delightful voice experience is finally available!

Advanced Voice is rolling out to all Plus and Team users in the ChatGPT app over the course of the week. While you’ve been patiently waiting, we’ve added Custom Instructions, Memory, five new voices, and improved accents. It can also say “Sorry I’m late” in over 50 languages.

22

4

251

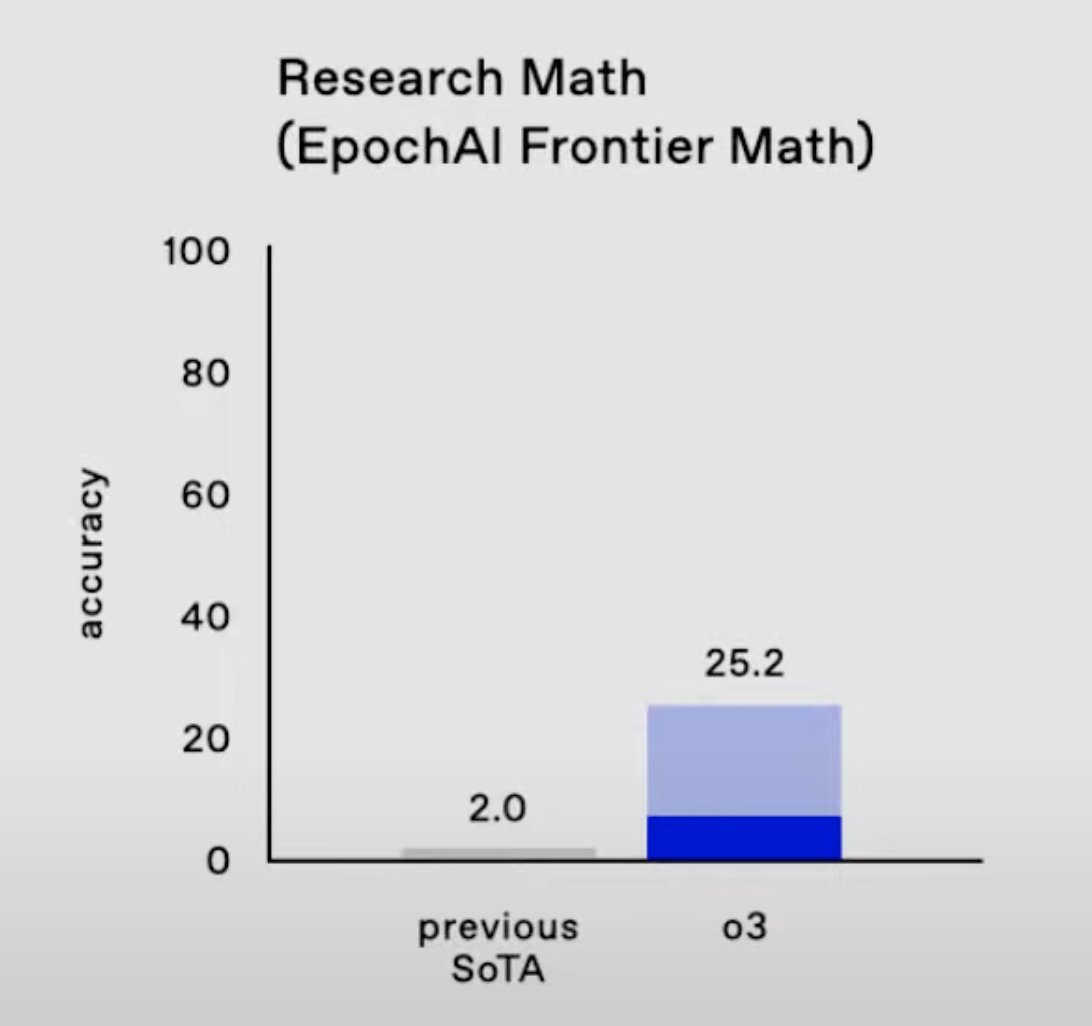

An insider’s view attempting to explain to untrained mathematicians how hard the FrontierMath dataset is. Average human performance given unlimited time is approximately 0%.

1/11 I’m genuinely impressed by OpenAI’s 25.2% Pass@1 performance on FrontierMath—this marks a major leap from prior results and arrives about a year ahead of my median expectations.

13

25

248

It only gets better from here!

🤯🤯🤯I'm shocked by the results from OpenAI's o1 model on THIS YEAR's Korean SAT exam. It got only *ONE* question wrong, placing it within the Top 4% of students. This exam was crafted by professors who were locked up in a hotel for a month, making it an unseen test set for all

12

17

236

the most beautiful scaling plot in the past 5 years.

@OpenAI o1 is trained with RL to “think” before responding via a private chain of thought. The longer it thinks, the better it does on reasoning tasks. This opens up a new dimension for scaling. We’re no longer bottlenecked by pretraining. We can now scale inference compute too.

3

14

226

The fastest growing open source repo in OpenAI history, almost entirely built by Isabella in a few weeks!

🌟 Our ChatGPT Retrieval Plugin is #1 trending on GitHub! Improve your ChatGPT experience by accessing relevant information from your personal or organizational documents using OpenAI's embeddings models and vector databases. Here's a quick overview:

2

16

222

@aidan_mclau The reason chess engines can do it is they are essentially a hardcoded agent that keeps applying simple functions. Similar to a human - given a pen and paper we can think for a lot longer about one problem. The exciting thing about o1 is that it’s reliable enough for agents.

10

10

217

I feel sorry for the original voice actor who has to go through and withstand this drama - it doesn’t seem fair to have your own voice questioned like this. 💜

The Sky voice was available since September of last year. It has been used by millions of people in ChatGPT without an issue. The controversy began when it all of a sudden had more personality and inflection because of GPT-4o. It could have sounded like a Kardashian and people.

14

16

212

@alexandr_wang I applaud this effort. But don’t call it last, otherwise we’ll get to FINAL_FINAL_v5_latest.

2

1

205

It would be great if someone figured out how to make super hard evals an economically viable business. Every lab would pay 💰

3/10 We evaluated six leading models, including Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro. Even with extended thinking time (10,000 tokens), Python access, and the ability to run experiments, success rates remained below 2%—compared to over 90% on traditional benchmarks.

18

7

196

Evals are difficult to come by - here’s a good one we’ve used internally that’s not yet saturated!

Factuality is one of the biggest open problems in the deployment of artificial intelligence. We are open-sourcing a new benchmark called SimpleQA that measures the factuality of language models.

4

3

196

@pwang_szn @OpenAI @LeagueOfLegends Really cool! But the commentary isn’t very good. It’s a bit like chess commentary from gpt4. Lots of obvious statements and a few hallucinations. Missing the key aspects - two towers dropped on opposite sides and top lane had to vacate the tower due to dive potential.

5

0

188

@Dr_Singularity Are you sure? Maybe the world will be hyper deflationary, making your current money orders of magnitude more valuable in that future world.

17

4

193

Exactly. And is getting better at an impressive speed.

O1 is just godly good. I uploaded my blood test results and discussed with it all possible therapies. It’s literally the best doctor I ever had. Not only are the diagnoses and therapies on point but I also learn so much about my body and myself as o1 explains everything in.

8

6

188

Phi-3 can run on a phone, and is very competitive with gpt-3.5!

Phi-3 Technical Report. Microsoft presents a new 3.8B parameter language model called phi-3-mini. It's trained on 3.3 trillion tokens and is reported to rival Mixtral 8x7B and GPT-3.5. Has a default context length of 4K but also includes a version that is extended to 128K

17

18

175

@fchollet Regardless if we solved it or not - I’d love to figure out a way to incentivize the creation of much harder evals that truly stand unsolved for more than a few months, and are ideally economically valuable.

18

4

171

Can’t wait to see what you do when the voice + video streaming is implemented in our API!

We’ve just added GPT-4o to the Ai Bus — expect your Pin to be even better at understanding your requests. This is live on all Ai Pins — no action needed. The architecture of CosmOS enables us to quickly adopt the latest and greatest AI technologies.

10

6

171

Incredible, I was hoping Andrej releases something like this, but I really didn’t think it would be as comprehensive as this! Making latest innovations as accessible as this is just 🔥!

📽️ New 4 hour (lol) video lecture on YouTube: "Let’s reproduce GPT-2 (124M)". The video ended up so long because it is comprehensive: we start with empty file and end up with a GPT-2 (124M) model:
- first we build the GPT-2 network
- then we optimize

4

8

170