Alex Nichol

@unixpickle

Followers

9,406

Following

398

Media

970

Statuses

5,647

Code, AI, and 3D printing. Opinions are my own, not my computer's...for now. Husband of @thesamnichol. Co-creator of DALL-E 2. Researcher @openai.

Joined September 2011

Last Seen Profiles

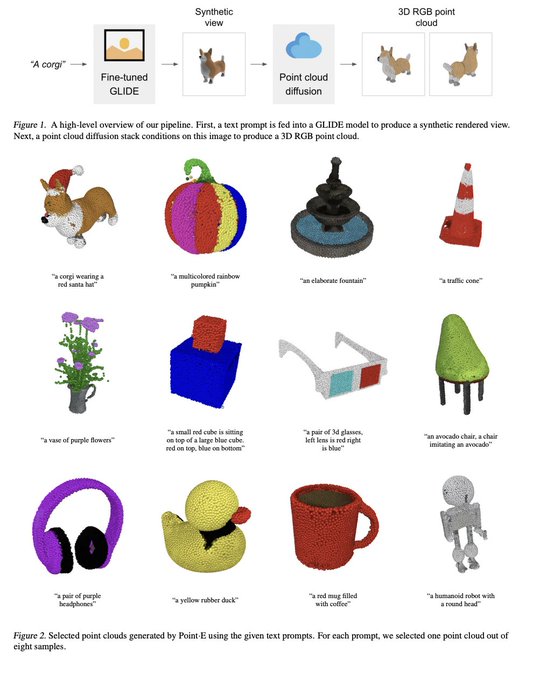

There is a new 3D generative model in town: Genie by @LumaLabsAI. This model is surprisingly good and insanely fast! As someone who has worked on 3D generative modeling, here are my observations and guesses about how this system works (without any insider info):

5

34

315

This is something I always wanted to investigate, but never had time to. It would seem that MAML (and presumably Reptile as well) learn useful features in the outer loop, rather than learning how to learn useful features in the inner loop.

Rapid Learning or Feature Reuse? Meta-learning algorithms on standard benchmarks have much more feature reuse than rapid learning! This also gives us a way to simplify MAML -- (Almost) No Inner Loop (A)NIL. With Aniruddh Raghu, @maithra_raghu, Samy Bengio.

9

163

603

1

13

100

@ak92501 This is ridiculous. None of these are novel contributions and they don't reference any of the many past works that apply diffusion to inpainting.

4

5

78

I just read this paper as someone familiar with RL but not so much with video compression. Some thoughts and questions in thread...

1

6

64

I investigated this in 2017 and even at the time it looked encouraging. Glad to see it make a comeback.

2

7

61

Speaking of consistent characters, how about becoming movie stars? Here, the model is able to depict me and @gabeeegoooh as detectives in a stunning movie poster. Note how our names and the movie title are rendered properly! 3/8

3

2

53

I have now learned that it's a fallacy to equate occupancy with efficiency! It's possible to fully utilize the hardware with low occupancy by leaning on instruction-level parallelism. @cHHillee pointed me to this very elucidating slide deck:

1

4

50

Amazing work! This is a very important moment for RL.

1

6

37

@marwanmattar Some of the best ML researchers I've ever met do not have PhDs, or any degree, for that matter. It's your choice as a company how you want to filter candidates, but there are likely better ways.

0

3

35

@rasbt Disclaimer: I was an author on that paper. Yes, diffusion models will really shine for most use cases. GANs might still be better for very narrow domains, but otherwise wouldn't be my first choice.

3

2

34