Shawn Presser

@theshawwn

Followers

8K

Following

15K

Media

3K

Statuses

14K

Looking for AI work. DMs open. ML discord: https://t.co/2J63isabrY projects: https://t.co/6XsuoK4lu0

Lake St Louis, MO

Joined January 2009

I’ve been staring at this chart for three minutes and can’t wrap my head around it. How did openai scale chatgpt so well? The fact that they pulled it off should terrify google. Log10 scales break my brain. I grew up watching Netflix go from 0 to 100m. OpenAI did it in 3 months.

chatGPT: something different is happening. Number of days to 1M and 100M users, vs. Instagram, Spotify, Facebook, Netflix, Twitter. #chatgpt #ai #openai #google @openai

44

39

415

Yet again, someone with 8 followers (@jonbtow) randomly shows up and points out something awesome (a badass way to plot neural networks). I'm amazed and fortunate that people share stuff like this!

@theshawwn Pretty similar.

5

42

315

Prediction: five years from now, there will be no signs of AGI being anywhere close to the horizon. The technology will keep getting more advanced, but it will remain a tool for humans rather than a species that is recognizably alive or that achieves superintelligence.

I don't really like talking about AGI timelines. The term "AGI" is vague and varies depending on the speaker. According to my best understanding of the term, I think we'll probably get AGI within 5 years, but I suspect the econ impacts will come later. This confuses people.

26

36

288

@kathrinepandell @angelt18 @redditships I like the phrasing here. It implies that the therapist is claiming personal responsibility for every boner in the world.

1

0

252

Trained a GPT-2 1.5B on 50MB of videogame walkthroughs. The results are *very* interesting. Someone could parse this and generate game levels, or mechanics. Thanks to @me_irl for the dataset, and for giving permission to release everything publicly. I'll post instructions soon.

10

55

253

I discovered long ago that gwern ruthlessly purges bad ML ideas. He’s rarely wrong. If an idea has the slightest flaw, it won’t make it past him. Here’s one he didn’t reject. If you’re looking for a promising idea about GPTs, consider snagging this one before someone else does.

@YebHavinga @JagersbergKnut @robertghilduta I was thinking, the model could output a token and a confidence. The confidence levels are stored, just like the tokens are. When you reach the max context size, go back to the first uncertain token and regenerate, but this time it can see the rest of the tokens. Repeat to taste.
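The idea in the reply above can be sketched in a few lines. This is a minimal illustration, not anyone's actual implementation: the `predict(left, right)` interface, the `threshold`, and the `max_passes` cap are all assumptions made up for the example, and `toy_predict` is a stand-in for a real model.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical model interface (an assumption for this sketch): given the
# tokens to the left and to the right of a position, return (token, confidence).
Predict = Callable[[Sequence[str], Sequence[str]], Tuple[str, float]]


def generate_then_revise(
    predict: Predict,
    max_len: int,
    threshold: float = 0.5,
    max_passes: int = 10,
) -> Tuple[List[str], List[float]]:
    tokens: List[str] = []
    confs: List[float] = []

    # Pass 1: ordinary left-to-right generation, storing each confidence.
    while len(tokens) < max_len:
        tok, conf = predict(tokens, [])
        tokens.append(tok)
        confs.append(conf)

    # Revision passes: go back to the first uncertain token and regenerate it,
    # this time letting the model see the tokens on both sides.
    for _ in range(max_passes):
        idx = next((i for i, c in enumerate(confs) if c < threshold), None)
        if idx is None:
            break  # nothing is uncertain any more
        tok, conf = predict(tokens[:idx], tokens[idx + 1:])
        tokens[idx], confs[idx] = tok, conf

    return tokens, confs


def toy_predict(left: Sequence[str], right: Sequence[str]) -> Tuple[str, float]:
    """Stand-in model: unsure about position 2 until it can see right context."""
    if len(left) == 2 and not right:
        return "?", 0.1
    if right:
        return "b", 0.95
    return "a", 0.9


tokens, confs = generate_then_revise(toy_predict, max_len=4)
```

The `max_passes` cap is there because a token that stays below the threshold would otherwise be regenerated forever; "repeat to taste" is left to the caller.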

17

12

197

We've been building the largest corpus of freely available training text ever released, called The Pile. @nabla_theta has been doing an incredible amount of work on it. Mainly I like how fun it is. You can join and contribute!

11

35

173

@LilliVaroux @forte_bass I noticed that too. He calculated $0.01 per install when Unity charges $0.20. He also got the floor wrong by saying no charge till $1M rev; Unity charges at $200k rev.

4

1

144

Holy crap. This is the first time that I've seen one of my discoveries in print, almost word for word. (Independent discovery.) Side by side: Lion vs. mine, from ~days ago. I almost posted a big twitter thread about it, but wanted to do more tests.

Symbolic Discovery of Optimization Algorithms. Discovers a simple and effective optimization algorithm, Lion, which is more memory-efficient than Adam.
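For readers who have not seen it, the Lion update from that paper is short enough to write out. The sketch below uses plain Python lists rather than any framework; the function and argument names are choices made for this example, and the default betas (0.9, 0.99) follow the paper. It keeps a single momentum buffer per parameter, which is where the memory saving over Adam (two buffers) comes from.

```python
from typing import List, Tuple


def sign(x: float) -> float:
    """Return -1.0, 0.0, or 1.0 according to the sign of x."""
    return float((x > 0) - (x < 0))


def lion_step(
    params: List[float],
    grads: List[float],
    momentum: List[float],
    lr: float = 1e-4,
    beta1: float = 0.9,
    beta2: float = 0.99,
    weight_decay: float = 0.0,
) -> Tuple[List[float], List[float]]:
    new_params: List[float] = []
    new_momentum: List[float] = []
    for p, g, m in zip(params, grads, momentum):
        # Update direction: sign of an interpolation of momentum and gradient.
        direction = sign(beta1 * m + (1.0 - beta1) * g)
        # Every coordinate moves by exactly lr (plus decoupled weight decay).
        new_params.append(p - lr * (direction + weight_decay * p))
        # The momentum buffer is updated with a second, slower interpolation.
        new_momentum.append(beta2 * m + (1.0 - beta2) * g)
    return new_params, new_momentum


new_p, new_m = lion_step([1.0, -2.0], [0.5, -0.25], [0.0, 0.0], lr=0.1)
```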

5

8

145

@YTSunnys @GilvaSunner In fairness, it's Nintendo's music. From it seems like he just uploads their music without transforming it. That's clear-cut infringement of business IP. Some of them don't mind. Others do.

28

1

137

Success: My first published paper! 🎉 I wrote zero words of it, but I made one of the core datasets so my name is on it. Also apparently I'm notorious enough to be mentioned explicitly here: "by Leo Gao et al. including @theshawwn", ha.

The Pile: An 800GB Dataset of Diverse Text for Language Modeling, by Leo Gao et al. including @theshawwn. #Computation #Language

13

7

143

Ahem. ATTENTION ML TWITTER: *grabs megaphone*. The Russians trained their own DALL-E (ruDALL-E 1.3B), and it looks amazing. Googling "ruDALL-E" brings up nothing whatsoever. Apparently @l4rz is the only one who noticed it was released a few hours ago!

5

17

113

I made a library for visualizing tensors in a plain python repl:
pip3 install -U sparkvis
from sparkvis import sparkvis as vis
vis(foo)
"foo" can be a torch tensor, tf tensor, numpy array, etc. vis(a, b) will put 'a' and 'b' side by side. I like it. Here's the FFT of MNIST:

This is the stupidest hack I've ever come up with, and it works great. My local pytorch isn't working (thanks, M1 laptop) so I had to do my REPL work on a server. I didn't want to install jupyter, because lazy. But I needed to visualize a tensor. Solution: sparklines!
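The sparkline trick itself is easy to reproduce. The snippet below is not sparkvis's code, just a minimal sketch of the general technique under the assumption that values are min-max scaled onto the eight Unicode block characters; `sparkline` is a name made up for this example.

```python
from typing import Iterable

BARS = "▁▂▃▄▅▆▇█"  # eight block heights, lowest to highest


def sparkline(values: Iterable[float]) -> str:
    """Render a 1-D sequence of numbers as a row of block characters."""
    vals = [float(v) for v in values]
    if not vals:
        return ""
    lo, hi = min(vals), max(vals)
    span = hi - lo
    if span == 0:
        # A constant sequence has no shape to show; draw a flat line.
        return BARS[0] * len(vals)
    out = []
    for v in vals:
        # Scale into [0, 8) and clamp the maximum into the last bucket.
        bucket = min(int((v - lo) / span * len(BARS)), len(BARS) - 1)
        out.append(BARS[bucket])
    return "".join(out)


ramp = sparkline(range(8))
flat = sparkline([3, 3, 3])
```

A 2-D tensor can be shown the same way by printing one sparkline per row, ideally with a shared min and max so the rows are comparable.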

4

12

111

I used GPT-4 to draft a DMCA counterclaim for Does anyone know any lawyers that could weigh in on whether this looks reasonable to submit? (Happy to pay for counsel, too; we're funded. But I'm not sure who to contact.)

An anonymous donor named L has pledged $200k for llama-dl’s legal defense against Meta. I intend to initiate a DMCA counterclaim on the basis that neural network weights are not copyrightable. It may seem obvious that NN weights should be subject to copyright. It’s anything but:

13

10

96

@EGirlMonetarism How do you make videos like this? I'd like to present some AI arguments in similar form.

6

1

77

You don’t need interconnected A100s. Just average the parameters periodically. Think of it this way. Gradients are small. That means if a model is trained without interconnect, the copies can’t drift very far. So average them periodically and everything works out fine.

LLaMA was trained on 2,048 80GB A100s. Currently the most you can get interconnected is about 20. This is unlikely to change soon as demand ramps hard. Only a few entities have capacity to train these models let alone compute for R&D.
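The "average the parameters periodically" claim can be illustrated with a toy problem. The sketch below is an assumption-laden example, not a training recipe: each worker holds a single scalar weight, minimizes its own quadratic loss 0.5 * (w - target)^2 with plain SGD, and every `sync_every` steps all workers are reset to the mean of their weights. The names `local_sgd`, `targets`, and `sync_every` are invented for the illustration.

```python
from typing import List


def local_sgd(
    targets: List[float],
    steps: int = 200,
    sync_every: int = 10,
    lr: float = 0.1,
) -> List[float]:
    # One scalar weight per worker, all starting from the same point.
    workers = [0.0 for _ in targets]
    for step in range(1, steps + 1):
        # Independent local step: the gradient of 0.5 * (w - t)^2 is (w - t).
        workers = [w - lr * (w - t) for w, t in zip(workers, targets)]
        if step % sync_every == 0:
            # The only communication: replace every copy with the average.
            avg = sum(workers) / len(workers)
            workers = [avg for _ in workers]
    return workers


final = local_sgd([1.0, 2.0, 6.0])
```

On this toy problem the averaged weight converges to 3.0, the minimizer of the combined loss, even though the workers only talk once every ten steps. Real networks are not quadratic, so this shows the mechanism rather than proving the claim.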

10

6

86

This data was so striking that I made a graph. JAX accounts for 30% of all models uploaded to @huggingface hub. PyTorch: 63.2%, JAX: 29.6%, TensorFlow: 7.3%.

@Sopcaja @tunguz This is not necessarily representative data, but it is interesting to note that among the models in @huggingface hub, 1,186 were written in TF, where JAX was used in 4,830 models. PyTorch had 10,322 models, btw.
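The percentages in the graph follow directly from the three counts quoted in the reply. A quick check, using only those numbers:

```python
# Model counts as quoted in the reply above.
counts = {"PyTorch": 10322, "JAX": 4830, "TensorFlow": 1186}

total = sum(counts.values())

# Share of each framework, as a percentage rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

for name, pct in shares.items():
    print(f"{name}: {pct}%")
```

The rounded shares add up to 100.1% rather than 100% because each one is rounded independently.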

7

7

81

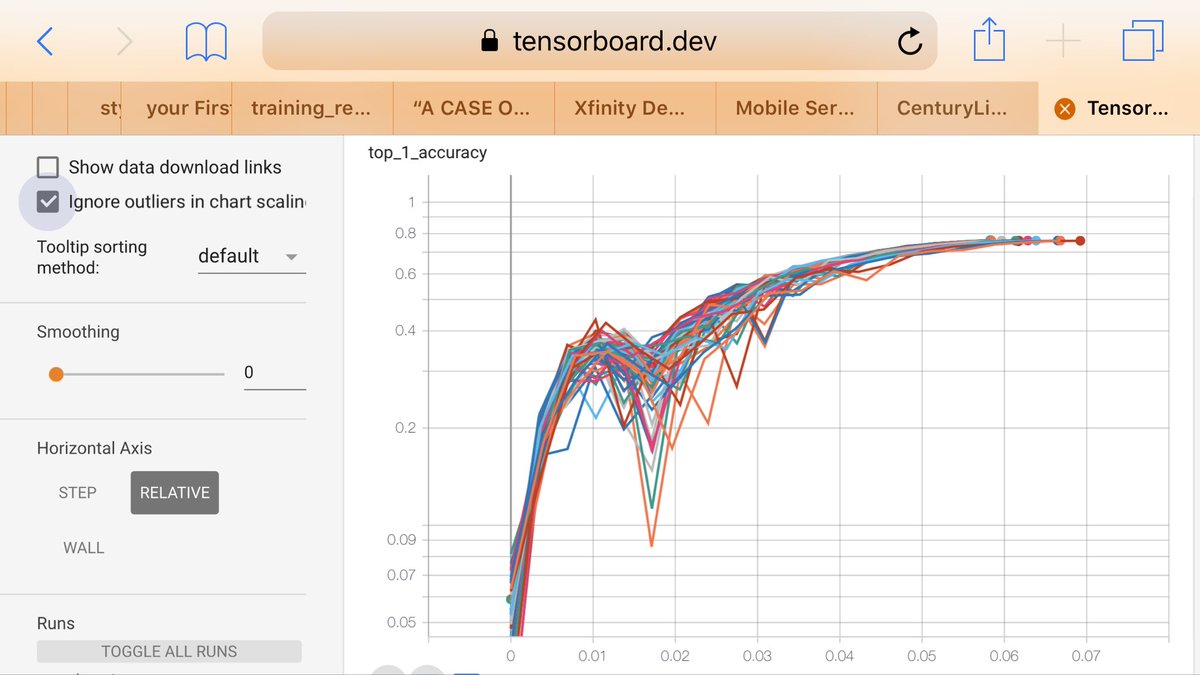

This is one of the stablest BigGAN runs I’ve seen. @gwern’s idea of “stop the generator if the discriminator is too confused” seems to work beautifully. It’s a cooperative GAN rather than competitive.
- D stops if D < 0.2
- G stops if G < 0.05 or D > 1.5
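The stopping rules above translate into a small gating function. This sketch assumes that "D" and "G" in the rules refer to the current discriminator and generator loss values, which the tweet does not state explicitly; `should_update` and its parameter names are made up for the example.

```python
from typing import Tuple


def should_update(
    d_loss: float,
    g_loss: float,
    d_floor: float = 0.2,
    g_floor: float = 0.05,
    d_ceiling: float = 1.5,
) -> Tuple[bool, bool]:
    """Return (train_discriminator, train_generator) for this step."""
    # D pauses once its loss is already very low (it is winning easily).
    train_d = d_loss >= d_floor
    # G pauses when its own loss is very low, or when D is too confused
    # (D's loss is high) to give a useful training signal.
    train_g = g_loss >= g_floor and d_loss <= d_ceiling
    return train_d, train_g


balanced = should_update(d_loss=0.5, g_loss=0.5)
d_confused = should_update(d_loss=2.0, g_loss=1.0)
```

In a training loop, each optimizer step would be skipped whenever its flag is False, so the two networks wait for each other instead of racing.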

6

13

78

Why optimizing ML is harder than it seems: I've been fortunate to learn a lot from @jekbradbury, and I wanted to pass along some of the lessons. Notes:
- simplicity wins
- the XLA optimizer wins
- don't worry (much) about reshapes
- sharding is key

2

20

78

Hi @ID_AA_Carmack! I once emailed you as a freshly-minted 18yo programmer who had just landed his first gamedev internship, asking you for advice on how to be impactful. Your reply was, essentially, that it matters very much how you use your time. Thank you for that. It was true.

1

1

79

One reason I never seriously pursued any full time ML job is that I’m a high school dropout. I don’t regret becoming a full time software professional at 17. It wasn’t a flaw. The reason I work to make ML popular is, partly, to get rid of this elitism. You can do ML. Today.

Research recruiter: We *love* your background. Tell us about your recent work.
Me: Explains years of published projects.
Recruiter: Sounds amazing. But when did you get your PhD?
Me: Don't have one.
Recruiter: lmfao smh nevermind want to work on product? How's your leetcode?

2

5

78