Deen Kun A.

@sir_deenicus

Followers: 1,713

Following: 958

Media: 980

Statuses: 20,353

Developer for Math Ed software co | Intelligence Amplification Tinkerer | What type of Dynamical Systems can be called Intelligent? | bboy hermit

Joined July 2009

@GrumpyTallBrit

@fivefifths

I read it. No facts in there. But lots of Hypotheses, Implications, conjectures and misunderstandings of research.

5

0

224

@GrumpyTallBrit

@fivefifths

Lack of Fact because the state of knowledge cannot tell us how we go from distributive differences over white matter connectivity to behavior

0

0

89

@finbarrtimbers

@ericjang11

Yep. Probably an improvement on Process supervision (given the grade school math performance comment)

5

8

119

@nearcyan

Most companies that are labeled AI startups these days and are building something flexible/flashy are building on top of the OpenAI API. This requires neither CUDA nor a Python environment.

2

0

74

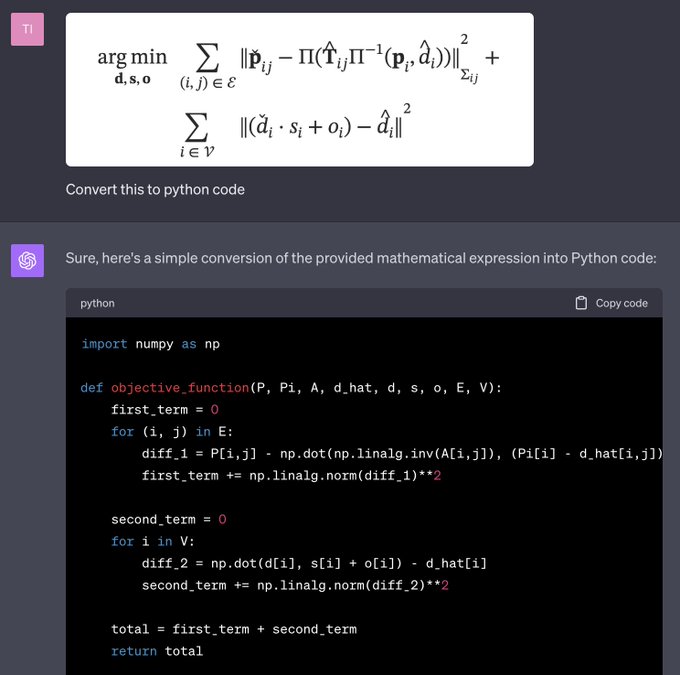

There are errors in this shot but somehow the post garnered thousands of interactions and almost no corrections. Those of us noting its errors were ignored

first_term section in particular contains major errors while full semantics of arg_min expression doesn't quite transfer to

6

6

68

@hillelogram

These are excellent examples of "Bat Deduction".

Although, the answers do feel creative

1

0

67

@mikemccaffrey

@SarahMackAttack

@corvidresearch

I don't think it thinks it's broken. It's the opposite, it's reasoning! It's clearly working out which direction to go in--you can see its head turning back and forth as it works out what to do

1

1

51

@JodyShenn

@MudMudJubJub

@EmmaHill42

@Jody_Bundrick

@ClassicDadMoves

@OwensDamien

Don't think random people're such good actors. His behaviors seemed genuine, as when people (any age) get a new & working sensory channel.

2

1

34

@migtissera

Dunno about result but the finetune is legit. There are def holes but they do not counter how outstanding it is. In fact, I am having trouble reconciling its output with the fact that it comes from a 7B model. Mind boggling.

3

0

41

@aaron_defazio

@davidchalmers42

I think it's actually Matt Mahoney and Jim Bowery who discussed it most clearly, back in 2005. Here, last updated 2009, Mahoney specifically states text compression as equivalent to General AI and discusses Language models:

3

5

33

@YosarianTwo

What does illustrative example mean? Did it actually happen or no? I guess OpenAI wouldn't be so discourteous to Task Rabbit Workers with that quote? Either way, very ambiguously written.

Also: ARC found that the versions of GPT-4 it evaluated were ineffective at the autonomous

0

0

35

Bing's GPT is "smarter" than ChatGPT. Hard to say by how much, as gains are uneven. It's better at reasoning but still confabulates too much to be proficient at reasoning -> moderate gains. It solves a puzzle by @fchollet that all GPTs I tried (including Codex and Chat) failed.

8

3

31

@aaron_defazio

@davidchalmers42

This is still the best theoretical starting point to understanding why LLMs work so well IMO.

Here is the thread that led to the Hutter prize:

2

4

28

@ID_AA_Carmack

Isn't clear to me that flops map meaningfully to brains. Rates of activity are slow, log-normally distributed. No global clock either--in fact, brains might harvest noise/enter into reversibility regime? Also, sparse & heterogenous such that some say CPUs are less bad analogy

1

1

27

@Grady_Booch

That post is...

I find it hard to believe it isn't calculated to maximize "engagement". It's...incomprehensible to me that anyone actually could believe such complete nonsense.

1

0

26

@DirectorCoul

@Luchozable

@MarkJMasterson

@LukeGoode

@YourHomeLoanNZ

You can get a somewhat objective measure of the difficulty of a task in terms of the Kolmogorov complexity of the automating program. We're much further along automating how to solve the physics a uni undergrad does than the kind of creative writing you'd expect from 6 yr olds

1

0

21

@DNAutics

@francoisfleuret

@aniervs

@zarhyas

The hard part is the subset of paths that are interesting to us. So the proof completed, is it something (likely) useless?

Something else to note is in games, only a few moves are valid but in math sequences are unbounded. There's no single unified model of math to fit a policy

1

1

26

@banburismus_

> What are the most elegant/beautiful ideas in ML?

IMO, the belief propagation algorithm.

Relatedly, though not quite an idea, is the observation that nearly all well-performing algorithms are linkable with Ising models & stat mech generally, in 1 or 2 steps.

4

0

24

@gdb

The essay is excellent in how coherent it stays but it doesn't really say anything. It represents amazing progress but was nonetheless frustrating--like an unscratchable itch--to read because it never gets to the point.

I (+majority of judges seem to) agree with Judge 6 best.

3

2

23

@GaryMarcus

you don't need a license for computers or to use search nor to put up a website.

All this does is concentrate power in the hands of incumbents *and* a shift to private bespoke work for wealthy patrons. Goes underground.

I should note this is tacit admission of LLM's power.

1

0

25

@317070

@MikePFrank

This is what I mean when I say LLMs aren't personalities but instead minimal hosts/simulators for them. In the blog, a VM is simulated. But one could also set it in a room, add a person & have them look over your shoulder as you typed instead. Add a dog. It will track them all.

1

1

25

@Miles_Brundage

Still, there's nothing remotely approaching their scripted demos from a complexity of movement plan perspective.

4

0

25

@KevinAFischer

@Teknium1

@JagersbergKnut

Whoa. These are mildly insane from a 7B model! This is finally something good enough to do interesting stuff with.

1

2

23

@Grady_Booch

One thing is we don't know what statistical engine at scale means. Our intuitions rely on low dimensional distributions, discrete lookup tables & simple state machines. These are very misleading for high dimensional, distributed dynamic energy minimizing circuit based functions

0

1

24

@samlakig

@EricHallahan

@kurumuz

@JeffLadish

For better than GPT-J, likely cost effective to just use ChatGPT API. Most LLM pollution I encounter has been in search, sites w. lots of incorrect question gen & answers built around trending keywords. Likely derived from T5 class, which are best-of-class and widely accessible

2

0

23

@GaryMarcus

The latest GPT is better at this:

2

0

21

@spiantado

@OpenAI

@Abebab

@sama

I got these. I think there's some luck of the draw in the level of bigotry generated but telling it to comment on its choices seems to v. significantly suppress this?

(eg it sez: In this program, we are assuming that all children's lives should be saved regardless of their race)

2

1

22

@alexjc

@Miles_Brundage

Exactly, even though scripted, the walking, running, jumping and flips are fluid and well executed. And their model predictive control--getting hardware to move like that is impressive. Movement in their learning counterparts is 4x sped-up video of opening drawers while on wheels.

0

0

22

@rasmansa

@EPoe187

@EverydayFinance

But I am curious, see. @EPoe187 any thoughts on what holds for a country if its mean IQ is 75 or 70? Jobs, industry, governance and so on. What do you suppose is the real world significance? None?

0

0

15

@alexjc

Are we certain it's GPT-4? Bing's GPT seems stronger than GPT-3.5 but can't say for sure it's GPT-4.

1

0

2

@norvid_studies

Without an invariant quantity constraining which events can be causally connected via the exchange of information, it is unclear if coherent agents/memories and learning could occur.

The speed of light enables a causal understanding of the world. Things downstream like energy c

2

2

20

@JeffLadish

There are key inaccuracies here that make it difficult to accept the full conclusion.

1) rate of improvement is insane

Yes but it is very uneven. And the part that's advanced most slowly is abstract reasoning and orchestrating of multi-step computation

2

0

19

@DanielleFong

I don't think this is correct. Something/someone made a mistake somewhere. The model architecture which defines the NN is not a sparse one and there is nothing of that sort mentioned in the paper.

1

1

20

@ethanCaballero

They probably don't understand in a sense meaningful to most humans.

But it is a common misapprehension amongst humans that understanding is at all necessary for competence.

Does exhaustive enumeration understand chess? Does it matter if you are losing to it?

1

3

19

@kareem_carr

physics = what if everything was approximately a spring?

statistics = let's hide all our assumptions and pretend the world is simple

economics (done right) = basically control theory

deep learning = if brute force ain't working, you're surely not using enough

0

0

18

@xlr8harder

I believe this non-ironically. Think a lot about complex sets of top-down emergent constraints that might, given the right transformation, be seen as having independent agency. Many cultural traditions as self-evidencing Memetic parasites or symbiotes that infect human minds

0

0

18

@ylecun

FWIW, fellow Turing award winner has this to say:

> The tension between reasoning and learning has a long history, reaching back at least as far as Aristotle, who, as already mentioned, contrasted the “syllogistic and inductive”

He defines theoryful as probabilistic || logical

1

4

19

@ParchmentScroll

@Teysa_Envoy

@pixelandthepen

@queer_queenie

The way I read it was as an in-universe sentiment that's also reflective of how the average person in our reality thinks. I didn't get the impression that they actually hold those views

I think a decent part of cyberpunk (what is it actually) looks at what is human, emotion, self?

1

0

13

@JeffLadish

Note that the past is not an oracle for the future. Vinge highlighted the stagnation route in his original article.

In terms of speed, hardware performance did level off in early '00s due to Death of Dennard Scaling. As of now, GPUs continue to get ever more power hungry.

4

2

17

@JodyShenn

@MudMudJubJub

@EmmaHill42

@Jody_Bundrick

@ClassicDadMoves

@OwensDamien

Like spasming of arms, happiness and confusion, uncertainty--as if surprised by and integrating new information.

2

1

14

@Origamigryphon

@Julicitizen

@RawTVMoments

Pretty sure Ms Frizzle or her school bus are at least Class 4 Reality Warpers. His face didn't freeze; it was a triggered protective shield and punishment she placed for anyone silly enough to remove their helmet.

0

0

15

@octonion

@ImogenBits

Things can be amazing accomplishments, particularly for their time, and still contain design decisions and user affordances that have not held up in hindsight.

0

1

14

@Ted_Underwood

I think the UX and UI had a big role to play in this. It also explains things well. Even while being not better (possibly worse) than Galactica on technical topics, people somehow trust it more.

0

0

17

@nrfulton

@KamerynJW

Please don't paint with such broad strokes. I'm a programmer and am also a finitist but only with regards to the physical world and only when I'm not being a Platonist.

In neither case do I believe integers have the same cardinality as the reals or Cantor's argument is wrong.

2

0

16

@primalpoly

Are you deliberately misrepresenting to maximize your attention potential? What does the development index of a country have to do with the moral standing of a typical person that lives there? It's indexed on an irrelevancy, like rating people by the number of shoes they own.

0

0

13

@moultano

Also an accessibility issue. Wikipedia + a good number of mathjax & katex pages have decent text alts for math. Wikipedia math text super messy; often stripped in scrapes but can use regex to clean up and keep. Don't forget books. Just need math detector. Image to tex is solid

1

0

17

@EigenGender

I think it's because fusion needs some amount of understanding while the bitter lesson is about outsourcing as many thinking decisions as possible to SGD/search.

0

0

14

@GaryMarcus

What about that most people in the world live under despotic and autocratic regimes? That is, most governments can't be trusted to do the right thing.

Do people in those countries get yet another self-chosen group dictating to them what is and isn't?

1

0

16

@aaron_defazio

@davidchalmers42

Oh, in 2011, Knoll & de Freitas wrote a paper on PAQ8--an early but sophisticated compressor utilizing neural net/mixtures of experts/dynamic ensembles in modeling language/sequences. Showed arch as general, able to predict text, play RPS, classifiers &c

1

4

14

@architectonyx

People have it backwards.

It's similar to brute force, everything is inferior to it. A properly constructed lookup table is perfect. Every model will be an approximation of it. Intelligence is needed because the perfection of a True Lookup Table is not physically achievable.

1

0

15

@bio_bootloader

It's not just tokens encountered but diversity of implicit tasks in the corpus. And also, to get enough tokens to work out long tail, rare complex concepts. Likely what this will do is assign more probability mass to the small set of tropes the authors will be using.

0

0

15

@nearcyan

Don't forget Ising models (-> Hopfield -> attention), tropical rationals (ReLU FFN) and differential calculus (where the learning occurs)!

0

1

14

@arankomatsuzaki

Neat!

Every day, step by step, LLMs creep closer to full blown (Bayesian?) probabilistic inference.

1

0

15

@nearcyan

LLMs are very passive.

To get AI where autonomy might pose a risk requires EXPUNGED. Just inference is insufficient since, EXPUNGED.

0

1

14

@GozukaraFurkan

@Yampeleg

More probably, the model was so heavily finetuned on GPT4 that it triggers shapes (internal associations) much like GPT4's own would, so GPT4 judges it very highly. Even if wrong. Like:

"That sounds very much like what I'd say if I wasn't paying attention. Which is excusable. Pass".

1

0

14

@ligma__sigma

@goth600

This happens a lot. It generates answers that look right but miss key subtle details. It should be used as a lead generator or to prepare you with vocabulary and concepts for proper research, not as a question answerer (yet).

1

0

14