shwaytaj raste

@shwaytaj

Followers: 929 | Following: 7K | Statuses: 5K

Product @miro. Mentor https://t.co/xXWyV5P34i. Building https://t.co/axzQHxxEK5 https://t.co/0UAfi7wcd6

Dublin City, Ireland

Joined February 2010

Fantastic post. Too many good PMs get overlooked because of this. I think of all the "Invisible Work and micro-decisions" that really good PMs make to ensure the team does right by the customers and the business, but these don't get surfaced because they aren't a "criterion".

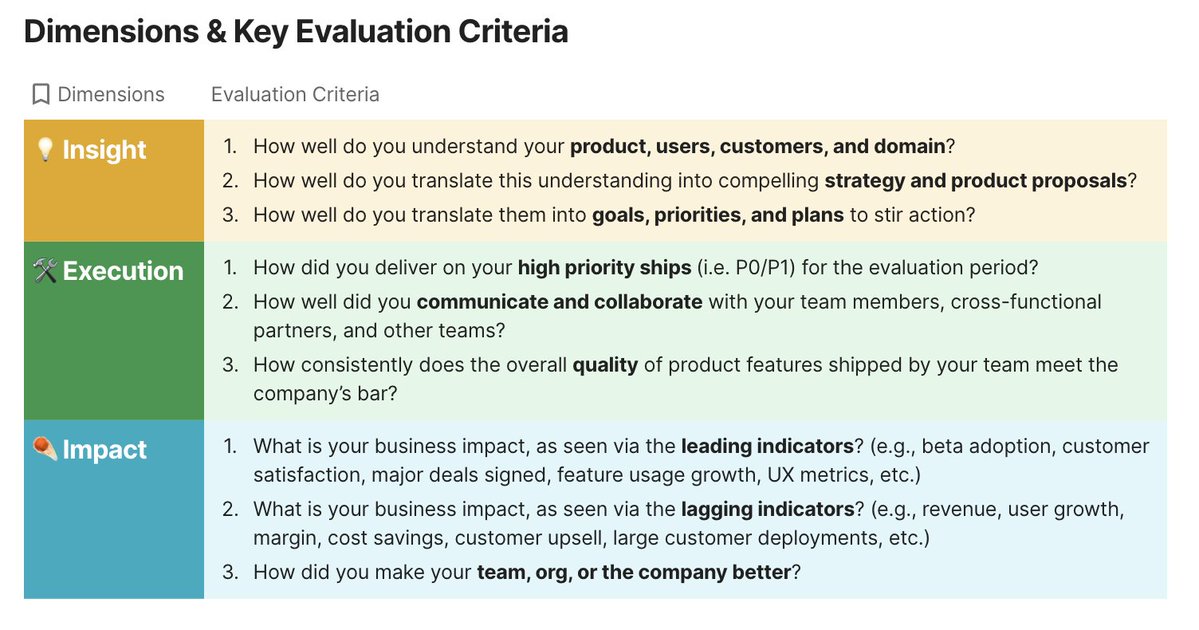

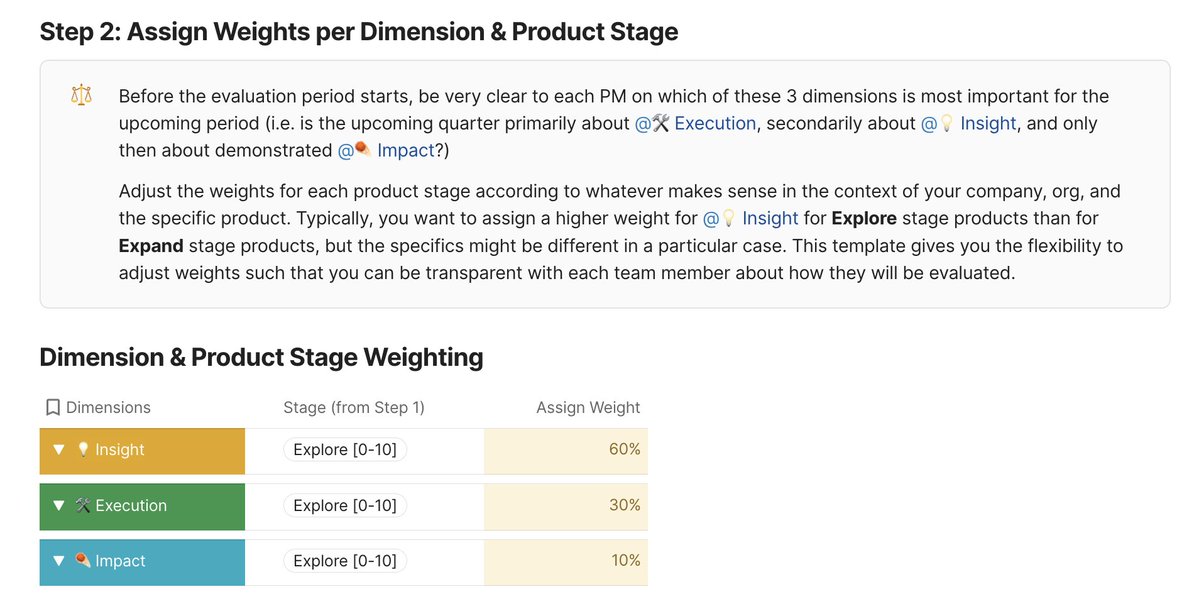

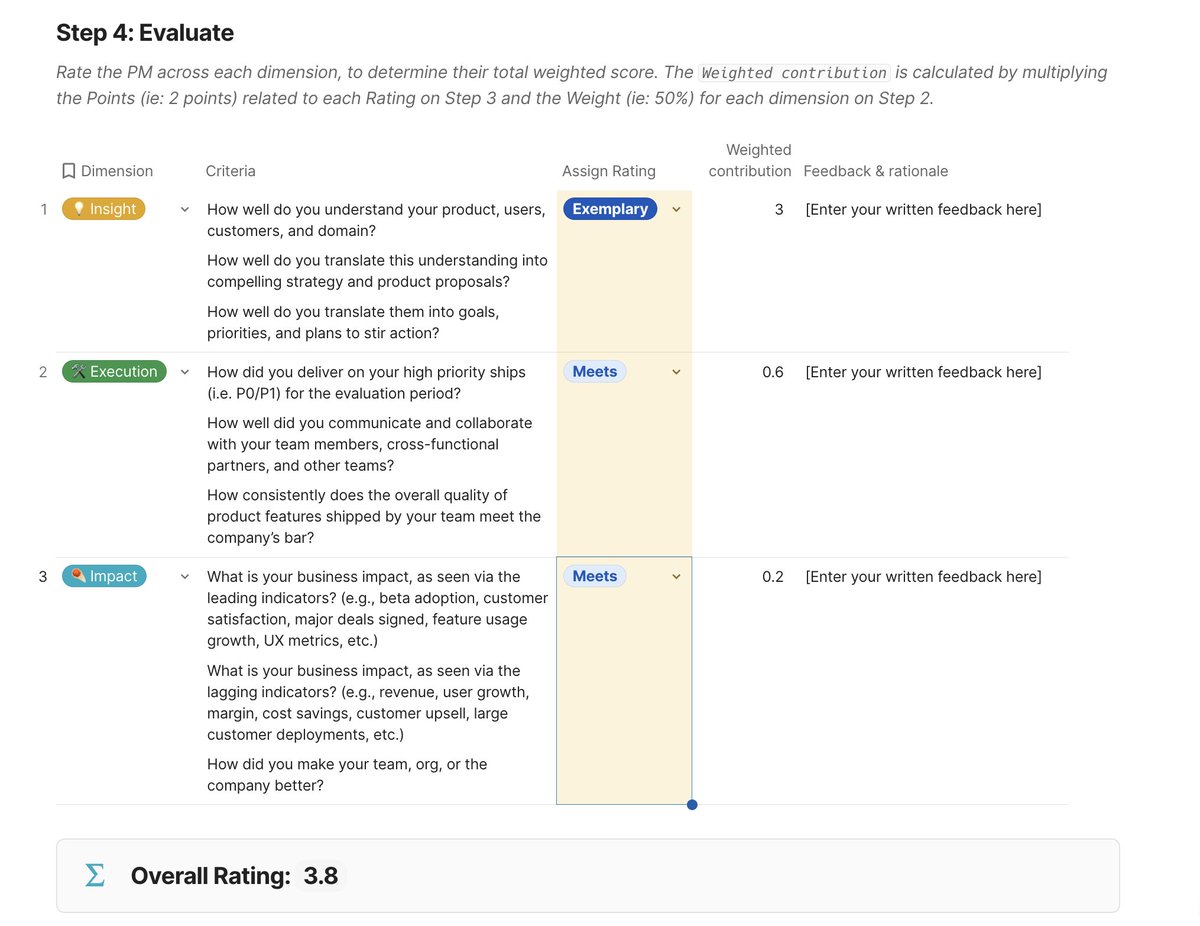

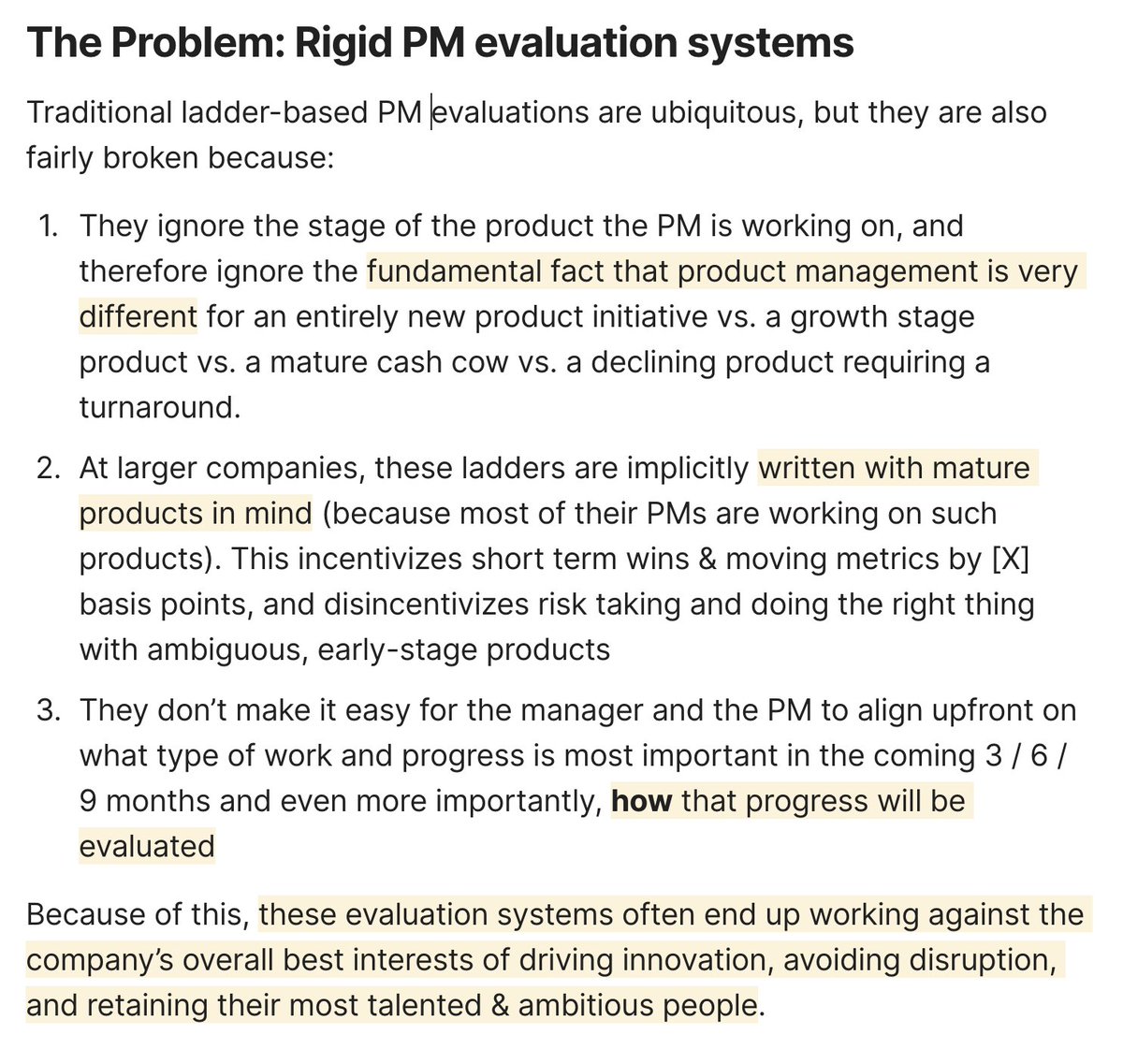

✨ New resource: a PM Performance Evaluation template (along with a calibration meeting story)

Throughout my 15+ years as a PM, I’ve consistently felt that ladder-based PM performance evaluations seem broken, but I couldn’t quite find the words to describe why.

Early on in my PM career, I was actually part of the problem: I happily created or co-created elaborate PM ladders in spreadsheets, calling out all sorts of nuances between what “Product Quality focus” looks like at the PM3 level vs. at the Sr. PM level. (Looking back, it was a non-trivial amount of nonsense, and having seen several dozen ladder spreadsheets at this point, I can confidently say this is the case for >90% of them.)

Then at some point, I saw the light. Here’s how (story time):

At some point late in my PM leadership career (this must have been year 12 or year 13), I found myself in a calibration meeting. This is a meeting where a select group of senior PM leaders get together to discuss the performance of the PMs in the org (the goal being to rate PMs as fairly as possible and to remove any inconsistencies across individual teams).

We were discussing the performance of a PM, let’s call him Bob. Bob did not report to me, but I had seen Bob’s work very closely (he worked closely with a couple of PMs who reported to me). Bob had very strong peer reviews and a very strong manager review, and I personally thought that Bob was a very strong PM.

But the discussion in the calibration meeting (which is largely driven by the ladder spreadsheet) was turning in a direction I wasn’t expecting. Someone in the room said “well, I can see all the strong reviews and the execution, but I am concerned Bob hasn’t shown any strategic insight over the past 6 months [provides more evidence to support the comment]. So according to our ladder, they don’t meet the requirements for an Exceeds or Strongly Exceeds rating”. Some other meeting participants dutifully chimed in with a +1 to that comment.

The room was almost ready to move on to the next PM when someone else in the room asked: “well, but did Bob do what’s right for his product and users, what he was required to do in this situation?”. That was a wonderful question, so I chimed in with my view: “Bob did everything right, in my opinion. Yes, Bob did not check the box in the career ladder for what we expect on strategic insight, but he did what’s right for the company, so it would not make sense for us to penalize him just because he didn’t check that one box over the past 6 months”.

Bob eventually got a better rating than the group was initially leaning towards, and I think Bob is still at that company, so it all worked out, I guess.

But this calibration conversation prompted me to really rethink how we do PM performance evaluations. That then led me to develop the Insight-Execution-Impact framework for PM Performance Evaluations, which you can see below:

I then used this framework informally to guide performance conversations and feedback for PMs on my team at Stripe, and I have also shared it with a dozen founders who’ve adapted it for their own performance evaluations as they established more formal performance systems at their startups.

And now, you can access this framework as an easy-to-update, easy-to-copy @coda_hq doc here:

This template makes it very easy to create greater clarity & transparency on how your performance is being evaluated as a PM. As you select the stage of the product the PM is working on, the doc updates the weights you’d assign to Insight, Execution, and Impact (e.g. you cannot expect regular business impact metrics to move in 3 months for an early Explore stage product).

This table is then where the PM can clearly see how they did along the dimensions of Insight, Execution, and Impact during the evaluation period, along with the weighted contribution of each and written feedback from the manager with more details.

How to use this template as a manager?

In a small company that hasn’t yet created the standard mess of elaborate spreadsheet-based career ladders, you might consider adopting this template as your standard way of evaluating and communicating PM performance (and you can marry it with other sane frameworks such as PSHE by @shishirmehrotra to decide when to promote a given PM to the next level, e.g. GPM vs. Director vs. VP).

In a larger company that already has a lot of legacy, habits, and tools around career ladders & perf, you might not be able to wholesale replace your existing system & tools like Workday. That is fine. If this framework resonates with you, I’d still recommend using it to have meaningful conversations with your team members about what to expect over the next 3 / 6 / 9 months, and to provide more meaningful context on their performance & rating. When I was at Stripe, we used Workday as our performance review tool, but I first wrote the feedback in the form of Insight - Execution - Impact (privately) and then pasted the relevant parts of my write-up into Workday.

So that’s it from me. Here’s the template once again (you can easily copy it and play around with it [0]): 📈

[0] And if you want more of your colleagues to see the light, there’s even a video in that Coda doc, in which I explain the problem and the core framework in more detail.
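The stage-dependent weighting described in the thread boils down to a weighted sum: each dimension's rating times the weight for the product's stage. The sketch below is hypothetical and is not the Coda template's logic. The stage names other than Explore, all weight values, and the 1 to 5 rating scale are illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical weights per product stage for Insight, Execution, and Impact.
# "explore" echoes the Explore stage mentioned in the post; "scale" and all
# numeric values are illustrative assumptions, not the template's values.
STAGE_WEIGHTS: dict[str, dict[str, float]] = {
    "explore": {"insight": 0.5, "execution": 0.4, "impact": 0.1},
    "scale": {"insight": 0.2, "execution": 0.3, "impact": 0.5},
}


@dataclass
class Ratings:
    """Per-dimension ratings on an assumed 1 (well below) to 5 (well above) scale."""
    insight: float
    execution: float
    impact: float


def weighted_contributions(stage: str, ratings: Ratings) -> dict[str, float]:
    """Multiply each dimension's rating by the weight for the product's stage."""
    weights = STAGE_WEIGHTS[stage]
    # Reject weight tables that don't add up to 1.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError(f"weights for stage {stage!r} must sum to 1")
    return {dim: weight * getattr(ratings, dim) for dim, weight in weights.items()}


def overall_score(stage: str, ratings: Ratings) -> float:
    """The overall score is the sum of the weighted contributions."""
    return sum(weighted_contributions(stage, ratings).values())


if __name__ == "__main__":
    pm = Ratings(insight=3, execution=5, impact=2)  # hypothetical ratings
    for stage in STAGE_WEIGHTS:
        print(stage, weighted_contributions(stage, pm), round(overall_score(stage, pm), 2))
```

With these made-up weights, the same ratings give about 3.7 for an Explore-stage product and 3.1 for a scale-stage one, illustrating why weak impact numbers shouldn't dominate the evaluation of an early product.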

@Mick_O_Keeffe I have dozens of Irish friends and colleagues, and almost all of them genuinely have a really good sense of humour. Having one yourself and assuming good intent helps.

Loads of gold nuggets in the blog. I remember reading this, and its simplicity, though harsh, has stuck with me.

There are 2 harsh truths for startups: 1. Until you have users, you don’t have a product. 2. Until they’re paying you, you don’t have a business. Can't believe this article is now 6 years old; thankfully, the advice still holds up today.

RT @zmiro: We're super excited to be sharing AskMore on @ProductHunt today: We built AskMore with @arindambarman…

Anyone doing user research knows that some users fall out of their funnel, either because they don't have the bandwidth for a synced 1:1 or because they aren't comfortable with face-to-face interviews. (This applies to the interviewer as well, btw!) You miss out on loads of insights.

In just a few days, more than 40 people replied. It would have taken weeks to get that many traditional interviews. And we probably wouldn't have been able to get that many calls anyway. But, were the insights valuable? Could we have gotten the same insights from a survey? (5/12)

This is a big problem. ESOPs, RSUs and ESPPs behave differently and are taxed differently in different parts of the world. This is why I started . It's early days, but it'll at least give you a broad idea of your taxes, if not to the precise decimal.

To fellow tech bros working for Indian startups: ESOPs are taxed twice, making them a tricky asset to book profits from. When exercising ESOPs, you'll pay tax on a gain you haven't realized yet.
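To make the "taxed twice" point concrete, here is a minimal sketch under a simplified reading of the Indian rules: at exercise, the gap between the fair market value (FMV) and the exercise price is taxed as salary income (a perquisite), and at sale, only the further gain over that FMV is taxed as a capital gain. The flat tax rates are hypothetical inputs, not actual Indian rates, and holding periods, slabs, and loss set-off are deliberately not modelled.

```python
from dataclasses import dataclass


@dataclass
class EsopExercise:
    shares: int
    exercise_price: float   # per-share price paid to exercise the options
    fmv_at_exercise: float  # per-share fair market value on the exercise date
    sale_price: float       # per-share price when the shares are eventually sold


def taxable_amounts(e: EsopExercise) -> tuple[float, float]:
    """Return (perquisite taxed at exercise, capital gain taxed at sale)."""
    # Event 1: the paper gain at exercise is taxed as salary income,
    # even though no shares have been sold and no cash has been received.
    perquisite = (e.fmv_at_exercise - e.exercise_price) * e.shares
    # Event 2: the FMV at exercise becomes the cost basis, so only the further
    # gain (or loss, if negative) is taxed as a capital gain on sale.
    capital_gain = (e.sale_price - e.fmv_at_exercise) * e.shares
    return perquisite, capital_gain


def tax_due(e: EsopExercise, income_tax_rate: float, capital_gains_rate: float) -> tuple[float, float]:
    """Return (tax due at exercise, tax due at sale) under flat hypothetical rates.

    Negative amounts are floored at zero; loss set-off is not modelled.
    """
    perquisite, capital_gain = taxable_amounts(e)
    return (max(perquisite, 0.0) * income_tax_rate,
            max(capital_gain, 0.0) * capital_gains_rate)


if __name__ == "__main__":
    example = EsopExercise(shares=1000, exercise_price=10.0,
                           fmv_at_exercise=100.0, sale_price=150.0)
    print(taxable_amounts(example))      # (90000.0, 50000.0)
    print(tax_due(example, 0.30, 0.10))  # hypothetical rates
```

The first element of `tax_due` is owed at exercise, before any sale, which is exactly the "gain you haven't realized yet" problem.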

@harsh_vardhhan I struggled with this early on. The concept is quite alien. If it's useful, try this (sorry for the plug)

More tweaks to over the weekend. Added support for the Netherlands (a slightly complicated system). #buildinpublic
