Pantone Cap

@pantone_cap

Followers

7

Following

32

Statuses

49

@iamDCinvestor In 20 minutes it did what took an assistant a week, at the same level of quality. It's my favorite OpenAI product. Learning things in depth is so much easier now.

1

0

3

@StaniKulechov When will we be able to deposit AAVE on @base? It really sucks that we’re forced to pay high gas fees on the ETH L1 instance. Arbitrum supports it but Base doesn't, and I'm confused as to why.

0

0

1

@eastdakota @Cloudflare This is better than GPT-4o performance* at ~$0.50 / 1M tokens? *Caveat being you need to let the model spend additional time reasoning to get the same level of accuracy.

0

0

0

Thoughts I had around distilling reasoning models:

- From my shit tests on R1-llama7B, I agree with the finding that when you distill reasoning models by training smaller models on the larger model’s “thinking” tokens, you keep a lot of the reasoning capabilities.
- At the same time, a lot of specific info still doesn't distill down well when you lower model size. The 7B model did really well in my shit tests on reasoning (i.e. math) but sucked at knowing facts off the top of its head (70B was much better and MoE [671B] even better).

If we’re able to maintain reasoning capabilities but lose that specific information, isn’t this similar to something like a human brain? We don’t know everything off the top of our head, but we can quickly research and reason through new problems.

So is the future distilled reasoning models w/ super long contexts?

Out: back-and-forth RAG
In: putting as much context as possible in the first prompt and giving it tons of test-time compute to reason it out?

gemini-2.0-flash-thinking-exp-01-21 (1M context) and R1 (128K context rn, but someone is def gonna take this to 1M context fast) are the best examples of this now.

Distilling these models will make smart AI far more accessible to the masses, but the pros will still want the smartest models.

It's very unlikely that compute demand will turn around long term, but short term, will it turn more towards inference compute and less towards training compute? If so, how long will it take til the major labs use more of their GPU clusters for inference than training?

0

0

0

I had to sell my $MU pre-market today, while I was still in profit. The story of HBM is murky with DeepSeek and distilled models. Fast inference providers like Groq use SRAM (manufactured by Samsung), and we may not need as much HBM as we thought we did to train models. Need some time to think.

0

0

0

I think the story here is about distilled models and their impressive capabilities. The market is pricing in the fact that reasoning capabilities seem to hold up even when these models get distilled. Training a smaller model on the tokens from the bigger models works!

That doesn't mean the smaller models are better at recalling specific information, but it does mean that given a long enough and relevant context, they can reason through to the final answer. A distilled model using 16x more tokens can still use less compute & memory than a much larger non-CoT model. R1-llama7B was on par with GPT-4o in reasoning benchmarks!

The market needs time to digest this. I don't believe this is a flash crash.

0

0

5
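A rough way to sanity-check the "16x more tokens" comparison in the post above is sketched below. It assumes the common approximation that a dense transformer's forward pass costs about 2 × parameters FLOPs per generated token, and it ignores attention cost and memory entirely. The function names and the 500-token answer length are hypothetical, and the larger model sizes are just the 70B and 671B figures mentioned elsewhere in this feed, not the size of any specific non-CoT model.

```python
# Back-of-the-envelope sketch, not a benchmark.
# Assumption: forward-pass compute for a dense model is ~2 * params FLOPs
# per generated token (attention cost and KV-cache memory are ignored).

def inference_flops(params: float, tokens: float) -> float:
    """Approximate FLOPs to generate `tokens` tokens with a dense model."""
    return 2.0 * params * tokens


def break_even_params(small_params: float, token_multiplier: float) -> float:
    """Dense size the larger model must exceed for the small model,
    generating `token_multiplier` times more tokens, to stay cheaper."""
    return small_params * token_multiplier


SMALL_PARAMS = 7e9      # the 7B distilled model from the post
TOKEN_MULTIPLIER = 16   # the post's "16x more tokens"
ANSWER_TOKENS = 500     # hypothetical answer length for the larger model

if __name__ == "__main__":
    threshold = break_even_params(SMALL_PARAMS, TOKEN_MULTIPLIER)
    print(f"break-even dense size: {threshold / 1e9:.0f}B parameters")

    # 671B is treated as dense here for illustration only. A mixture-of-experts
    # model activates only part of its parameters per token, so its real
    # per-token cost is lower than this estimate.
    for large_params in (70e9, 671e9):
        small = inference_flops(SMALL_PARAMS, ANSWER_TOKENS * TOKEN_MULTIPLIER)
        large = inference_flops(large_params, ANSWER_TOKENS)
        print(f"vs {large_params / 1e9:.0f}B: distilled/large ratio = {small / large:.2f}")
```

Under these assumptions the distilled model only comes out ahead on compute when the larger model is well above roughly 16 × 7B parameters, so the claim depends on how large the non-CoT model being compared against actually is.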

@ShardiB2 Nah for real, been following you for the last 3 years on my main account and you are the GOAT!

3

0

1

@crypto_condom The address you posted is Lido Advisors, a completely different entity from Lido Finance.

0

0

0

@crypto_condom What are you talking about? Huge fan of $LDO but they're based in the Cayman Islands. It's right there in their terms and conditions.

0

0

0

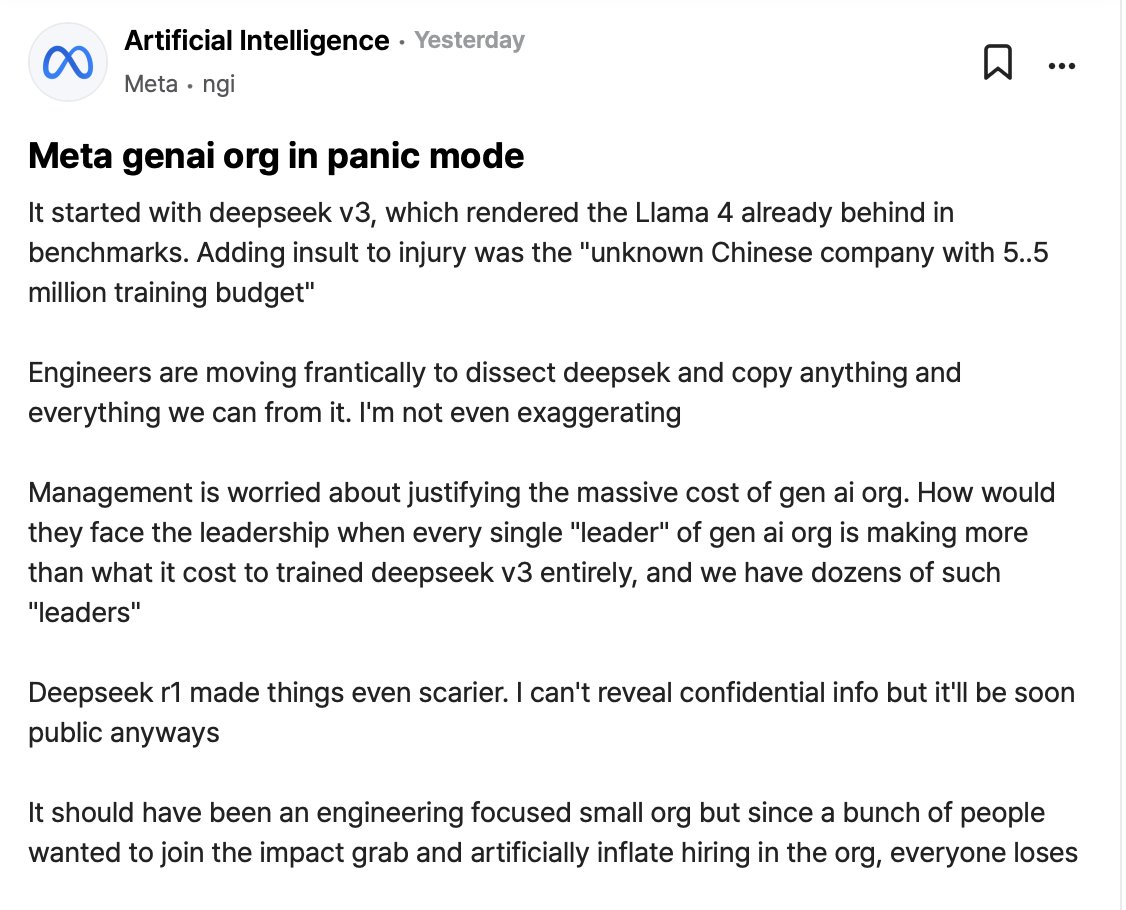

@ShardiB2 Did you see this? China & DeepSeek R1 are putting pressure on all the major LLM providers (might be why OpenAI made o3-mini free for all users).

3

0

3

@ShardiB2 Yes, link both ETH and Solana wallets, but you claim on Solana. It's a Solana token ultimately.

6

0

1