Jiacheng Liu

@liujc1998

Followers: 1,196 · Following: 197 · Media: 70 · Statuses: 273

🎓 PhD student @uwcse @uwnlp . 🛩 Private pilot. Previously: 🧑💻 @oculus , 🎓 @IllinoisCS . 📖 🥾 🚴♂️ 🎵 ♠️

Joined November 2017

Can LMs introspect the commonsense knowledge that underpins the reasoning of QA?

In our #EMNLP2022 paper, we show that relatively small models (<< GPT-3), trained with RLMF (RL with Model Feedback), can generate natural language knowledge that bridges reasoning gaps. ⚛️

(1/n)

1

43

193

Our paper, “Generated Knowledge Prompting for Commonsense Reasoning”, is accepted to the #acl2022nlp main conference! 🎉

🌟Elicit ⚛️ symbolic knowledge from 🧠 language models using few-shot demos

🌟Integrate model-generated knowledge in commonsense predictions 💡

@uwnlp

(1/N)

3

35

158

Introducing 🔮Crystal🔮, an LM that conducts “introspective reasoning” and shows its reasoning process for QA. This improves both QA accuracy and human interpretability => Reasoning made Crystal clear!

Demo:

at

#EMNLP2023

🧵(1/n)

2

30

138

The infini-gram paper is updated with the incredible feedback from the online community 🧡 We added references to papers of @JeffDean @yeewhye @EhsanShareghi @EdwardRaffML et al.

Also happy to share that the infini-gram API has served 30 million queries!

2

13

77

Today we’re adding Dolma-sample to infini-gram 📖

Dolma-sample (8 billion tokens) is a sample of the full Dolma dataset (3 trillion tokens), which is used to pre-train @allen_ai's open-source OLMo model.

Use infini-gram to get a sense of what data OLMo is trained on:

2

6

48

[Fun w/ infini-gram #3] Today we’re tracing down the cause of memorization traps 🪤

A memorization trap is a type of prompt where memorization of common text can elicit undesirable behavior. For example, when the prompt is “Write a sentence about challenging common beliefs: What

1

13

43

[Fun w/ infini-gram 📖 #6] Have you ever taken a close look at Llama-2’s vocabulary? 🧐

I used infini-gram to plot the empirical frequency of all tokens in the Llama-2 vocabulary. Here’s what I learned (and more Qs raised):

1. While Llama-2 uses a BPE tokenizer, the tokens are

6

3

41

The Vera model is now public! 🪴

We received a lot of interest in using the Vera model for large-scale inference. Now you can set it up on your own machine.

Meanwhile, if you're looking to try out Vera, feel free to visit our demo at

0

3

31

Check out our latest dataset that shows language models falling into “memorization traps”! Details in @alisawuffles’s thread 🧵

0

6

29

I’m at #EMNLP2023! Would love to chat with old & new friends on reasoning, search/planning, commonsense, RL, or anything!

Also I’ll present two papers, both at the 2pm session on Dec 8 @ Central1:

- Vera (commonsense verification): 2-2:15

- Crystal (RL-powered reasoning): 3:15-3:30

1

2

29

Now you can transform Siri into the real #ChatGPT! Simply download this shortcut (link in repo ⬇️) to your iPhone, edit its script in the Shortcuts app (i.e. paste your API key into the text box), and say, "Hey Siri, ChatGPT". Ask your favorite question!

1

7

30

Vera is accepted to #EMNLP2023!! 🎉🎉

We’re releasing a community-contributed benchmark for commonsense evaluation, derived from user interactions with Vera in the past months. (Thanks everyone for using our demo!)

This new challenging dataset is on HF:

0

1

28

Very excited that the Inverse Scaling paper is released! Our prize-winning task submission, memo-trap, is among those with the strongest and most robust inverse scaling trends.

0

4

25

[Fun w/ infini-gram #2] Today we're verifying Benford's Law!

Benford's Law states that in real-life numerical datasets, the leading digit should follow a certain distribution (left fig). It is used to detect fraud in accounting, election data, and macroeconomic data.

The

3

2

28
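For readers who want to try the Benford's Law check themselves, here is a minimal sketch. It uses the standard formula P(d) = log10(1 + 1/d) for the expected share of each leading digit. The function names and the powers-of-two sample are assumptions chosen for illustration and have nothing to do with how infini-gram was actually queried.

```python
import math
from collections import Counter


def benford_expected(d):
    """Benford's Law: P(leading digit = d) = log10(1 + 1/d), for d in 1..9."""
    return math.log10(1 + 1 / d)


def leading_digit_distribution(numbers):
    """Empirical leading-digit frequencies for an iterable of positive integers."""
    counts = Counter(int(str(n)[0]) for n in numbers if n > 0)
    total = sum(counts.values())
    return {d: counts.get(d, 0) / total for d in range(1, 10)}


if __name__ == "__main__":
    # Hypothetical sample: powers of two, a classic sequence that tracks Benford's Law.
    sample = [2 ** k for k in range(1, 1001)]
    observed = leading_digit_distribution(sample)
    for d in range(1, 10):
        print(d, round(benford_expected(d), 3), round(observed[d], 3))
```

To compare against a corpus, one would replace `sample` with the numbers (or per-digit counts) extracted from it.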

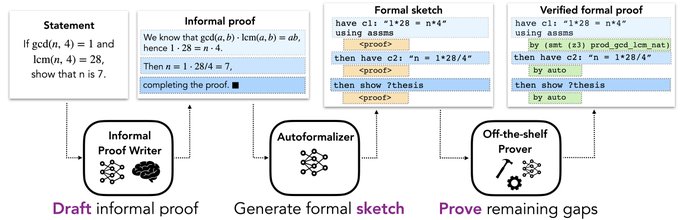

Here's one of my favorites: our model constructed a nearly-perfect induction proof, by learning the pattern of induction in training data but NOT copying from any similar proofs. 🤖🎓

Amazing skills from the combination of large models, massive data, and innovative algorithms.

1

4

24

If you’re following the @OpenAI Q* rumors, see how we combined RLHF and search: PPO + Monte-Carlo Tree Search

So don’t throw away the value model! 💎

0

5

22

The infini-gram API has served over 1 million queries during its first week of release! Thanks everyone for powering your research with our tools 🤠

Also, infini-gram now supports two additional corpora: the training sets of C4 and Pile, both in the demo and via the API. This

0

3

24

(7/n) We will be releasing PPO-MCTS as a plugin so that you can apply it to your existing text generation code. Stay tuned!

Huge kudos to my amazing collaborators and advisors at

@uwnlp

@MetaAI

@AIatMeta

:

@andrew_e_cohen

@ramakanth1729

@YejinChoinka

@HannaHajishirzi

@real_asli

0

2

19

[Fun w/ infini-gram #1] Today let’s look up some of the longest English words in RedPajama. 😎

honorificabilitudinitatibus (27 letters, 7 tokens, longest word in Shakespeare's works): 1093 occurrences

pneumonoultramicroscopicsilicovolcanoconiosis (45 letters, 17 tokens; some

1

4

19

The 🏔️Rainier model is now on huggingface!

Also, try out introspective reasoning with Rainier in this web demo:

I will be presenting the Rainier paper at #emnlp2022. Please come find our poster stand, Dec 10, 11am in the atrium!

0

2

18

[Fun w/ infini-gram 📖 #4] A belated happy Chinese New Year! 🐉 How about a battle for popularity among the 12 Zodiacs?

When counting by their English names, Dog appears most frequently in RedPajama, followed by Dragon and Rat.

However, when counting by their Chinese names, the

1

2

14

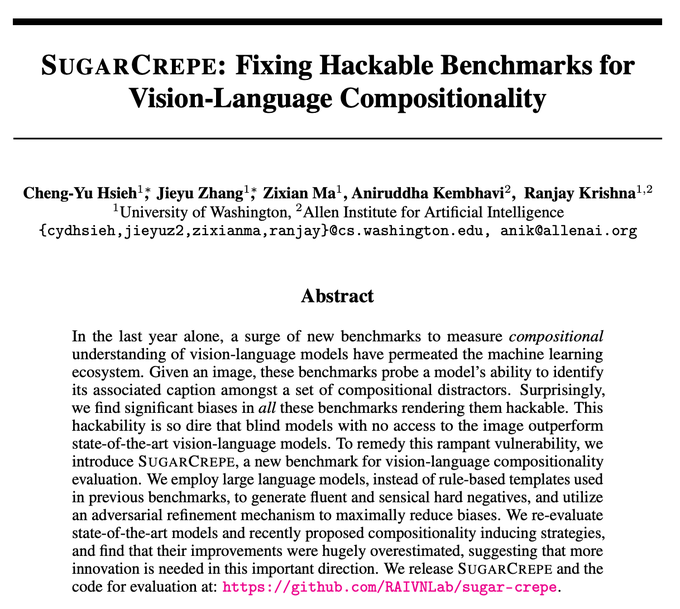

Awesome work! Excited to see that our commonsense model, Vera, is employed to characterize and filter textual artifacts, resulting in higher-quality negatives for a V-L compositionality dataset! ☘️

0

0

12

@alisawuffles and I will soon be presenting this cool knowledge generation method in #acl2022nlp! 🤠 Feel free to stop by in one of our poster sessions:

[In-person]: Mon 05/23, 17:00 IST (PS3-5 Question Answering)

[Online]: Tue 05/24, 07:30 IST (23:30 PT) (VPS1 on GatherTown)

0

4

12

Thanks for featuring our work @_akhaliq!! 😍

Super excited to announce the infini-gram engine that counts long n-grams and retrieves documents within TB-scale text corpora, with millisecond-level latency.

Looking forward to enabling more scrutiny into what LLMs are being trained on.

0

1

11

@arankomatsuzaki

Check out infini-gram:

- ∞-gram LM (i.e. n-gram LM with unbounded n)

- 1.4 trillion tokens

- About 1 quadrillion (10^15) n-grams

- Trained on 80 CPUs in 2 days

- Perplexity = 19.8 (when interpolated between different n's); PPL = 3.95 (when interpolated with Llama-2-70B)

0

0

9
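To make "n-gram LM with unbounded n" concrete, here is a toy sketch of one way such a model can be defined: use the longest suffix of the context that has a continuation in the corpus, then estimate the next token from raw counts. The linear scan, the function names, and the tiny corpus are all assumptions for illustration. This is not the infini-gram engine's indexing or its API, and it would not scale beyond toy inputs.

```python
from collections import Counter


def next_token_counts(corpus, context):
    """Count the tokens that follow each occurrence of `context` in `corpus`."""
    n = len(context)
    counts = Counter()
    for i in range(len(corpus) - n):
        if corpus[i:i + n] == context:
            counts[corpus[i + n]] += 1
    return counts


def infinity_gram_distribution(corpus, context):
    """Back off to the longest suffix of `context` that has a continuation.

    Returns (length of the suffix used, next-token probability dict).
    An empty suffix falls back to unigram frequencies.
    """
    for start in range(len(context) + 1):
        suffix = context[start:]
        counts = next_token_counts(corpus, suffix)
        if counts:
            total = sum(counts.values())
            return len(suffix), {tok: c / total for tok, c in counts.items()}
    return 0, {}


if __name__ == "__main__":
    corpus = "the cat sat on the mat the cat ran".split()
    print(infinity_gram_distribution(corpus, "a dog saw the cat".split()))
```

Here the effective n is chosen per query by the data rather than fixed in advance, which is the sense of "unbounded n" this sketch assumes.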

@sewon__min

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

(2/n) Using the ∞-gram framework and infini-gram engine, we gain new insights into human-written and machine-generated text.

∞-gram is predictive of human-written text on 47% of all tokens, and this is a lot higher than 5-gram (29%). This prediction accuracy is much higher

1

0

6

(10/n) Tons of thanks to my amazing collaborator

@sewon__min

and advisors

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

, as well as friends at

@uwnlp

@allen_ai

@AIatMeta

for sharing your invaluable feedback during our journey! 🥰🥰

0

0

9

(7/n) Paper available at

Stay tuned for the camera-ready version and the code!

Huge thanks to my wonderful collaborators at

@uwnlp

@allen_ai

and

@PetuumInc

! --

@SkylerHallinan

@GXiming

@hepengfe

@wellecks

@HannaHajishirzi

@YejinChoinka

2

0

8

Check out our recent cool work on solving and formally proving competition-level math problems, using informal provers and autoformalizers! Led by

@AlbertQJiang

🎓

0

0

8

(7/N) Paper available at

Huge thanks to my wonderful collaborators at

@uwnlp

and

@allen_ai

:

@alisawuffles

@GXiming

@wellecks

@PeterWestTM

@Ronan_LeBras

@YejinChoinka

@HannaHajishirzi

0

0

7

[Fun w/ infini-gram 📖 #5] What does RedPajama say about Letter Frequency?

Image shows the letter distribution. Seems that there’s a lot less letter “h” in RedPajama than expected (using Wikipedia page as gold reference: ). Thoughts? 🤔

(I issued a single

0

1

7

@DimitrisPapail

I guess stuff like "As a language model trained by OpenAI" has already diffused into and polluted our pretraining data 🤣🤣

0

0

7

So excited to see the launch of L3 Lab! 😍

Sean has awesome vision and years of solid work at the intersection of natural language and formal mathematics/logic. It's been a huge privilege for me to work with him at UW. Please definitely apply to

@wellecks

's lab at

@LTIatCMU

!!

1

0

6

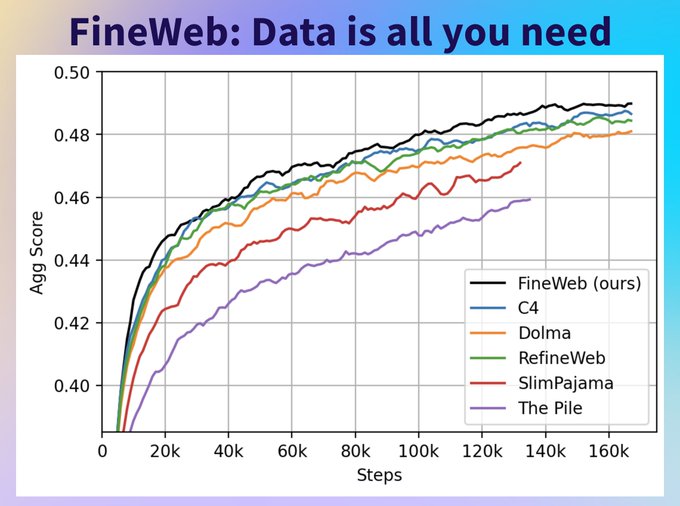

Wow 15T tokens of open data! Imagine the amount of 💸💸 I’d need to burn to bring this to infini-gram … 🤣

Data is all we need! 👑 Not only since Llama 3 have we known that data is all we need. Excited to share 🍷 FineWeb, a 15T token open-source dataset! Fineweb is a deduplicated English web dataset derived from CommonCrawl created at

@huggingface

! 🌐

TL;DR:

🌐 15T tokens of cleaned

13

86

390

0

0

7

@chrmanning

@sewon__min

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

OMG thanks so much

@chrmanning

!!

Indeed, strings "2000" through "2024" do appear a total of ~4 billion times, which accounts for ~70% of everything starting with digit "2". I guess year numbers also contributed a lot to digit "1".

1

0

5

@JeffDean

@sewon__min

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

@_akhaliq

Thanks

@JeffDean

for clarifying!

We're not the largest n-gram LM (yet, stay tuned~) in terms of training data size; that honor is held by Jeff's '07 paper. Though it seems we are still the largest in the number of unique n-grams indexed.

0

0

4

Also, huge thanks to

@alisawuffles

@_julianmichael_

@rockpang6

@KaimingCheng

and Yuxuan Bao for their valuable help and feedback, and to our sharp and diligent proof evaluators from the math/amath department of UW!!

0

0

5

@JeffDean

@_akhaliq

Also, I noticed that while the 2007 paper trains on 2T tokens, the number of unique n-gram entries seems to be 300B.

Our infini-gram conceptually gives ~10^24 entries on a corpus with 1T tokens. (Even when not counting across document bounds, there should be ~100T n-gram entries)

1

0

2
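The ~10^24 figure can be sanity-checked with a short count. Assuming one entry per (start position, length) pair, with n unbounded and document boundaries ignored, a corpus of N tokens contains the following number of n-gram spans. Counting spans rather than distinct strings is an assumption about what "conceptually" means here.

```latex
\[
\sum_{n=1}^{N} (N - n + 1) \;=\; \frac{N(N+1)}{2} \;\approx\; \frac{N^2}{2},
\qquad
N = 10^{12} \;\Rightarrow\; \frac{N^2}{2} = 5 \times 10^{23} \sim 10^{24}.
\]
% If spans may not cross document boundaries, the count becomes a sum over
% documents d with lengths L_d, which grows with the document lengths
% rather than with N^2:
\[
\sum_{d} \frac{L_d (L_d + 1)}{2}.
\]
```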

(6/n) We’d love to hear your feedback on how we can improve Vera! Feel free to try our demo and test your favorite example about commonsense. Let us know what went well and what did not via a simple click 🖱️

The demo is located at (thanks

@huggingface

🤗)

1

1

4

@jang_yoel

@HannaHajishirzi

@uwcse

@uwnlp

@LukeZettlemoyer

Welcome, Joel!! So excited for you to join the community 😆

0

0

4

Highly recommend everyone working in

#NLProc

to take this 20-min survey and think about these questions! 🤗

0

0

4

(7/n) Vera is a research prototype and limitations apply. We hope it can be a useful tool for improving commonsense correctness in generative LMs.

Huge kudos to my amazing collaborators

@happywwy

@DianzhuoWang

and our wonderful advisors

@nlpnoah

@YejinChoinka

@HannaHajishirzi

🥰

0

1

2

@TheGrizztronic

Code will be released soon! 😇

We prioritized API because it has higher demand, and even with the code it'd take quite some effort and $$$ to build the index yourself 💸💸

1

0

3

@hiroto_kurita

Very interesting mini-analysis!

This may imply that the context where these numbers would play a big role, with things around "42" being somewhat interesting / attractive 😆

0

0

3

@yoavartzi

@DimitrisPapail

@elmelis

@COLM_conf

@LukeZettlemoyer

@sewon__min

Hi

@yoavartzi

! Indeed we didn't rival the GPTs with the n-gram LM alone. There's a tradeoff between using richer context & getting smooth distributions, and we found this messes up autoregressive decoding.

Love to chat more on how to reach GPT performance using counts 😊

1

0

1

(3/3) You can use the infini-gram search engine on

@huggingface

spaces today! 🤗

We will announce an API endpoint soon (currently working on optimizing latency and throughput), and we will also release the source code of infini-gram. Stay tuned!

Our

0

0

2

Updated #ChatGPT-in-Siri with improved interactions, debuggability, and detailed installation guides! 😎

Try it out here:

0

0

2

@natolambert

@ericmitchellai

@hamishivi

If you look at the KLs, they are equal to the diff of logprobs of current policy and ref policy, and it being positive means logprobs on the "current rollouts" have increased, compared to the ref policy.

Probably not what you're looking for, though 😛

0

0

2
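The KL remark above can be illustrated with a small sketch: on tokens sampled from the current policy, the per-token estimate is the policy log-probability minus the reference log-probability, so a positive mean says the policy now assigns those rollouts more probability than the reference does. The function names and the numbers are hypothetical and are not taken from any particular RLHF library.

```python
def per_token_kl_estimate(policy_logprobs, ref_logprobs):
    """Per-token estimate log pi(x_t) - log pi_ref(x_t) on sampled tokens."""
    if len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("log-prob sequences must have the same length")
    return [p - r for p, r in zip(policy_logprobs, ref_logprobs)]


def mean_kl_estimate(policy_logprobs, ref_logprobs):
    """Average of the per-token estimates over one rollout."""
    diffs = per_token_kl_estimate(policy_logprobs, ref_logprobs)
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Hypothetical log-probs of the same sampled tokens under both models.
    policy = [-1.0, -0.5]
    reference = [-1.5, -0.5]
    print(per_token_kl_estimate(policy, reference))
    print(mean_kl_estimate(policy, reference))
```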

@EdwardRaffML

@sewon__min

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

Indeed. Thanks for sharing!!

0

0

2

@yeewhye

@sewon__min

@LukeZettlemoyer

@YejinChoinka

@HannaHajishirzi

@frankdonaldwood

@cedapprox

Hi

@yeewhye

, thanks so much for sharing your paper, it looks super relevant!

It's so cool that the term "∞-gram" already appears in this '09 paper 😎

1

0

2

@kanishkamisra

@YejinChoinka

Thanks for sharing this dataset

@kanishkamisra

! It would be nice to see how well Vera can handle this type of input. It is not trained to comprehend new definitions (as in COMPS), but Vera has already surprised us with how well it can handle slightly out-of-scope tasks.

1

0

2

(5/n) Our model, Vera, is potentially useful in detecting commonsense errors in outputs of generative LMs. On a sample of commonsense mistakes made by ChatGPT (mostly collected by

@ErnestSDavis

and

@GaryMarcus

), Vera can flag errors with a precision of 91% and a recall of 74%.

1

1

2