leloy!

@leloykun

Followers 4K • Following 44K • Media 587 • Statuses 8K

Math @ AdMU • NanoGPT speedrunner • soon RE @ ███ • prev ML @ Expedock • 2x IOI & 2x ICPC • Non-Euclidean Geom, VLMs, Info Retrieval, Structured Outputs

Joined November 2018

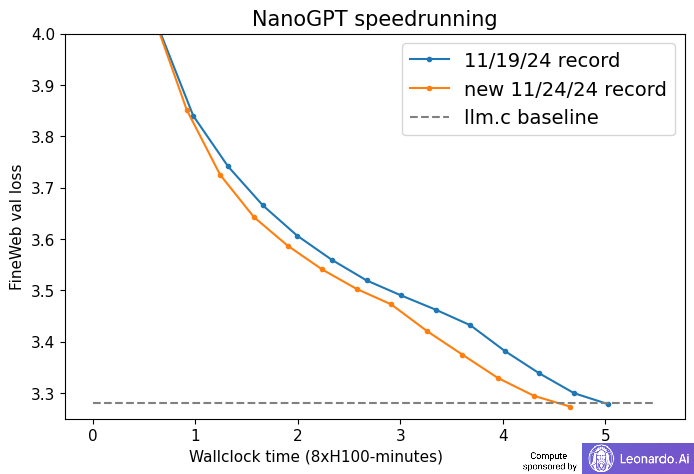

Sub 3-minute NanoGPT Speedrun Record. We're proud to share that we've just breached the 3-minute mark! This means that with an ephemeral pod of 8xH100s that costs $8/hour, training a GPT-2-ish-level model now costs only $0.40! What's in the latest record? A 🧵

Remember the llm.c repro of the GPT-2 (124M) training run? It took 45 min on 8xH100. Since then, @kellerjordan0 (and by now many others) have iterated on that extensively in the new modded-nanogpt repo that achieves the same result, now in only 5 min! Love this repo 👏 600 LOC

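The $0.40 figure is the pod's hourly rate multiplied by the run time. Here is a quick check. It assumes, as the tweet phrases it, that $8/hour is the price of the whole 8xH100 pod. It uses 3 minutes as an upper bound, since the record is under 3 minutes:

```python
# Figures from the tweet; $8/hour is assumed to be the price of the whole pod.
POD_RATE_USD_PER_HOUR = 8.0
RUN_MINUTES = 3.0  # upper bound: the record is under 3 minutes


def run_cost_usd(rate_per_hour: float, minutes: float) -> float:
    """Cost of renting a pod for `minutes` at `rate_per_hour` dollars/hour."""
    return rate_per_hour * minutes / 60.0


cost = run_cost_usd(POD_RATE_USD_PER_HOUR, RUN_MINUTES)
print(f"${cost:.2f}")  # prints $0.40
```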

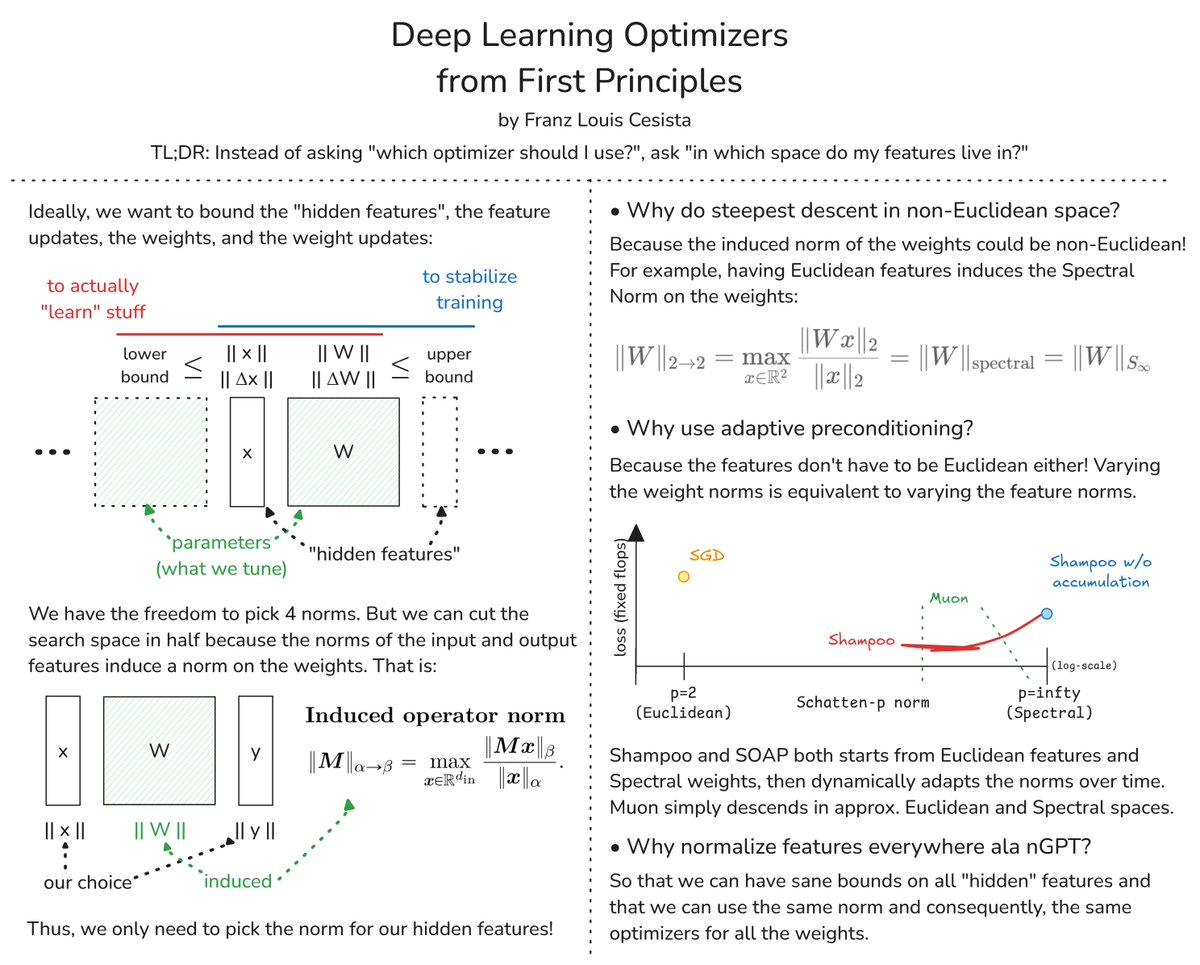

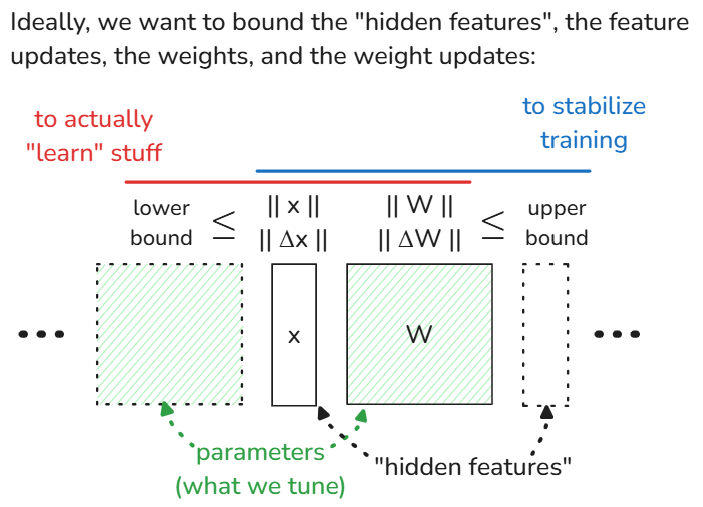

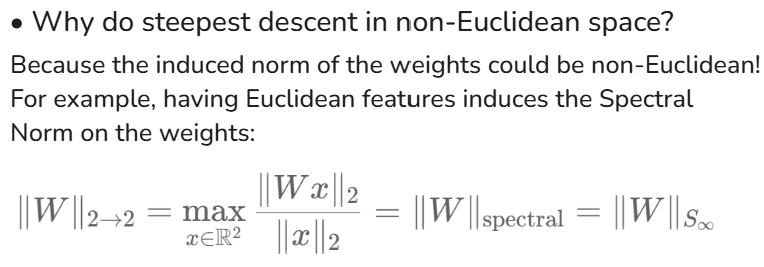

Deep Learning Optimizers from First Principles. My attempt at answering these questions: 1. Why do steepest descent in non-Euclidean spaces? 2. Why does adaptive preconditioning work so well in practice? And 3. Why normalize everything à la nGPT?

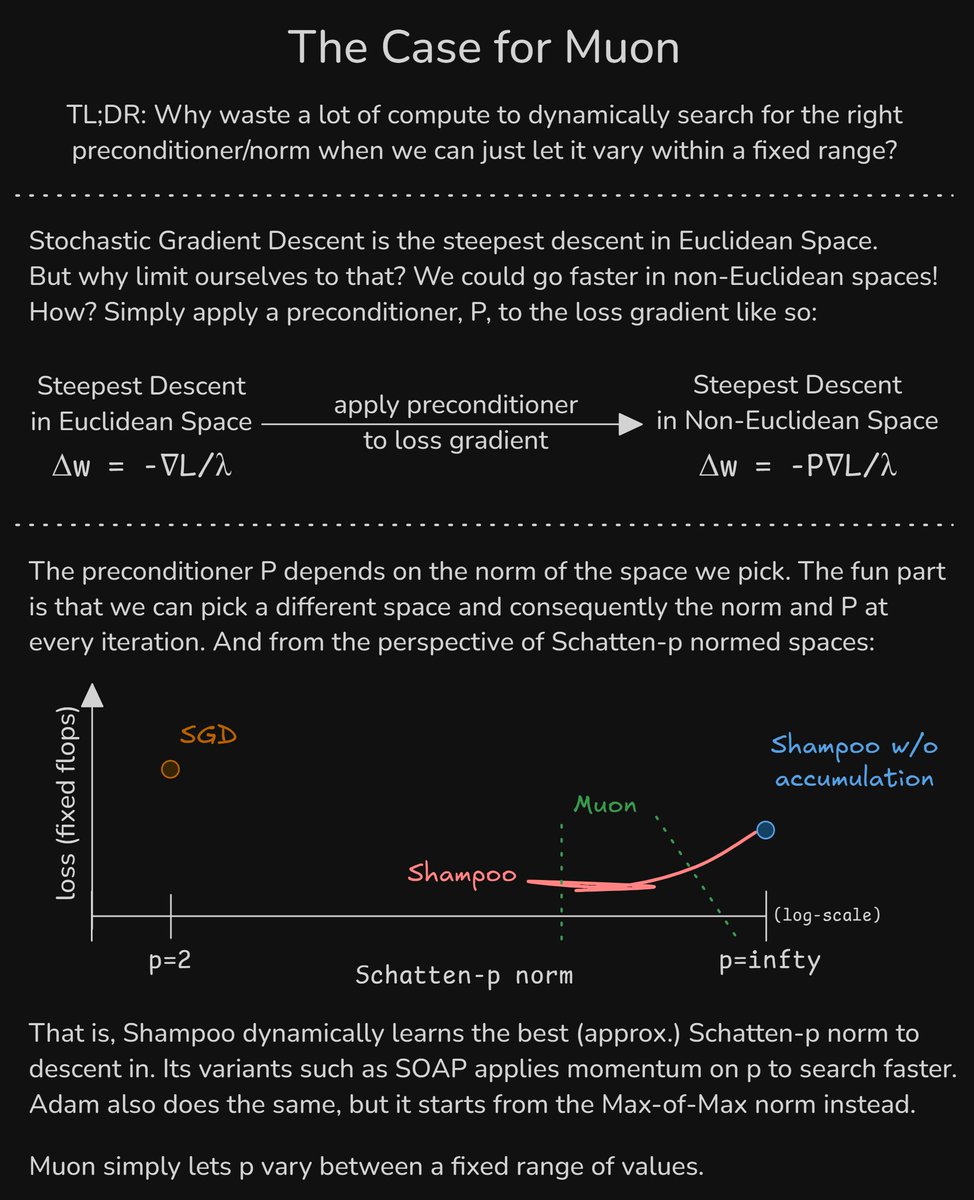

The Case for Muon: 1) We can descend 'faster' in non-Euclidean spaces. 2) Adam/Shampoo/SOAP/etc. dynamically learn the preconditioner and, equivalently, the norm & space to descend in. 3) Muon saves a lot of compute by simply letting the norm vary within a fixed range.

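One way to read "descend 'faster'" is to compare the best first-order decrease per unit step when the step is measured in different norms. Under the Frobenius norm the best direction is the normalized gradient. Under the spectral norm it is U V^T from the gradient's SVD, which is the direction Muon approximates. The spectral unit ball contains the Frobenius unit ball, so the spectral gain (the nuclear norm) is never smaller. This is a NumPy sketch on a random stand-in gradient, not code from the thread:

```python
import numpy as np


def steepest_direction_frobenius(G):
    # Maximizer of <G, D> subject to ||D||_F <= 1: the normalized gradient.
    return G / np.linalg.norm(G)


def steepest_direction_spectral(G):
    # Maximizer of <G, D> subject to ||D||_op <= 1: keep only the singular
    # vectors of G and drop its singular values, i.e. D = U V^T.
    U, _, Vt = np.linalg.svd(G, full_matrices=False)
    return U @ Vt


rng = np.random.default_rng(0)
G = rng.standard_normal((4, 3))  # hypothetical stand-in for a weight gradient

D_F = steepest_direction_frobenius(G)
D_S = steepest_direction_spectral(G)

gain_F = np.sum(G * D_F)  # equals ||G||_F
gain_S = np.sum(G * D_S)  # equals the nuclear norm ||G||_*, which is >= ||G||_F
print(gain_F, gain_S)
```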

Remember @karpathy's llm.c repro of the GPT-2 (124M) training run, which took 45 mins on 8xH100s? We're proud to share that we've just breached the 4-min mark! A few years ago, it would've cost you hundreds of thousands of dollars (maybe millions!) to achieve the same result.

New NanoGPT training speed record: 3.28 FineWeb val loss in 3.95 minutes. Previous record: 4.41 minutes. Changelog:
- @leloykun arch optimization: ~17s
- remove "dead" code: ~1.5s
- re-implement dataloader: ~2.5s
- re-implement Muon: ~1s
- manual block_mask creation: ~5s

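The per-change savings in this changelog roughly account for the gap between the two records. Here is a quick tally, which treats the savings as simply additive (an approximation):

```python
# Seconds saved per change, as listed in the changelog.
savings_s = {
    "arch optimization": 17.0,
    'remove "dead" code': 1.5,
    "re-implement dataloader": 2.5,
    "re-implement Muon": 1.0,
    "manual block_mask creation": 5.0,
}
previous_min, new_min = 4.41, 3.95

itemized = sum(savings_s.values())          # total of the listed savings
observed = (previous_min - new_min) * 60.0  # gap between the records, in seconds
print(f"itemized: {itemized:.1f}s, observed: {observed:.1f}s")
```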

Deep Learning Optimizers from First Principles. Now with more maths! In this thread, I'll discuss: 1. The difference between 1st-order gradient dualization approaches and 2nd-order optimization approaches. 2. Preconditioning: how to do it and why. 3. How to derive a couple of

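There is a known link between the 1st-order and 2nd-order families. A Shampoo-style preconditioner built from a single gradient (no running average over steps) gives back the spectral-norm dualized gradient U V^T. This NumPy sketch assumes a square, full-rank gradient so that the inverse fourth roots exist. Real Shampoo accumulates its statistics over steps and adds damping, which breaks the exact equality:

```python
import numpy as np


def inv_fourth_root(M):
    # M^{-1/4} for a symmetric positive-definite M via its eigendecomposition.
    w, Q = np.linalg.eigh(M)
    return (Q * w ** -0.25) @ Q.T


def shampoo_direction(G):
    # One-step Shampoo preconditioning: L^{-1/4} G R^{-1/4},
    # with L = G G^T and R = G^T G computed from this gradient only.
    return inv_fourth_root(G @ G.T) @ G @ inv_fourth_root(G.T @ G)


def dualized_direction(G):
    # Spectral-norm dualization of the gradient: U V^T from the SVD of G.
    U, _, Vt = np.linalg.svd(G)
    return U @ Vt


G = np.array([[3.0, 1.0, 0.0],
              [0.0, 2.0, 0.5],
              [1.0, 0.0, 1.0]])  # square, full rank, not symmetric
print(np.allclose(shampoo_direction(G), dualized_direction(G)))
```

Algebraically, with G = U S V^T, the two inverse roots become U S^{-1/2} U^T and V S^{-1/2} V^T. These cancel S completely and leave U V^T.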

Your DL certificates won't leave you, though.

Love life first before grades; relationship first before acads. Your DL certificate isn't going to hug you when you're crying. #TipsForPisayFreshies


got nerdsniped by @kellerjordan0's Muon and spent time analyzing it instead of preparing for my job interview in <1 hour 😅 tl;dr: 1) 3 Newton-Schulz iterations suffice for the non-square matrices. 2) I propose a new Newton-Schulz iterator that converges faster and is more stable

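For background, this is the textbook cubic Newton-Schulz iteration. It is neither the new iterator proposed in the tweet nor Muon's tuned quintic. It shows how such iterations push every singular value toward 1, and why small singular values drive the number of steps needed. This is a NumPy sketch with a hypothetical function name:

```python
import numpy as np


def newton_schulz_cubic(G, steps):
    # Cubic Newton-Schulz toward the orthogonal polar factor U V^T of G.
    # Each step maps every singular value s -> 1.5 s - 0.5 s^3, whose stable
    # fixed point is s = 1 (for 0 < s < sqrt(3)).
    X = G / np.linalg.norm(G)  # Frobenius scaling puts singular values in (0, 1]
    for _ in range(steps):
        X = 1.5 * X - 0.5 * X @ X.T @ X
    return X


G = np.diag([3.0, 2.0, 1.0])
for steps in (2, 4, 8):
    s = np.linalg.svd(newton_schulz_cubic(G, steps), compute_uv=False)
    print(steps, np.round(s, 4))
```

Near s = 1 the error roughly squares each step (1 - e maps to about 1 - 1.5 e^2). A tiny singular value, by contrast, only grows by about 1.5x per step, so badly conditioned gradients need more iterations.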

have good news to share this week 🤗 for now, a couple of updates: 1. We used (a JAX implementation of) Muon to find coefficients for a 4-step Muon that behaves roughly the same as the original 5-step Muon. It's faster, but causes a slight perf drop for reasons I described in

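For comparison with the 4-step variant, here is a float64 NumPy transcription of the 5-step quintic Newton-Schulz step. It assumes the coefficients (3.4445, -4.7750, 2.0315) from the public modded-nanogpt implementation. The original runs in PyTorch in bfloat16. The tuned 4-step coefficients from the tweet are not reproduced here:

```python
import numpy as np

# Quintic coefficients assumed from the public modded-nanogpt Muon code.
A_COEF, B_COEF, C_COEF = 3.4445, -4.7750, 2.0315


def zeropower_quintic(G, steps=5):
    # Approximately orthogonalize G. Each step maps every singular value
    # s -> a*s + b*s^3 + c*s^5. These coefficients trade exact convergence
    # for speed, so singular values end up in a band around 1, not at 1.
    X = G / (np.linalg.norm(G) + 1e-7)
    transposed = X.shape[0] > X.shape[1]
    if transposed:  # work in the wide orientation so X @ X.T is the small Gram
        X = X.T
    for _ in range(steps):
        A = X @ X.T
        B = B_COEF * A + C_COEF * A @ A
        X = A_COEF * X + B @ X
    return X.T if transposed else X


s = np.linalg.svd(zeropower_quintic(np.diag([3.0, 2.0, 1.0])), compute_uv=False)
print(np.round(s, 3))
```

Dropping from 5 to 4 steps removes one round of three matmuls per weight matrix. The trade-off is that singular values get one fewer chance to move into that band.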

Adaptive Muon: @HessianFree's analysis here shows that the current implementation of Muon, along with SOAP & possibly also Adam, can't even converge on a very simple loss function, loss(x) = ||I - x^T * x||^2. But this issue can be (mostly) fixed with a one-line diff that allows Muon

Alright, let's put Muon and SOAP head-to-head with PSGD to solve a simple loss = (1 - (x^T * x))^2, where x is initialized as a 2x2 random matrix. At high beta all optimizers fail, but otherwise PSGD is able to reduce the loss exactly to zero, whereas Muon can

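To make the test problem concrete, here is the loss ||I - X^T X||_F^2, its gradient -4 X (I - X^T X), and plain gradient descent as a baseline. This is a sketch only. It uses a fixed 2x2 start in place of a random one and a hypothetical step size, and it does not reproduce Muon, SOAP, PSGD, or the one-line Adaptive Muon fix:

```python
import numpy as np


def loss(X):
    # ||I - X^T X||_F^2, which is zero exactly when X is orthogonal.
    R = np.eye(X.shape[1]) - X.T @ X
    return float(np.sum(R * R))


def grad(X):
    # Gradient of the loss above: -4 X (I - X^T X).
    return -4.0 * X @ (np.eye(X.shape[1]) - X.T @ X)


X = np.array([[0.5, 0.2],
              [0.1, 0.3]])  # fixed, full-rank start (hypothetical)
lr = 0.05                   # hypothetical step size
for _ in range(500):
    X = X - lr * grad(X)
print(loss(X))
```

With X = U S V^T, this gradient shares X's singular vectors. Each GD step therefore updates the singular values independently, s -> s + 4 lr s (1 - s^2), so they converge to 1. An update of fixed spectral size, such as an orthogonalized step with a constant learning rate, cannot shrink as the loss approaches zero.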


Here's another reason why we would want to do steepest descent under the spectral norm: to have a larger spread of attention entropy, thus allowing the model to represent more certainty/uncertainty states during training! First, why do we have to bother with the

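The entropy of an attention row depends on the scale of its logits. Those logits are bounded by the spectral norms of the query/key weights times the input norms. This NumPy sketch shows that dependence for a single row with made-up logits. It is not the thread's argument, which is cut off above:

```python
import numpy as np


def softmax(z):
    z = z - np.max(z)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def attention_entropy(logits, scale):
    """Entropy (nats) of one attention row softmax(scale * logits)."""
    p = softmax(scale * logits)
    p = p[p > 0]  # treat 0 * log 0 as 0
    return float(-np.sum(p * np.log(p)))


logits = np.array([2.0, 1.0, 0.5, -1.0])  # hypothetical query-key scores, 4 keys
scales = np.linspace(0.0, 10.0, 21)
entropies = np.array([attention_entropy(logits, s) for s in scales])
print(np.round(entropies, 3))
```

At scale 0 the row is uniform (entropy log 4). Since dH/d(scale) = -scale * Var_p(logits) <= 0, entropy falls monotonically toward 0 as the scale grows. How much of this range the model can reach therefore depends on how large the weight norms are allowed to get.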

I'm gonna start posting negative results to normalize it (it wouldn't be science if we only posted positive results). Main takeaway here is that attention softcapping improves training stability at the cost of extra wall-clock time (this paper even

New NanoGPT training speed record: 3.28 FineWeb val loss in 4.66 minutes. Previous record: 5.03 minutes. Changelog:
- FlexAttention blocksize warmup
- hyperparameter tweaks

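"Attention softcapping" usually refers to squashing logits with a scaled tanh before the softmax, the form popularized by Gemma 2. The tweet doesn't spell out the variant, so the form below is an assumption, and the cap value is hypothetical:

```python
import numpy as np


def softcap(logits, cap):
    # tanh soft-capping: close to the identity for |x| much smaller than cap,
    # and smoothly saturating inside (-cap, cap) for large |x|.
    return cap * np.tanh(logits / cap)


x = np.array([-100.0, -5.0, 0.0, 0.5, 5.0, 100.0])
print(np.round(softcap(x, cap=30.0), 3))
```

Bounding the logits this way keeps attention from collapsing onto one key when the scores blow up, which is where the stability comes from. Each attention score needs an extra elementwise tanh.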

The UNet Value Embeddings arch we developed for the NanoGPT speedrun works, scales, and generalizes across evals. Try it out! It's free lunch!

We are now down to 160 GPU hours for speed running SOTA evals in the 100-200M param smol model class (~31x less compute!) 🚀. Thanks to @KoszarskyB, @leloykun, @Grad62304977, @YouJiacheng et al. for their work that helped to make this possible, and to @HotAisle for their great


Another positive reproduction of our results on the NanoGPT speedrun! This shows that the architecture we developed (i.e., UNet Value Residuals) generalizes across datasets & evals.


We've just opened a new track! Latest NanoGPT-medium speedrun record: 2.92 FineWeb val loss in 29.3 8xH100-minutes.


11/07/24 New NanoGPT speedrunning record. Changelog:
1. Cooldown on the momentum & velocity terms in the embedding's optimizer as training winds down (c @jxbz)
2. Made the value residual more flexible by splitting the lambda into two (with the sum not necessarily = 1)
3.

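Here is a minimal sketch of changelog item 2. It assumes the earlier form mixed this layer's values v with the first layer's values v1 using a single lambda and (1 - lambda). The function and variable names are hypothetical, and the lambdas are plain floats here rather than learned parameters:

```python
import numpy as np


def value_residual_tied(v, v1, lam):
    """Single-lambda mix: the weights (1 - lam) and lam always sum to 1."""
    return (1.0 - lam) * v + lam * v1


def value_residual_split(v, v1, lam_v, lam_v1):
    """Two independent scalars, so lam_v + lam_v1 may differ from 1."""
    return lam_v * v + lam_v1 * v1


rng = np.random.default_rng(0)
v = rng.standard_normal((4, 8))   # this layer's values (tokens x head_dim)
v1 = rng.standard_normal((4, 8))  # values carried over from the first layer

# The split form contains the tied form as a special case...
same = np.allclose(value_residual_split(v, v1, 0.7, 0.3),
                   value_residual_tied(v, v1, 0.3))
# ...but can also scale both terms freely.
scaled = value_residual_split(v, v1, 1.2, 0.5)
print(same)
```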

Thanks for this @karpathy! I also decided to port this to C++ cuz why not? haha

My fun weekend hack: llama2.c 🦙🤠 Lets you train a baby Llama 2 model in PyTorch, then inference it with one 500-line file with no dependencies, in pure C. My pretrained model (on TinyStories) samples stories in fp32 at 18 tok/s on my MacBook Air M1 CPU.


My contributions to the latest record were actually just simple modifications to @Grad62304977's & @KoszarskyB's prev work on propagating attention Value activations/embeddings to later layers: 1. Added UNet-like connectivity structure on the value embeddings. This allowed us.

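The tweet doesn't spell out the exact wiring. What follows is a hypothetical illustration of what "UNet-like connectivity on the value embeddings" could mean: mirrored layers share one value embedding, the way UNet skip connections pair encoder and decoder levels. The layer count, embedding count, and function name are all made up:

```python
def unet_value_embedding_schedule(num_layers, num_embeddings):
    # Hypothetical UNet-style pairing: layer i and its mirror layer
    # (num_layers - 1 - i) share value embedding i for the outermost
    # `num_embeddings` layers on each end; middle layers get None.
    assert 2 * num_embeddings <= num_layers
    schedule = [None] * num_layers
    for i in range(num_embeddings):
        schedule[i] = i
        schedule[num_layers - 1 - i] = i
    return schedule


schedule = unet_value_embedding_schedule(12, 3)
print(schedule)  # [0, 1, 2, None, None, None, None, None, None, 2, 1, 0]
```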

Muon currently has known issues we're still looking into, e.g.: 1. It requires a lot of matmuls. Sharding doesn't help either and even results in worse loss in most cases. 2. The number of Newton-Schulz iterations needs to scale with grad matrix size (and possibly arch.

Imagine if conservatively going with AdamW instead of hot new stuff like Muons is the only reason this 700B/37A SoTA monster wasn't trained for the cost of **a single LLaMA3-8B**.

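To put "a lot of matmuls" in numbers, here is a FLOP count for the quintic Newton-Schulz step. It assumes three matmuls per iteration (X X^T, A A, and B X) in the wide orientation, and the usual 2pqr FLOPs per (p x q)(q x r) product. It ignores the cheap elementwise work, and the function name is hypothetical:

```python
def ns_quintic_flops(m, n, steps=5):
    # FLOPs for `steps` quintic Newton-Schulz iterations on an m x n matrix,
    # counted in the wide orientation (rows <= cols).
    m, n = min(m, n), max(m, n)
    per_step = (
        2 * m * n * m    # A = X @ X.T   : (m x n) @ (n x m)
        + 2 * m * m * m  # A @ A         : (m x m) @ (m x m)
        + 2 * m * m * n  # B @ X         : (m x m) @ (m x n)
    )
    return steps * per_step


# Example: a 768 x 3072 matrix (GPT-2-small MLP shape).
print(f"{ns_quintic_flops(768, 3072):.3e} FLOPs per orthogonalization")
```

The cost grows cubically in the smaller dimension and linearly in the step count. If the number of iterations also has to grow with matrix size, the two effects compound.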

This is the overhead I'm talking abt btw: running the exact same code as in the previous record produces roughly the same loss curve, but gets +5ms/step of overhead, while running the new code produces a better loss curve with the same overhead. My best guess is I'm messing up my


I also really recommend this work by @TheGregYang and @jxbz: This one too: super information-dense works!


@rdiazrincon I totally forgot how sets are implemented internally 😅 and this broke our metrics 💀💀


ACET this year be like: Can you pay for the tuition? Yes? You're in. Congrats :)

There is a lot going on and we don’t want to create more unneeded drama and stress. There will be no #ACET for SY 2021-22. Times are evolving but our commitment to academic excellence and formation has not changed. Get #readyfortomorrow, the Ateneo way.


This'll get people killed.

How #FacialRecognition can Reveal Your #Political Orientation #fintech #AI #ArtificialIntelligence #MachineLearning #DeepLearning #election @analyticsinme


Also big thanks to @jxbz for his super insightful paper on steepest descent under different norms. @jxbz, @kellerjordan0 please let me know if I got my maths/interpretation wrong & also hoping my work helps!


It looks like 1 Gram iteration would suffice. I.e., estimating the spectral norm w/ `||G^T@G||_F^{1/2}`. cc @kellerjordan0

Along this direction (tighter upper bound of the spectral norm) I think we might have a free lunch leveraging the "Gram Iteration". Because we will calculate the G^2 and G^4 in the first N-S iteration, we can get a better estimation almost free. @leloykun

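Here is a sketch of the bound discussed in this exchange. ||G^T G||_F^{1/2} is never larger than ||G||_F, the usual pre-Newton-Schulz normalizer, and never smaller than the spectral norm. Dividing by it is therefore a tighter normalization that still keeps every singular value at most 1. Each further Gram step tightens the bound again. This is NumPy with a hypothetical function name and no rescaling against overflow:

```python
import numpy as np


def spectral_norm_upper_bounds(G, iters=3):
    # Gram iteration: G_0 = G, G_{k+1} = G_k^T G_k. Because
    # sigma_max(G_k) = sigma_max(G)^(2^k) <= ||G_k||_F, each value
    # ||G_k||_F^(1/2^k) upper-bounds ||G||_2, and the bounds shrink with k.
    bounds = []
    Gk = G
    for k in range(iters + 1):
        bounds.append(float(np.linalg.norm(Gk) ** (1.0 / 2 ** k)))
        Gk = Gk.T @ Gk
    return bounds


rng = np.random.default_rng(0)
G = rng.standard_normal((8, 5))
print("spectral norm:", np.linalg.norm(G, 2))
for k, b in enumerate(spectral_norm_upper_bounds(G)):
    print(f"Gram iterations = {k}: bound = {b:.4f}")
```

In terms of singular values, the k-th bound is the l_{2^(k+1)} norm of the singular-value vector. That is why it decreases toward the largest singular value as k grows. The k = 1 entry is the `||G^T@G||_F^{1/2}` estimate from the tweet.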