Julian Büchel

@jubueche

Followers: 99

Following: 224

Statuses: 174

PhD in AI & Cloud Systems Research @ IBM Research Zurich and ETH Zürich

Joined August 2016

RT @NatComputSci: @jubueche @abuseb @IBMResearch An accompanying News & Views by @anandsubramoney is also available now!

RT @NatComputSci: 📢@jubueche, @abuseb and colleagues from @IBMResearch introduce a viable pathway for the efficient deployment of state-of-…

Performing low-latency inference with billion-parameter LLMs on a device the size of a thumb drive, with a power consumption below 10 W, is a dream of ours. In a joint project between @IBMResearch and @MicronTech, we lay out a way to achieve it: our latest paper "Efficient scaling of large language models with mixture of experts and 3D analog in-memory computing", published in Nature Computational Science, proposes to marry 3D Analog In-Memory Computing (3D AIMC) using high-density 3D non-volatile memory (NVM) with the conditional-compute paradigm of Mixture of Experts (MoEs).

3D AIMC is a promising approach to energy-efficient inference of LLMs. At a high level, 3D AIMC can be thought of as stacking many 2D AIMC crossbars on top of each other. Each crossbar can perform Matrix-Vector Multiplications (MVMs) using the weights programmed into the NVM devices of the respective layer (or tier). Due to hardware constraints, MVMs cannot be performed in parallel across all tiers; we call this the One-Tier-at-a-Time (OTT) constraint. It can become a bottleneck for very large layers (tens of thousands of rows/columns).

Enter MoEs: MoEs are interesting because, during the forward pass, only a subset of the model's parameters is used to process each token. This makes MoEs much more scalable in the number of parameters. Under the hood, MoEs replace every MLP layer in the transformer with many smaller MLP layers, the so-called experts. During the forward pass, each token is then processed by only a small subset of these experts. 𝗪𝗲 𝗳𝗼𝘂𝗻𝗱 𝘁𝗵𝗮𝘁 𝘁𝗵𝗶𝘀 𝗰𝗼𝗻𝗰𝗲𝗽𝘁 𝗼𝗳 𝗰𝗼𝗻𝗱𝗶𝘁𝗶𝗼𝗻𝗮𝗹 𝗰𝗼𝗺𝗽𝘂𝘁𝗶𝗻𝗴 𝗻𝗮𝘁𝘂𝗿𝗮𝗹𝗹𝘆 𝗮𝗹𝗹𝗲𝘃𝗶𝗮𝘁𝗲𝘀 𝘁𝗵𝗲 𝗢𝗧𝗧 𝗰𝗼𝗻𝘀𝘁𝗿𝗮𝗶𝗻𝘁 𝗳𝗼𝘂𝗻𝗱 𝗶𝗻 𝟯𝗗 𝗔𝗜𝗠𝗖 𝘄𝗵𝗲𝗻 𝘁𝗵𝗲 𝗲𝘅𝗽𝗲𝗿𝘁𝘀 𝗮𝗿𝗲 𝘀𝘁𝗮𝗰𝗸𝗲𝗱 𝗼𝗻 𝘁𝗼𝗽 𝗼𝗳 𝗲𝗮𝗰𝗵 𝗼𝘁𝗵𝗲𝗿.

Our main contributions include:
◆ We demonstrate that, compared to standard models, MoEs scale very well on 3D AIMC as the number of parameters increases.
◆ Compared to GPUs, we achieve more than two orders of magnitude higher energy efficiency.
◆ We show that MoEs form the Pareto front of model accuracy versus system performance when compared to standard models. 𝗧𝗵𝗶𝘀 𝗺𝗲𝗮𝗻𝘀 𝘁𝗵𝗮𝘁 𝗶𝘁 𝗶𝘀 𝗮𝗹𝘄𝗮𝘆𝘀 𝗯𝗲𝘁𝘁𝗲𝗿 𝘁𝗼 𝘂𝘀𝗲 𝗮𝗻 𝗠𝗼𝗘 𝗼𝗻 𝟯𝗗 𝗔𝗜𝗠𝗖 𝗵𝗮𝗿𝗱𝘄𝗮𝗿𝗲!
◆ We open-sourced a high-level performance simulator, which allowed us to simulate pipelined inference of transformers on an abstract 3D AIMC architecture.
◆ We open-sourced Analog-MoE, a library for training MoEs in a hardware-aware manner.

📝 Paper:
🔗 Analog-MoE:
🔗 Simulator:
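To make the OTT argument concrete, here is a toy sketch in Python of top-k expert routing plus a simplified tier count. It assumes one expert per tier and, for comparison, a dense MLP of similar total size whose weights are split across all 8 tiers. `MoELayer`, `ott_steps`, and all sizes are hypothetical illustrations, not the paper's simulator or the Analog-MoE API; real routers, tier mappings, and pipelining are more involved.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax over the router logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(weights, x):
    # One matrix-vector multiplication (MVM), the operation a single
    # crossbar tier performs in AIMC.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class MoELayer:
    """Hypothetical top-k MoE layer; expert i is assumed to sit on tier i."""

    def __init__(self, d_model, d_hidden, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        self.router = rand_matrix(num_experts, d_model, rng)
        # Each expert is a small two-layer MLP (up- and down-projection).
        self.experts = [
            (rand_matrix(d_hidden, d_model, rng), rand_matrix(d_model, d_hidden, rng))
            for _ in range(num_experts)
        ]

    def forward(self, x):
        probs = softmax(matvec(self.router, x))
        # Pick the top_k experts with the highest router probability.
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        chosen = ranked[: self.top_k]
        norm = sum(probs[i] for i in chosen)  # renormalize gates over chosen experts
        out = [0.0] * len(x)
        for i in chosen:
            w_in, w_out = self.experts[i]
            hidden = [max(0.0, a) for a in matvec(w_in, x)]  # ReLU
            gate = probs[i] / norm
            out = [o + gate * y for o, y in zip(out, matvec(w_out, hidden))]
        return out, chosen


def ott_steps(tiers_needed):
    # Under OTT, the distinct tiers a token needs are activated one after
    # another, so the sequential step count is the number of distinct tiers.
    return len(set(tiers_needed))


if __name__ == "__main__":
    layer = MoELayer(d_model=8, d_hidden=16, num_experts=8, top_k=2)
    x = [0.1 * i for i in range(8)]
    y, chosen = layer.forward(x)
    print("experts used:", chosen)
    print("MoE OTT steps:", ott_steps(chosen))  # 2
    print("dense OTT steps:", ott_steps(range(8)))  # 8 (dense MLP over 8 tiers)
```

In this toy count, a token touches only its `top_k` expert tiers, whereas the dense layer needs every tier in turn; that is the intuition behind stacking the experts on top of each other.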

RT @ni_jovanovic: SynthID-Text by @GoogleDeepMind is the first large-scale LLM watermark deployment, but its behavior in adversarial scenar…

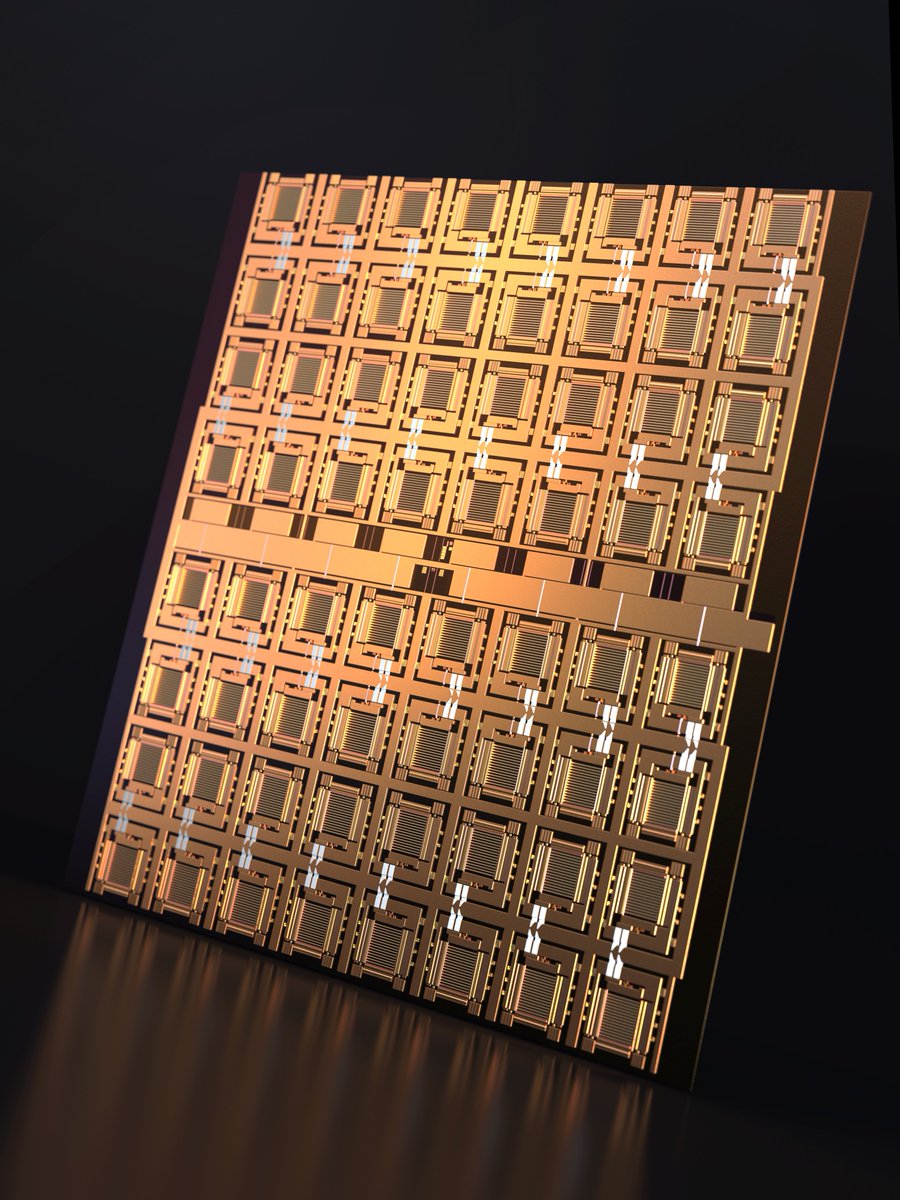

Curious about recent progress in using analog computation to accelerate AI inference? I will be at #NeurIPS to demo our latest chip: the IBM Hermes Project Chip, a 64-core Analog In-Memory Computing chip based on Phase Change Memory.

🗓️ Dates (all times are in Pacific Time):
- Dec. 10, IBM Booth, 12:00-13:00, Exhibition Level - Hall A, Booth No. 243
- Dec. 10, Expo, 15:00-17:00, West Exhibition Hall A
- Dec. 11, IBM Booth, 14:00-15:00
- Dec. 12, IBM Booth, 15:00-16:00

🔗 Link to the paper:

That's not all! I will also be at the ML with New Compute Paradigms (MLNCP) workshop on Dec. 15, presenting our paper "AIHWKIT-Lightning: A Scalable HW-Aware Training Toolkit for Analog In-Memory Computing". Come by to exchange thoughts about algorithms for Analog In-Memory Computing and other topics!

🔗 Link to the paper:

@abuseb @IBMResearch

🍿🍿🍿

We're in the SEMI-FINAAAALS 🤯🤯🤯 📷 Marco Wolf #GERFRA #WIRIHRALLE #aufgehtsDHB #Handball #TeamD #Olympics

RT @Bundeskanzler: Right-wing extremists are attacking our democracy. We are all called upon to take a clear stand: for our democr…

@jreichelt Even if Olaf Scholz had carried out the research personally, what would there be left to doubt?

@Bundeskanzler Thank you very much, Mr. Chancellor. Germany can consider itself lucky to have such a competent leader as you, despite a tense year. Happy New Year!