Jeffrey Morgan

@jmorgan

Followers

4,269

Following

129

Media

13

Statuses

1,537

@ollama – prev @docker , @twitter , @google

Toronto & SF

Joined September 2010

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Adalet

• 242820 Tweets

Chester

• 112909 Tweets

ベイマックス

• 109370 Tweets

Merchan

• 102490 Tweets

Jean Carroll

• 81133 Tweets

Milli

• 76841 Tweets

#2KDay

• 76197 Tweets

Eagles

• 68396 Tweets

Ayşenur Ezgi Eygi

• 40881 Tweets

Franco Escamilla

• 40443 Tweets

#CristinaMostraElTitulo

• 36548 Tweets

Packers

• 31129 Tweets

Barcola

• 31055 Tweets

Dick Cheney

• 22786 Tweets

Mert

• 16855 Tweets

Bafana Bafana

• 15974 Tweets

Ganesh Chaturthi

• 15542 Tweets

Galler - Türkiye

• 14877 Tweets

Kenan

• 13602 Tweets

#FRAITA

• 12925 Tweets

Chaine

• 12597 Tweets

Go Birds

• 12344 Tweets

Sergio Mendes

• 11027 Tweets

#BizimÇocuklar

• 10994 Tweets

Olise

• 10126 Tweets

Last Seen Profiles

Ollama 0.2 can now:

* Run different models side-by-side

* Process multiple requests in parallel

This enables a whole new set of RAG, agent and model serving use cases. Ollama will automatically load and unload models dynamically based on how much memory is in the system.

10

43

287

Dolphin 2.9 Llama 3 is a new version of the popular Dolphin model by

@erhartford

, fine tuned from Llama 3 8B:

ollama run dolphin-llama3

8

27

203

Notux is a new 8x7B mixture of experts (MoE) model by

@argilla_io

, created by fine-tuning Mixtral on a high-quality dataset.

It's currently the top-performing mixture of experts model on the Hugging Face Open LLM Leaderboard!

5

39

172

Starling is a new 7 billion parameter large language model by

@BanghuaZ

& team.

This model outperforms every model to-date except GPT-4 and GPT-4 Turbo on MT-Bench, a benchmark to assess chatbot helpfulness.

11

19

174

Dolphin 2.6 Phi-2 is a new uncensored chat model by

@erhartford

Since this version of Dolphin is based on the small 2.7B Phi model by Microsoft Research, it's fast and can run on a wide range of machines using

@ollama

.

9

24

138

codebooga is a new 34 billion parameter code model created by the infamous Oobabooga.

It's a merge of two other popular coding models and has been praised by model creator

@erhartford

for being the best code instruct model next to GPT-4.

5

23

133

Dolphin 2.1 Mistral is

@erhartford

's most recent 7b, instruct-tuned model based on the popular Mistral foundational model.

It's currently one of the highest performing models on the open-source LLM leaderboards

2

20

122

Dolphin 2.5 Mixtral 8x7b is a new uncensored model by

@erhartford

based on Mixtral, the new mixture of experts model by

@MistralAI

.

It was trained on a wide variety of datasets and is especially strong at coding tasks.

3

16

113

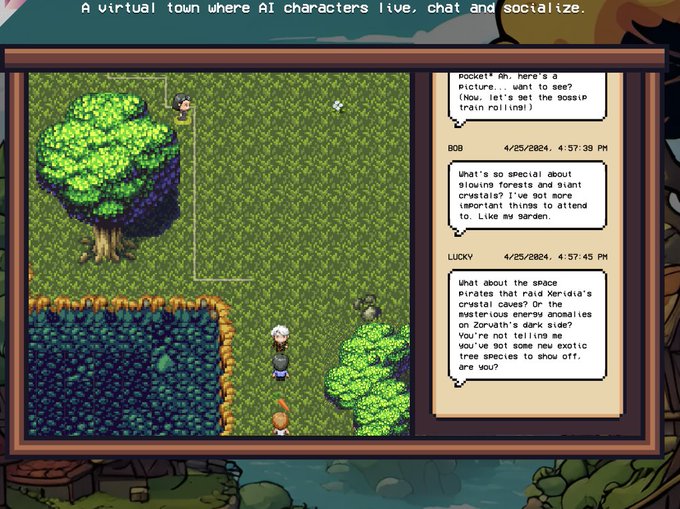

Fully-local AI Town!

AI Town is a virtual town where characters live and interact. It has wonderful visuals, is highly customizable, and can now run entirely on your local machine!

Run an AI Town locally, powered by llama3 🎉

No cloud signups needed. Make your own world, and then talk to it :)

Runs the open-source

@convex_dev

backend locally.

Use

@ollama

locally or

@togethercompute

for cloud LLM.

@realaitown

10

122

584

1

17

103

LLaVA 1.6 from

@imhaotian

has been released with improved resolution support, visual reasoning, and OCR capabilities, all while maintaining minimalist design and data efficiency.

3

7

103

Mixtral can now be run with

@ollama

Ollama countdown: Day 11

Mixture of experts models are now supported in v0.1.16!

Mixtral 8x7B:

ollama run mixtral

Dolphin Mixtral

ollama run dolphin-mixtral

Dolphin Mixtral is an uncensored, fine-tuned model created by

@erhartford

!

(48GB+ memory required)

24

53

493

4

8

101

The Ollama Python & JavaScript libraries are now available:

Python:

JavaScript:

0

24

99

Running Mixtral 8x22B Instruct on a M3 MacBook Pro

.

@MistralAI

's Mixtral 8x22B Instruct is now available on Ollama!

ollama run mixtral:8x22b

We've updated the tags to reflect the instruct model by default. If you have pulled the base model, please update it by performing an `ollama pull` command.

23

49

409

8

6

92

@erhartford

@CrusoeCloud

@LucasAtkins7

@FernandoNetoAi

Amazing! Congrats on the release. Added to Ollama:

ollama run dolphin-llama3

1

4

77

TinyDolphin is a fun, new 1.1B parameter model trained by

@erhartford

and based on

@PY_Z001

's fantastic TinyLlama project.

3

13

75

Dolphin Mistral 2.8 is a new version of Dolphin Mistral by the amazing

@erhartford

, fine tuned from the recent Mistral 0.2 model with support for a context window of up to 32k tokens.

0

14

73

Nous Hermes 2 is the latest model by

@Teknium1

and

@NousResearch

Based on the Yi 34B model, it's the highest performing in their "Hermes" series and excels in scientific discussion and coding tasks.

1

9

68

Solar is a new 10.7B model by

@upstageai

that performs exceedingly well for single-turn instruct use cases.

5

4

57

Llama 3 feels much less censored than Llama 2.

Llama 3 has a much lower false refusal rate compared to Llama 2 (less than 1/3). It's willing to discuss topics its predecessor wouldn't!

After seeing a post here by

@erhartford

, we tested a few prompts:

3

2

42

Build an open-source RAG app using LlamaIndex + Mixtral + Ollama 🚀

Running

@MistralAI

's Mixtral 8x7b on your laptop is now a one-liner! Check out this post in which we show you how to use

@OLLAMA

with LlamaIndex to create a completely local, open-source retrieval-augmented generation app complete with an API:

Bonus: see

9

138

785

1

3

42

@steipete

I'm sorry this happened. It might have hit the context window limit in which case it will try to free up some context window – this works for most models but definitely not well for Llama 3. I've seen it too and am working on fixing it so it doesn't happen.

In the meantime you

3

2

42

Ollama can now be used to generate embeddings using LangChain.

What's great is the same in-memory model will be shared for generating both embeddings and completions.

This means for larger models: faster performance!

🦙 Ollama Local Embeddings 🦙

Search with local Llama-2 embeddings in

@LangChainAI

JS/TS 🦜🔗 0.0.146. GPU-boosted in minutes with

@jmorgan

's !

Incredible what you can do on a Macbook these days.

Thank you GH user Basti-an!

2

6

44

2

9

35

Langchain + Ollama!

Build apps that run entirely locally with Llama 2

Great for a ton of use cases like answering questions from local, private documents (see the example

@Hacubu

shared below) or building apps without incurring costs from cloud hosted LLMs.

🚨🏠Set up all-local, JS-based retrieval + QA over docs in 5 minutes in

@LangChainAI

JS/TS 0.0.124!

@TensorFlow

JS embeddings, HNSWLib vector store, and the last missing piece: GPU-optimized 🦙Llama 2 w/

@jmorgan

's

Use case + 🧵:

2

16

69

0

4

31

Stable Code 3B is a new 3B model with code completion results on part with Code Llama 7B.

It supports Fill in Middle Capability (FIM) making it a great model to use for code completion tools.

ollama run stable-code

Try Stability AI's Stable Code 3B model. Learn more:

Thank you

@StabilityAI

@ncooper57

@EMostaque

and team for creating the model!

3

35

172

2

1

30

Dolphin 2.2 Mistral: a new uncensored model by

@erhartford

that is enhanced with additional data from the Airoboros and Samantha projects.

Without assuming an identity of its own, this model is more empathetic and better handles long conversations.

1

10

28

An alternative to GitHub Copilot focused on privacy that works with Llama 2, Code Llama, and Mistral.

This isn't Copilot 😱!

This is

@codegptAI

with your own llama, codellama or mistral model running locally with absolute privacy for your code

Available from version 2.1.28 onwards 🤩

Powered by

@LangChainAI

and

@Ollama_ai

Download the extension here:

14

91

460

0

5

29

A new cookbook for running SQL queries with open-source LLMs, all running locally!

2

6

24

DeepSeek LLM is a new language model by

@deepseek_ai

available in 7B and 67B parameter counts.

This model has strong results in coding & math, and is trained on over 2 trillion bilingual tokens making it effective in both English and Chinese.

2

6

22

Scale up LLMs with

@ollama

+

@skypilot_org

:

💫 Scale quantized LLMs on your cloud/k8s with Ollama and SkyPilot!

📖 Use

@ollama

to run Mistral, Llama2-7B with just 4 CPUs and scale it up with SkyServe. Add GPUs w/ 1 line to make it faster!

💻 No cloud access, no problem - runs on your laptop too!

1

6

46

2

1

18

@rastadidi

@erhartford

@Magicoder_AI

@zraytam

Is updated to 2.6! I'll be combining the other two into this link as well, so all versions are in one place here:

😃

1

3

16

Run open-source LLMs on

@flydotio

with

@Ollama_ai

1. Download Ollama:

2. Run a model remotely on Fly:

OLLAMA_HOST= ollama run mistral

This is a demo instance, but sign up for the Fly GPU waitlist for your own!

1

4

17

Uncensored models open a new world of possibilities.

@erhartford

trained the one in the article, and has an amazing guide that covers them:

2

4

16

sqlcoder is now available in a 7B parameter count

version, which will run on smaller devices (e.g. Macbooks with 8GB of memory)

ollama run sqlcoder:7b

0

1

16

@rohanpaul_ai

Looking into this case and will fix it.

The OP didn’t share hardware or model examples but let me know if you see a speed discrepancy.

2

0

14

OpenHermes 2 Mistral 7B by

@Teknium1

A new fine-tuned model based on Mistral, trained on open datasets totalling over 900,000 instructions.

This model has strong multi-turn chat skills, surpassing previous Hermes 13B models and even matching 70B models on some benchmarks.

1

2

13

Use Ollama as a chat model with LangChain! Amazing to see this come together

@RLanceMartin

@Hacubu

🦙 Ollama Local Chat Models 🦙

New in

@LangChainAI

: call local OSS models as chat models with

@jmorgan

’s !

Llama 2 13B gets 50 tok/s on an M2 Mac with < 5 minutes of setup.

S/o

@RLanceMartin

!

🐍:

☕:

0

5

41

0

2

13

Build a local, open-source version of ChatGPT with Mistral, Llama 2 and other open-source models.

Want to build your own local, open-source ChatGPT?

It's easy with

@Ollama_ai

!

Plus, you can run MANY models in parallel without spending a ton of $$ on GPUs.

Here's how:

4

4

41

1

2

12

LLM-powered Tamagotchi that all runs locally 🐣

[NEW LAUNCH] 0/ ✨AI-tamago🐣: A local-ready LLM-generated and LLM-driven tamagotchi with thoughts and feelings. 100% Javascript and costs $0 to run. 🧵

Stack:

- 🎮 Game state & reliable AI calls:

@inngest

- 🦙 Inference:

@Ollama_ai

(local),

@OpenAI

,

21

80

452

2

0

11

@tringuyenkv

Soon!

@steveeichert

@Ollama_ai

We've been working on getting multi-modal support working. Here's a quick demo:

5

0

25

1

0

11

@__thetaphipsi

@steipete

Should be fixed now! Need to re-pull the model:

ollama pull llama3:70b

(or the model you used – e.g. ollama pull llama3)

Note: the download should be instantaneous since it's a small change to the runtime parameters. Sorry again this happened!!

0

0

10

@rishdotblog

@dharmesh

@defogdata

@mchiang0610

The 7b version of sqlcoder is available on Ollama:

ollama run sqlcoder:7b

GPU accelerated on Linux & Mac (Windows coming soon :-) There's an API as well for building apps (incl Mac apps!)

0

3

10

@colhountech

@ollama

Sorry about this. Working on a fix. It seems to be from hitting the context length of the model. It can be increased by running `/set parameter num_ctx 8192` in with ollama run command.

If you're using the API, it's the `num_ctx` option.

1

0

9

Chat with local LLMs using a friendly user interface on macOS

@itsKGhandour

's Ollama SwiftUI is a native interface on macOS for downloading and chatting with LLMs, written in Swift and open-source

After getting exposed to

@Ollama_ai

, I decided to learn Swift over the past week and create a native user-friendly interface to be able to quickly chat with LLMs. It is now public, open source and waiting for your feedback!

Check it out now!

0

2

6

0

1

9

Connect dozens of data connectors – Obsidian, Databases, APIs and more – to Ollama with the new LlamaIndex integration ⭐️.

We’re now integrated with (

@jmorgan

) 🦙🎉

Our favorite part is the simplicity; do `ollama pull` and `ollama run` to run Llama 2, Code Llama, or other open LLMs.

Easily plug it into

@llama_index

RAG pipeline. Thanks

@husjerry1

! 🙌

0

8

42

0

0

7

Great seeing you 😊

Met up with

@jmorgan

at SFO to chat about

@Ollama_ai

, building Cody on it, speeding up local inference, etc.

2

0

41

0

0

7

LiteLLM: a lightweight python package for interacting with a variety of LLMs like OpenAI and Anthropic – taking care of input & output translation.

Now you can use LiteLLM with Ollama models too:

1

2

7

Great talk and thread on accessing LLMs from the browser by

@Hacubu

Local LLMs are incredible, but their current reach is just engineers. How do we change that?

I gave a

@GoogleAI

WebML Summit talk on how e.g.

@LangChainAI

&

@ollama_ai

enable client-side AI in web apps.

The punchline: we need a new browser API!

(1/9)

5

17

97

0

1

7

@visheratin

@erhartford

Yes! It works today! Check out

Congrats on getting this model to such an impressive size. How similar is this to the previous Llava 7b/13b models?

1

0

7

@erhartford

@bartowski1182

Sorry you hit this, it will be fixed in the next release of Ollama.

The pretokenizer change wasn’t backward compatible and new converts may not run on older versions of llama.cpp

1

0

5