Joe Alderman

@jaldmn

Followers: 801 · Following: 2K · Media: 422 · Statuses: 4K

Medical AI researcher. Anaesthesia & critical care doctor. Triathlete (kinda) @unibirmingham @UHBTrust @diversedata_ST

Birmingham, England

Joined March 2009

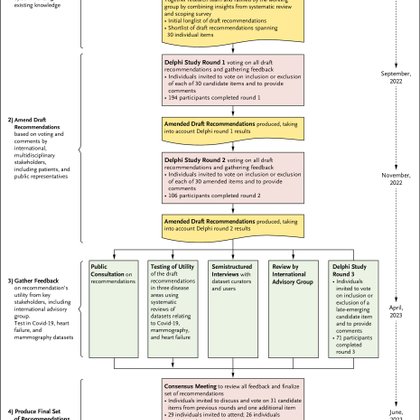

At a loose end this afternoon? Join @DrXiaoLiu and me online at 1pm for a discussion about algorithmic bias and the STANDING Together recommendations. STANDING Together gives guidance on how to minimise the risk of bias in medical AI technologies.

aiforgood.itu.int

Health data is highly complex and can be challenging to interpret without knowing the context in which it was created. Data biases can be encoded into

RT @carlosepinzon: Addressing #IA (AI) bias and the lack of transparency in health datasets. Consensus recommendations…

RT @DrXiaoLiu: One of my biggest joys is seeing an entire community take a stand for something important. STANDING Together, recommendation…

thelancet.com

Without careful dissection of the ways in which biases can be encoded into artificial intelligence (AI) health technologies, there is a risk of perpetuating existing health inequalities at scale. One...

RT @jaldmn: We hope STANDING Together helps everyone across the AI development lifecycle to make thoughtful choices about the way they use…

thelancet.com

Without careful dissection of the ways in which biases can be encoded into artificial intelligence (AI) health technologies, there is a risk of perpetuating existing health inequalities at scale. One...

Also published in @NEJM_AI and available here:

ai.nejm.org

Without careful dissection of the ways in which biases can be encoded into artificial intelligence (AI) health technologies, there is a risk of perpetuating existing health inequalities at scale. O...

We hope STANDING Together helps everyone across the AI development lifecycle to make thoughtful choices about the way they use data, reducing the risk that biases in datasets feed through to biases in algorithms and downstream patient harm. (10/.

@unisouthampton @WHO @pioneer_hub @BSI_UK @MoorfieldsBRC. Special thanks to our funders & supporters: the NHS AI Lab, The Health Foundation and the NIHR @NHSEngland @HealthFdn @NIHRresearch. (end)

(@'ing a few people, but will inevitably miss some, sorry in advance) @MMccradden @JohanOrdish @MarzyehGhassemi @stephenpfohl @negar_rz @hcolelewis @GlockerBen @drmelcalvert @tompollard @StephanieKuku @rnhmatin @Bilal_A_Mateen @kat_heller @alan_karthi @DrDarrenTreanor. (12/.

We hope STANDING Together helps everyone across the AI development lifecycle to make thoughtful choices about the way they use data, reducing the risk that biases in datasets feed through to biases in algorithms and downstream patient harm. (10/.

thelancet.com

Without careful dissection of the ways in which biases can be encoded into artificial intelligence (AI) health technologies, there is a risk of perpetuating existing health inequalities at scale. One...