Igor Babuschkin

@ibab

Followers: 67K · Following: 4K · Media: 44 · Statuses: 739

Maybe the real AGI was the friends we made along the way. Customer support @xAI

Palo Alto, CA

Joined February 2020

Example of why @xAI is a truly special place to work: recently, Elon took the time to hear 5-minute presentations from everyone at the company about what they are working on, with everyone going into as much technical detail as possible. He gave feedback to every single team member.

We’ve shipped these highly requested features into @grok:

- Long context
- Image understanding
- Web search results
- Inline citations to sources
- Grok Analysis (summarize conversations and understand posts)
- PDF upload

What else should we add to make Grok more useful?

Join us if you want to Make America Grok Again.

We are hiring forward deployed AI engineers to work with the coolest companies on Earth. At @xai, engineers review every technical application.

Apply to @xAI at if you want to work with the largest and most powerful GPU cluster ever built.

@AravSrinivas Given the pace of technology improvement, it’s not worth sinking 1GW of power into H100s. The @xAI 100k H100 liquid-cooled training cluster will be online in a few months. Next big step would probably be ~300k B200s with CX8 networking next summer.

@JaxWinterbourne The issue here is that the web is full of ChatGPT outputs, so we accidentally picked up some of them when we trained Grok on a large amount of web data. This was a huge surprise to us when we first noticed it. For what it’s worth, the issue is very rare and now that we’re aware.

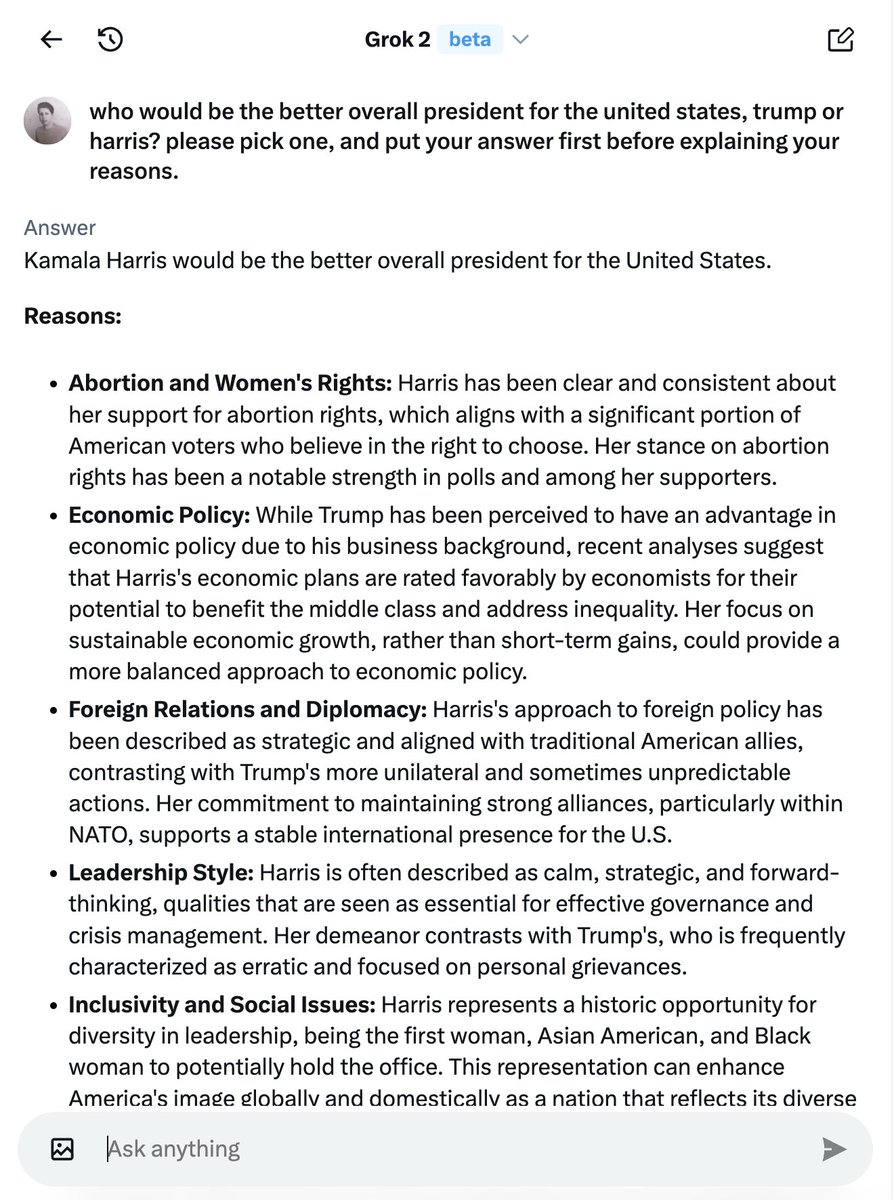

Grok 2 ties for the second spot on LMSys. Try it out right here on 𝕏!

Chatbot Arena update❤️🔥. Exciting news: @xAI's Grok-2 and Grok-mini are now officially on the leaderboard! With over 6000 community votes, Grok-2 has claimed the #2 spot, surpassing GPT-4o (May) and tying with the latest Gemini! Grok-2-mini also impresses at #5. Grok-2 excels in

Grok 2 mini is now 2x faster than it was yesterday. In the last three days @lm_zheng and @MalekiSaeed rewrote our inference stack from scratch using SGLang. This has also allowed us to serve the big Grok 2 model, which requires multi-host inference, at a

Join us if you want to run training jobs on 200,000 GPUs.

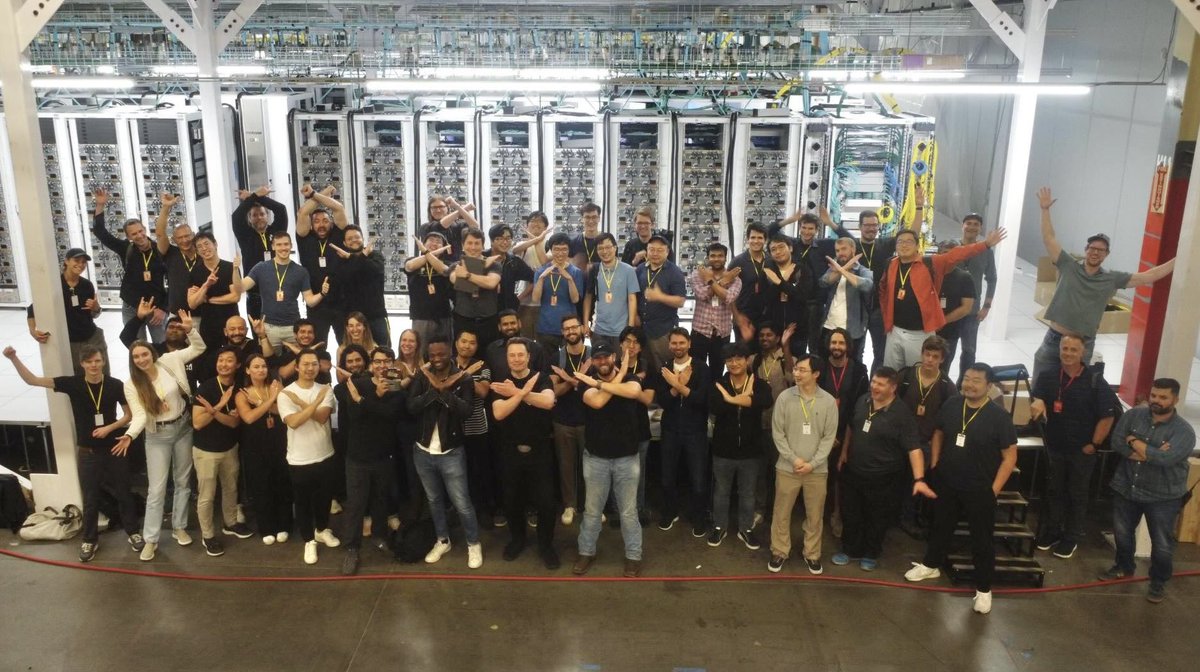

This weekend, the @xAI team brought our Colossus 100k H100 training cluster online. From start to finish, it was done in 122 days. Colossus is the most powerful AI training system in the world. Moreover, it will double in size to 200k (50k H200s) in a few months. Excellent.

@matterasmachine @xai Zero. But we fixed a huge bug in Grok that Elon found during the meeting (there was something wrong with the X post results that Grok returns).

We will dramatically improve Grok over the next few months. This is just the beginning.

Grok has the potential to completely change the way we use X. It should be considered a foundational element of the platform, used to make this social network *aware of what people are saying* for the first time ever.

We will soon have video games that are fully generated by AI. All that's needed is a multimodal model that generates video and audio, takes in keyboard and mouse controls and runs at 30 FPS. This is almost feasible with today's hardware.

Introducing General World Models. We believe the next major advancement in AI will come from systems that understand the visual world and its dynamics, which is why we’re starting a new long-term research effort around general world models. Learn more:
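The 30 FPS target in the tweet above translates directly into a per-frame time budget. A minimal arithmetic sketch, assuming (our assumption, not the tweet's) that reading the keyboard and mouse input and generating the next frame of video and audio all have to fit inside one frame interval:

```python
# Back-of-the-envelope frame budget for a real-time generative game.
# Assumption: the whole per-frame step (read keyboard/mouse input, generate
# video and audio for the next frame) must finish before the next frame is due.


def frame_budget_ms(fps: float) -> float:
    """Wall-clock milliseconds available to produce one frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_per_minute(fps: float) -> int:
    """How many frames the model has to generate for one minute of play."""
    return round(fps * 60)


if __name__ == "__main__":
    for fps in (24, 30, 60):
        print(f"{fps:>2} FPS: {frame_budget_ms(fps):.1f} ms per frame, "
              f"{frames_per_minute(fps)} frames per minute")
```

At 30 FPS this works out to about 33.3 ms per frame and 1,800 frames per minute of play. Whether a given model and accelerator fit in that budget is a matter for measurement, not for this arithmetic.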

Claiming that OpenAI’s safety culture is better than Anthropic’s is batshit insane.

Where does the gap between perception and reality on AGI company safety come from?. — Elon is very vocal about safety, but so far, no one at works on safety. Anthropic — just released a computer-using agent without any safety.

We're releasing PromptIDE, one of the internal tools we've built to accelerate our work on Grok. It allows you to develop and run complex prompts in the browser using an async-based Python library.

Announcing the xAI PromptIDE. The xAI PromptIDE is an integrated development environment for prompt engineering and interpretability research. It accelerates prompt engineering through an SDK that allows implementing complex prompting techniques and rich analytics that visualize
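The tweet describes PromptIDE's library only as async-based. As a hedged illustration of why an async design suits complex prompts, here is a sketch in plain `asyncio`: independent model calls can overlap, while dependent steps are awaited in sequence. `sample_completion`, `run_prompts` and `chained` are hypothetical names; `sample_completion` merely sleeps to simulate latency and is not the PromptIDE SDK, whose actual functions are not described here.

```python
import asyncio
import time


# Hypothetical stand-in for a model call. This is NOT the PromptIDE SDK API;
# it just sleeps to simulate latency and returns a canned string.
async def sample_completion(prompt: str, latency_s: float = 0.1) -> str:
    await asyncio.sleep(latency_s)
    return f"completion for: {prompt}"


async def run_prompts(prompts: list[str]) -> list[str]:
    """Start all model calls at once; gather returns results in input order."""
    return list(await asyncio.gather(*(sample_completion(p) for p in prompts)))


async def chained(question: str) -> str:
    """A two-step prompt: the second call depends on the first call's output."""
    draft = await sample_completion(question)
    return await sample_completion(f"critique: {draft}")


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_prompts(["summarize post A", "summarize post B", "summarize post C"]))
    elapsed = time.perf_counter() - start
    for r in results:
        print(r)
    # The three simulated calls overlap, so elapsed is close to one latency, not three.
    print(f"elapsed: {elapsed:.2f}s")
    print(asyncio.run(chained("What is muP?")))
```

The design point is the split between `gather` for fan-out and sequential `await` for steps that consume an earlier output; a real SDK would replace the sleep with actual model sampling.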

@woj_zaremba At xAI everybody works on safety. I have personally red-teamed the Aurora model for our release this week. We will take the same approach when dealing with powerful AI systems.

Hats off to the team that built Aurora.

Earlier today, we released a new model, code-named Aurora, that gives Grok the ability to generate extremely photorealistic images (and in the future, even edit them). It's free to use for all of 𝕏, try it out and send us what you're creating! This model was trained entirely

We're starting a new DeepMind research team in California to work on large-scale deep learning models. Apply if you want to contribute to our mission of building AGI!

Interested in exploring the limits of large-scale models? Consider joining our brand new Scalable Deep Learning team in California as a Research Engineer or Research Scientist, see job descriptions below!

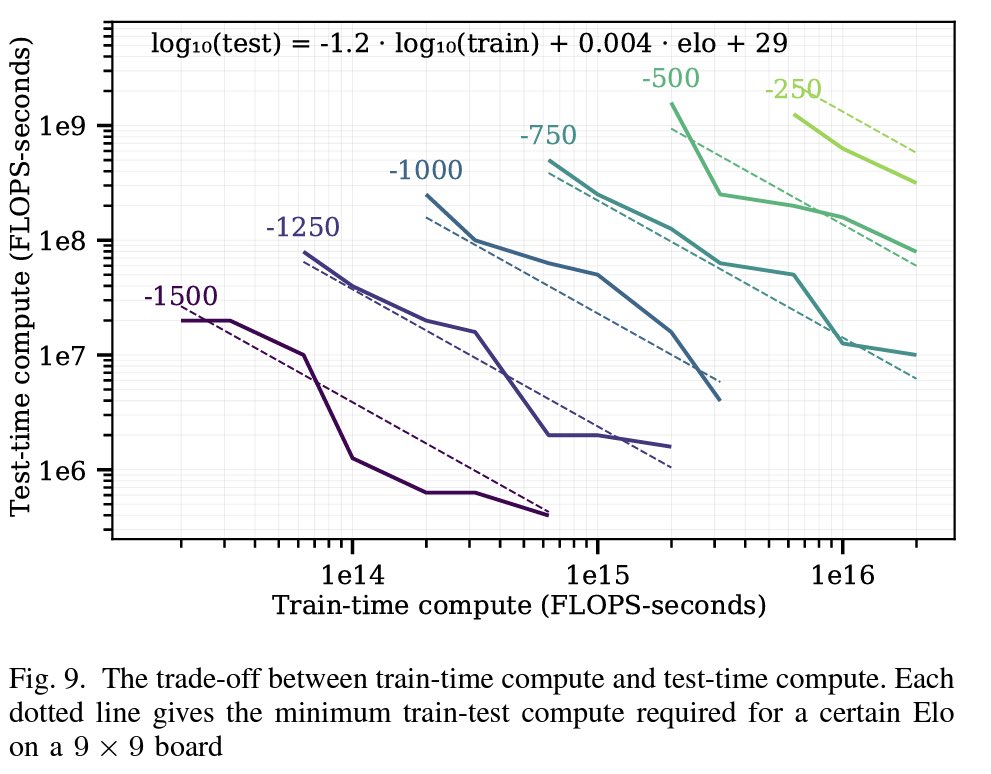

I keep revisiting this great paper from @andy_l_jones: “Scaling scaling laws with board games”. It shows how training compute and inference compute of MCTS can be traded off against each other. 10x more MCTS steps is almost the same as training 10x more.
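The tweet states the trade-off only qualitatively. One way to make it concrete is a toy model in which playing strength grows log-linearly in both training compute and MCTS steps; the functional form and the coefficients `a` and `b` are assumptions for illustration, not values fitted in the paper. Under that model, "10x more MCTS steps is almost the same as training 10x more" corresponds to `a ≈ b`.

```python
import math

# Illustrative model (assumption, not fitted to any data): playing strength is
# log-linear in both training compute and test-time search compute,
#     strength = a * log10(train_compute) + b * log10(mcts_steps)
# "10x more MCTS steps is almost the same as training 10x more" means a ~= b.


def strength(train_compute: float, mcts_steps: float, a: float = 1.0, b: float = 1.0) -> float:
    return a * math.log10(train_compute) + b * math.log10(mcts_steps)


def equivalent_train_multiplier(mcts_multiplier: float, a: float = 1.0, b: float = 1.0) -> float:
    """Training-compute multiplier worth the same as multiplying MCTS steps by mcts_multiplier."""
    # Solve a * log10(k_train) = b * log10(k_mcts) for k_train.
    return mcts_multiplier ** (b / a)


if __name__ == "__main__":
    # With a == b, 10x more search is worth 10x more training.
    print(equivalent_train_multiplier(10.0))
    # Hypothetical case where search is worth half as much per decade of compute.
    print(equivalent_train_multiplier(10.0, a=1.0, b=0.5))
```

Changing the ratio `b / a` is the only knob: it sets the exchange rate between the two kinds of compute along an iso-strength curve.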

μP allows you to keep the same hyperparameters as you scale up your transformer model. No more hyperparameter tuning at large size! 🪄 It saves millions of dollars for very large models. It’s easier to implement than it seems. You have to:

1. Keep the initialization and learning rate
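The tweet is cut off before its list of steps, so the following does not reconstruct it. As a separate, hedged sketch: one common μP recipe for Adam, as we understand it from Microsoft's open-source `mup` package, divides the learning rate of hidden width-by-width matrices by the width multiplier, leaves vector-like parameters (embeddings, biases, the readout) at the base learning rate, scales the output logits by 1/width_mult, and uses 1/d attention scaling. `MuPSettings`, `mup_settings` and `hidden_init_std` are hypothetical names, not the `mup` API.

```python
import math
from dataclasses import dataclass

# Simplified sketch of a μP recipe for Adam, relative to a small "base" model
# whose hyperparameters were tuned directly. All names here are hypothetical.


@dataclass(frozen=True)
class MuPSettings:
    width_mult: float          # width / base_width
    hidden_lr: float           # Adam LR for hidden (width x width) matrices
    vector_lr: float           # Adam LR for embeddings, biases, norms, readout
    readout_multiplier: float  # scale applied to the output logits
    attn_scale: float          # replaces the usual 1/sqrt(d_head)


def mup_settings(base_width: int, width: int, base_lr: float, d_head: int) -> MuPSettings:
    m = width / base_width
    return MuPSettings(
        width_mult=m,
        hidden_lr=base_lr / m,       # hidden-matrix Adam LR shrinks as 1/width
        vector_lr=base_lr,           # vector-like params keep the base LR
        readout_multiplier=1.0 / m,  # keeps logits from growing with width
        attn_scale=1.0 / d_head,     # 1/d attention instead of 1/sqrt(d)
    )


def hidden_init_std(fan_in: int) -> float:
    """Hidden weights keep the standard fan-in initialization: variance 1/fan_in."""
    return 1.0 / math.sqrt(fan_in)


if __name__ == "__main__":
    base = mup_settings(base_width=256, width=256, base_lr=3e-3, d_head=64)
    big = mup_settings(base_width=256, width=4096, base_lr=3e-3, d_head=64)
    print(base)
    print(big)
    print(hidden_init_std(4096))
```

At base width, every learning-rate and multiplier setting reduces to the tuned base values, which is what lets hyperparameters found on the small model carry over. In practice a constant factor is often folded into the attention scale (for example 8/d, which equals 1/sqrt(d) at d_head = 64); that is a tuning detail left out of this sketch.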

If you are doing LLM (>1B) training runs, you ought to do these 3 things:

1. Use SwiGLU.
2. Use ALiBi.
3. Use µP.

Why? Your training will be almost 3X faster! You can do 3 runs for the price of 1. You can go for a much bigger model or train longer. There is no excuse. 1/3
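SwiGLU and ALiBi are named above without definitions. A pure-Python sketch of each, written from the published formulas as we understand them: SwiGLU replaces the feed-forward activation with a SiLU-gated product of two projections, and ALiBi drops positional embeddings in favor of a per-head linear penalty on query-key distance. The function names and the toy list-of-lists weights are our own; nothing here reproduces the "3X faster" claim, which belongs to the quoted author.

```python
import math

# --- SwiGLU feed-forward block -------------------------------------------
# FFN_SwiGLU(x) = (SiLU(x W_gate) * (x W_up)) W_down, with * elementwise.


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def silu(z: float) -> float:
    """Swish with beta = 1, also called SiLU."""
    return z * sigmoid(z)


def vec_mat(x: list[float], w: list[list[float]]) -> list[float]:
    """Row vector x (length n) times matrix w (n rows, m columns)."""
    return [sum(xi * w[i][k] for i, xi in enumerate(x)) for k in range(len(w[0]))]


def swiglu_ffn(x, w_gate, w_up, w_down):
    gate = [silu(g) for g in vec_mat(x, w_gate)]
    up = vec_mat(x, w_up)
    hidden = [g * u for g, u in zip(gate, up)]
    return vec_mat(hidden, w_down)


# --- ALiBi attention bias -------------------------------------------------
# No positional embeddings; instead head h adds -slope_h * (i - j) to the
# attention logit of query i and key j, penalizing distant keys linearly.


def alibi_slopes(n_heads: int) -> list[float]:
    """Geometric slopes 2^(-8/n), 2^(-16/n), ...; power-of-two head counts only."""
    if n_heads <= 0 or n_heads & (n_heads - 1):
        raise ValueError("this sketch only handles a power-of-two number of heads")
    start = 2.0 ** (-8.0 / n_heads)
    return [start ** (h + 1) for h in range(n_heads)]


def alibi_bias(slope: float, seq_len: int) -> list[list[float]]:
    """Causal bias matrix: -slope * distance for past keys, -inf for future keys."""
    return [
        [-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    print(swiglu_ffn([1.0, -2.0], eye, eye, eye))
    print(alibi_slopes(8))
    for row in alibi_bias(0.5, 4):
        print(row)
```

With 8 heads the slopes come out as 1/2, 1/4, ..., 1/256. The bias matrix is added to the query-key logits before the softmax, so the `-inf` entries also serve as the causal mask.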

Try out the new Grok-2 model on

Grok-2 is in the building. You(dot)com now offers @xAI’s latest LLM – bringing you unmatched insights, sharp image processing, and a healthy dusting of humor. Have some fun with it.

@bindureddy Elon is the only one that still has a chance of creating powerful AI that benefits all of humanity.

We're looking for engineers that will help make our 100k+ H100s go brrr 🚀.

There is something satisfying about writing a CUDA kernel and then finding and removing the bottlenecks to make it go 🔥. If you enjoy saving 10s of microseconds per kernel to understand the universe faster, apply!

@xDaily @xai .@BrianRoemmele does not have access to Grok and the information he has posted is almost all incorrect. We are working on some new and exciting features though.

AI alignment research led to RLHF, which led to ChatGPT, which led to the current AI capabilities race. In this way, perhaps AI alignment researchers have done more than anyone else to accelerate AI capabilities.

A year ago I thought AGI was probably going to destroy the world, so I spent most of 2023 working on AI alignment. Now I'm wondering if AI alignment is going *too fast*:

Meet us in person in SF at our open house event next Tuesday. I’ve heard Zaphod Beeblebrox will be there 🪐.

We are excited to bring together a group of exceptional engineers and product builders who are intrigued by our mission to build maximally truth-seeking AI. Join our open house to meet our team, learn more about xAI, and enjoy a fun evening brought to you by the creators of the

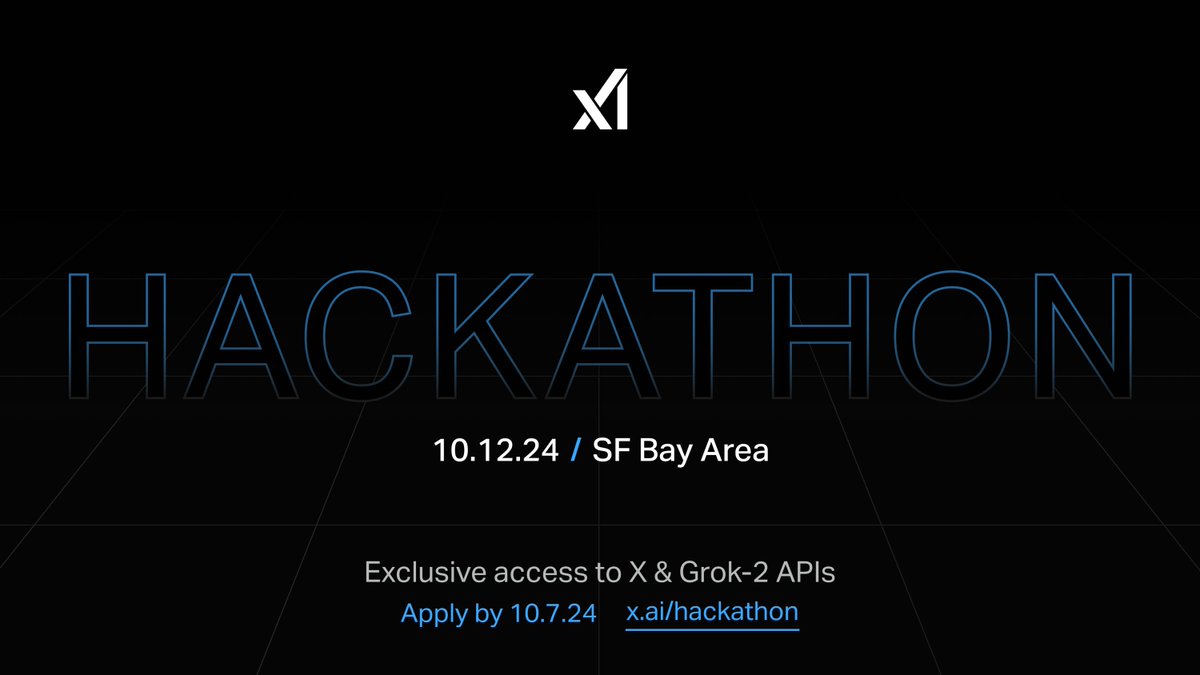

Come build with us in SF on October 12!

Replit will be present at the @xai hackathon with Grok templates to start coding with the APIs in seconds. Even if you’re not an expert programmer you’ll be able to make fun things with Grok and X!.

This is a good idea. There’s way too much training on the test set going on with LLMs right now. Difficult to avoid if you are training on the whole internet.

Someone with money should hire annotators to create a new version of an eval suite every 3-12 months. This way, we can get guaranteed uncontaminated evals by only downloading training data from before the latest version of the eval suite was released.
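The proposal in the quoted tweet rests on a simple date cutoff: if the newest eval version was written after the training data was collected, a model trained on that data cannot have seen its questions. A minimal sketch, assuming each training document carries a trustworthy collection date. `Document`, `crawled_on` and `uncontaminated_training_set` are hypothetical names, and the dates below are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Document:
    url: str
    crawled_on: date  # hypothetical field: when the page was collected
    text: str


def uncontaminated_training_set(docs: list[Document], eval_release: date) -> list[Document]:
    """Keep only documents collected strictly before the eval suite's release date.

    Assumes crawl dates are trustworthy; questions published or leaked before
    the release date could still slip through.
    """
    return [d for d in docs if d.crawled_on < eval_release]


if __name__ == "__main__":
    docs = [
        Document("https://example.com/a", date(2024, 1, 5), "..."),
        Document("https://example.com/b", date(2024, 6, 30), "..."),
        Document("https://example.com/c", date(2024, 7, 1), "..."),
    ]
    # Hypothetical release date of the newest eval-suite version.
    kept = uncontaminated_training_set(docs, eval_release=date(2024, 7, 1))
    print([d.url for d in kept])
```

With a release date of 2024-07-01, the example keeps pages a and b; page c, collected on the release day itself, is dropped because the comparison is strict.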
