Eunjung Cho

@eunjung_cho

Followers: 156

Following: 604

Media: 0

Statuses: 131

Zürich, Switzerland

Joined December 2017

Really excited about our recent large collaboration work on NLP for Social Good. The work stems from our discussions at the @NLP4PosImpact Workshop at #EMNLP2024 @emnlpmeeting. Thanks to all our awesome collaborators, workshop attendees and all supporters!

3

26

103

Okay, I'm starting a thread of all the best protest songs/covers I find in the run-up to the impeachment vote, because Koreans know how to do protest songs and I think it could get epic. I'll add more as I find them. Let's start with this festive one: https://t.co/PZTGiADRVL

29

4K

15K

Will present this tomorrow, Nov. 14th 2pm at #EMNLP2024 (In-Person Poster Session G, Riverfront Hall). In case you're interested in generating diverse political viewpoints with LLMs, come to the poster session!

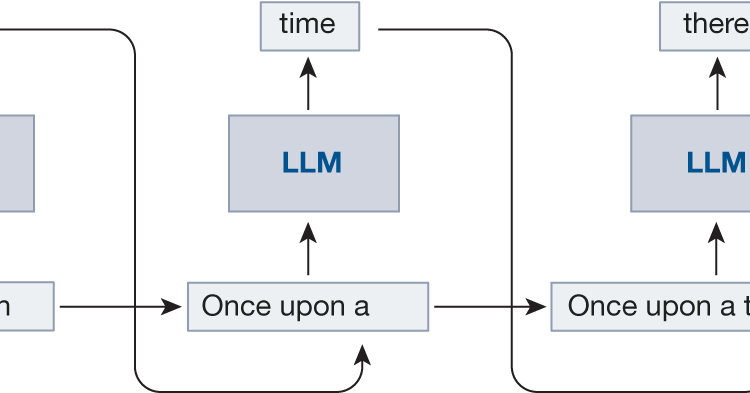

📢 New preprint: Aligning LLMs with diverse political views (with @phinifa @eunjung_cho @caglarml and @ellliottt) https://t.co/CrlJQaavoe More details in 🧵[1/7]

0

3

19

Our article “A Tough Balancing Act - The Evolving AI Governance in Korea”, where we provide an overview of the landscape of AI ethics and governance in Korea, has been published in East Asian Science, Technology and Society (EASTS). Freely available on: https://t.co/C9h7ZMJpSa

tandfonline.com

AI governance began to emerge as a focal point of discourse in Korea from the mid 2010s. Since then, multiple government and public bodies have released guidelines for AI governance, and various te...

0

0

1

📢 New preprint: Aligning LLMs with diverse political views (with @phinifa @eunjung_cho @caglarml and @ellliottt) https://t.co/CrlJQaavoe More details in 🧵[1/7]

3

9

48

Excited to share a unifying formalism for the main problem I’ve tackled since starting my PhD! 🎉 Current AI Alignment techniques ignore the fact that human preferences/values can change. What would it take to account for this? 🤔 A thread 🧵⬇️

7

45

264

🚨 Fascinating paper alert: "Promises and pitfalls of artificial intelligence for legal applications" by @sayashk, @PeterHndrsn & @random_walker is a must-read for everyone interested in AI and the legal profession. Quotes: "Recent instruction-tuned language models (chatbots)

4

79

248

The idea of "machine unlearning" is getting attention lately. Been thinking a lot about it recently and decided to write a long post: https://t.co/Q1bfsF7rxk 📰 Unlearning is no longer just about privacy and right-to-be-forgotten since foundation models. I hope to give a gentle

ai.stanford.edu

As our ML models today become larger and their (pre-)training sets grow to inscrutable sizes, people are increasingly interested in the concept of machine unlearning to edit away undesired things...

23

160

748

In which I review two pieces I like lots: Risky Speech Systems: Tort Liability for AI-Generated Illegal Speech https://t.co/s7LRGVOCHO

@IReadJotwell @JaneYakowitz

cyber.jotwell.com

Jane Bambauer, Negligent AI Speech: Some Thoughts about Duty, 3 J. Free Speech L. 344 (2023). Nina Brown, Bots Behaving Badly: A Products Liability Approach to Chatbot-Generated Defamation, 3 J. Free...

0

9

34

More hallucinations of case law by LLMs used in legal settings, this time in the UK. https://t.co/tazNOxUAxB

1

3

9

What's the best work you know that finds evidence for internal representations of world models in a language model?

15

16

93

My new @Nature paper "Role Play with Large Language Models" was briefly behind a paywall. It's now free-to-read:

nature.com

Nature - By casting large-language-model-based dialogue-agent behaviour in terms of role play, it is possible to describe dialogue-agent behaviour such as (apparent) deception and (apparent)...

17

134

646

About a week ago -- attended #EAAMO2023 and presented our PaperCard paper with @ejcho95 and @kchonyc. Truly great people and community :) Thanks for all the constructive comments, discussions, and engaging interactions! arXiv version available at: https://t.co/GlhEdwQlyi

0

5

9

Very helpful analysis of the AI EO, from @_KarenHao and @matteo_wong. "The White House’s Impossible AI Task". https://t.co/IJ0GqHZSrQ

theatlantic.com

President Biden’s big swing on AI is as impressive and confusing as the technology itself.

2

10

29

Zuck @ Lex > Meta has solved human emulation > you can store and emulate your loved ones > Meta trying to figure out what they’ll allow for people who have passed > leaning into one creator -> many fans relationships through AI > the future is parasocial > they’re aiming

54

108

1K

Thread: [1/4] Some MIT/Harvard collaborators and I just finished a project to show that Stable Diffusion objectively succeeds at copying the styles of digital artists with copyrighted work. Why might you care about this if you care about AI safety? https://t.co/MuH5vbq3eD

github.com

Contribute to thestephencasper/sd_cycle_consistency development by creating an account on GitHub.

22

261

881

Cho, Cho & Cho (2023) w/ @ejcho95 & @kchonyc finally online!😆 A short disclaimer for our work-in-progress: it was submitted to HAL far before the recent decision on #FAccT2023 and was unexpectedly disclosed today after a long moderation process... Next ver. should be much improved :-)

it took us two months to have this preprint archived... can you guess why? a fun project led by Won Ik Cho and Eunjung Cho! [Cho, Cho & Cho, 2023] https://t.co/J1hhfXohQD

0

2

16

I was part of the red team for GPT-4 — tasked with getting GPT-4 to do harmful things so that OpenAI could fix it before release. I've been advocating for red teaming for years & it's incredibly important. But I'm also increasingly concerned that it is far from sufficient. 🧵⤵️

63

638

3K

Absolutely delighted to finally be able to share my new paper with @SandraWachter5 & @c_russl "The Unfairness of fair machine learning: Levelling down and strict egalitarianism by default" https://t.co/SsOgzKv0aV

@oiioxford @OxGovTech @FAccTConference

1

15

55

This week in The Algorithm, our weekly newsletter covering artificial intelligence: The EU wants to regulate your favorite AI tools.

technologyreview.com

Plus: Generative AI is changing everything. But what’s left when the hype is gone?

2

8

15