Arslan Chaudhry

@arslan_mac

Followers

610

Following

76

Statuses

64

Research Scientist @GoogleDeepMind | Ex @UniofOxford, @AIatMeta, @GoogleAI

Mountain View, CA

Joined September 2011

RT @Olympics: Believe it, Nadeem. You just became Pakistan's first ever athletics Olympic champion. 🇵🇰🥹

0

21K

0

I will be at ICML in Vienna. If you are interested in continual learning in foundation models or other forgetting or adaptation related challenges in (V)LMs, come chat to me. I will be at/around the @GoogleDeepMind booth.

2

0

16

RT @yeewhye: We're looking for an exceptional junior researcher in AI/ML with strong interests in diversity, equity and inclusion to fill t…

0

22

0

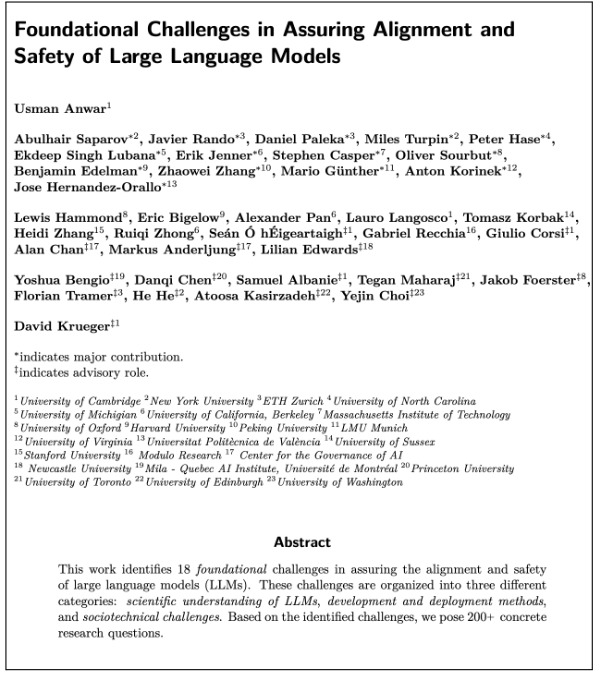

Very well done @usmananwar391 and coauthors.

I’m super excited to release our 100+ page collaborative agenda - led by @usmananwar391 - on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from NLP, ML, and AI Safety communities! Some highlights below...

1

0

3

RT @schwarzjn_: @thegautamkamath I have no doubt about the positive intentions behind this, but my fear is that it's yet another policy tha…

0

3

0

@vfsglobalcare Could you please respond to the DM? It’s been more than 6 hours and we are running short on time here.

1

0

0

Gemini has achieved a classification accuracy of 90% on MMLU (with a combo of greedy sampling and a majority vote of 32 CoT samples). This is remarkable! For reference, expert-level perf, defined as the 95th percentile human test-taker accuracy in *each subject*, would be ~89.8%.

Exciting times, welcome Gemini (and MMLU>90)! State-of-the-art on 30 out of 32 benchmarks across text, coding, audio, images, and video, with a single model 🤯
Co-leading Gemini has been my most exciting endeavor, fueled by a very ambitious goal. And that is just the beginning!
A long 🐍 post about our Gemini journey & state of the field.
The biggest challenges in LLMs are far from trivial or obvious. Evaluation and data stand out to me. We've moved beyond the simpler “Have we won in Go/Chess/StarCraft?” to “Is this answer accurate and fair? Is this conversation good? Does this complex piece of text prove the theorem?” Exciting potential coupled with monumental challenges.
The field is less ripe further down the model pipeline. Pretraining is relatively well understood. Instruction tuning and RLHF, less so. In AlphaGo and AlphaStar we spent 5% of compute in pre-training and the rest in the very important RL phase, where the model learns from its successes or failures. In LLMs, we spend most of our time on pretraining. I believe there’s huge potential to be untapped. Cakes with lots of cherries, please 🎂
@Google has demonstrated its ability to move fast. It has been an absolute blast to see the energy from my colleagues and the support received. A “random” highlight is coauthoring our tech report with a co-founder. Another is coleading with @JeffDean. But beyond individuals, Gemini is about teamwork: it is important to recognize the collective effort behind such achievements. Picture a room full of brilliant people, and avoid attributing success solely to one person.
On a personal note, recently I celebrated my 10 year anniversary at Google, and it’s been 8 years since @quocleix and I co-authored “A Neural Conversational Model”, which gave us a glimpse of what was, has, and is yet to come. Back then, that line of work received a lot of skepticism. Lessons learned: whatever your passion is, push for it!
Zooming back out, there’s lots of change in our field, and the stakes couldn’t be higher. Excited for what’s to come from Gemini, but humbled by the responsibility to “get it right”. 2024 will be drastic. Welcome Gemini!

2

0

4

Our team at @GoogleDeepMind Mountain View is looking for a Research Scientist/Research Engineer. If you are interested in working on multimodal foundation models and their adaptability over time, come chat with me. I will be attending ICML and my schedule is quite flexible.

6

18

245

RT @bschoelkopf: Another AI paradox: people are excited about LLMs, some even think that AGI is just around the corner. But some students a…

0

309

0

Our work on a new large-scale benchmark composed of 30 years of computer vision research to explore knowledge accrual in continual learning systems.

Introducing NEVIS’22, a new benchmark developed using 30 years of computer vision research. This provides an opportunity to explore how AI models can continually build on their knowledge to learn future tasks more efficiently. ➡️

0

0

3

@timrudner @NYUDataScience @andrewgwils @yaringal @yeewhye This is such good news! Congratulations, Tim!

1

0

1

About time!

Doina Precup, @rpascanu and I are very excited to announce the first International Conference on Lifelong Learning Agents (CoLLA)! If you are working in lifelong/continual learning, meta-learning, multi-task learning, or OOD generalization, consider submitting your work to CoLLA!

0

0

0