Zonglin Yang (on Job Market!)

@Yang_zy223

Followers

338

Following

2K

Statuses

537

NLP PhD at @NTUsg | Previous @Cornell_CS | Working on Reasoning & LLMs for Scientific Discovery | Former Intern at @MSFTResearch (x2) | MOOSE & MOOSE-Chem

Joined January 2022

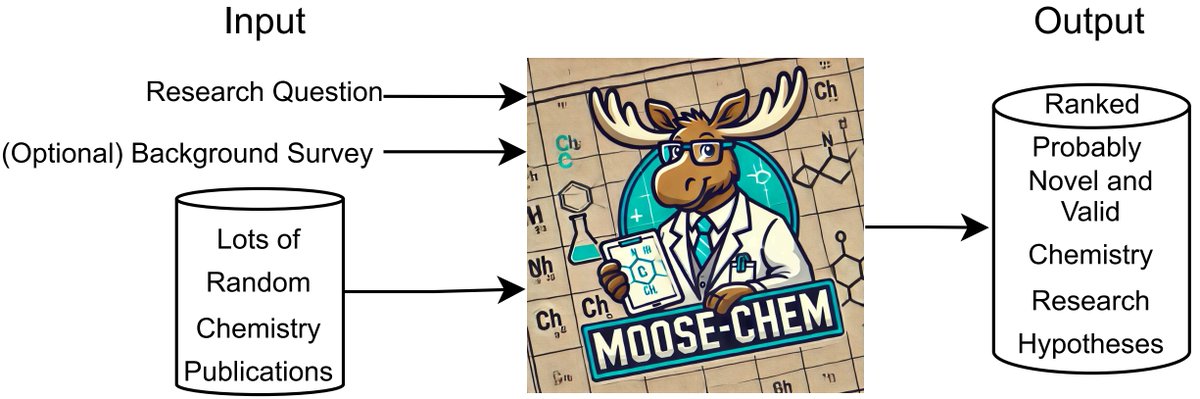

Excited to share that our paper on automated scientific discovery has been accepted to #ICLR2025! In brief, 1. It shows that LLMs can rediscover the main innovations of many research hypotheses published in Nature or Science. 2. It provides a mathematically proven theoretical foundation for automated scientific hypothesis discovery. Grateful to my amazing collaborators: Wanhao Liu, Ben Gao, @0xTongXie, Yuqiang Li, @ouyang_wanli, @soujanyaporia, @erikcambria, and @Schrodinger_t! #ai4research #ai4science #ai4discovery

Given only a chemistry research question, can an LLM system output novel and valid chemistry research hypotheses? Our answer is YES!!!🚀Even can rediscover those hypotheses published on Nature, Science, or a similar level. Preprint: Code:

2

11

28

RT @SGRodriques: If you don't already put your papers on ArXiv/BioRxiv, you should really start doing it. Pretty soon most interaction with…

0

20

0

RT @andrewwhite01: A really nice example of how the reasoning "patterns" like reflection and considering alternatives just arises from RL

0

1

0

RT @arankomatsuzaki: SFT Memorizes, RL Generalizes: A Comparative Study of Foundation Model Post-training Shows that: - RL generalizes in…

0

149

0

RT @denny_zhou: Mathematical beauty of self-consistency (SC) SC ( for LLMs essentially just does one thing: choo…

0

24

0

RT @denny_zhou: Chain of Thought Reasoning without Prompting (NeurIPS 2024) Chain of thought (CoT) reasoning ≠ C…

0

212

0

RT @junxian_he: We replicated the DeepSeek-R1-Zero and DeepSeek-R1 training on 7B model with only 8K examples, the results are surprisingly…

0

667

0

RT @denny_zhou: AGI is finally democratized: RLHF isn't just for alignment—it's even more fun when used to unlock reasoning. Once guarded b…

0

49

0

RT @denny_zhou: What is the performance limit when scaling LLM inference? Sky's the limit. We have mathematically proven that transformers…

0

531

0

RT @deepseek_ai: 🚀 DeepSeek-R1 is here! ⚡ Performance on par with OpenAI-o1 📖 Fully open-source model & technical report 🏆 MIT licensed: D…

0

8K

0

RT @SGRodriques: This short story from 2000 about superhuman scientists is both remarkably prescient and a great warning about why it is cr…

0

6

0

RT @YuanqiD: MolLEO is accepted @iclr_conf! We have made so much progress to show LLMs really have tons of knowledge about science and it’s…

0

5

0

RT @iScienceLuvr: I appreciate DeepSeek providing examples of failure, especially since these are ideas that have been widely discussed for…

0

160

0

RT @SirrahChan: Here's my attempt at visualizing the training pipeline for DeepSeek-R1(-Zero) and the distillation to smaller models. Not…

0

244

0

RT @_akhaliq: Google presents Evolving Deeper LLM Thinking Controlling for inference cost, we find that Mind Evolution significantly outpe…

0

212

0