Existential Risk Observatory ⏸

@XRobservatory

Followers: 1K | Following: 619 | Statuses: 974

Reducing AI x-risk by informing the public. We propose a Conditional AI Safety Treaty: https://t.co/xUZxozlNBF

Amsterdam, Netherlands

Joined March 2021

Today, we propose the Conditional AI Safety Treaty in @TIME as a solution to AI's existential risks. AI poses a risk of human extinction, but this problem is not unsolvable. The Conditional AI Safety Treaty is a global response to avoid losing control over AI. How does it work?

It is encouraging that the British public and British politicians support regulation to mitigate the risk of extinction from AI. Other countries should follow. Ultimately, a global AI Safety Treaty should be signed.

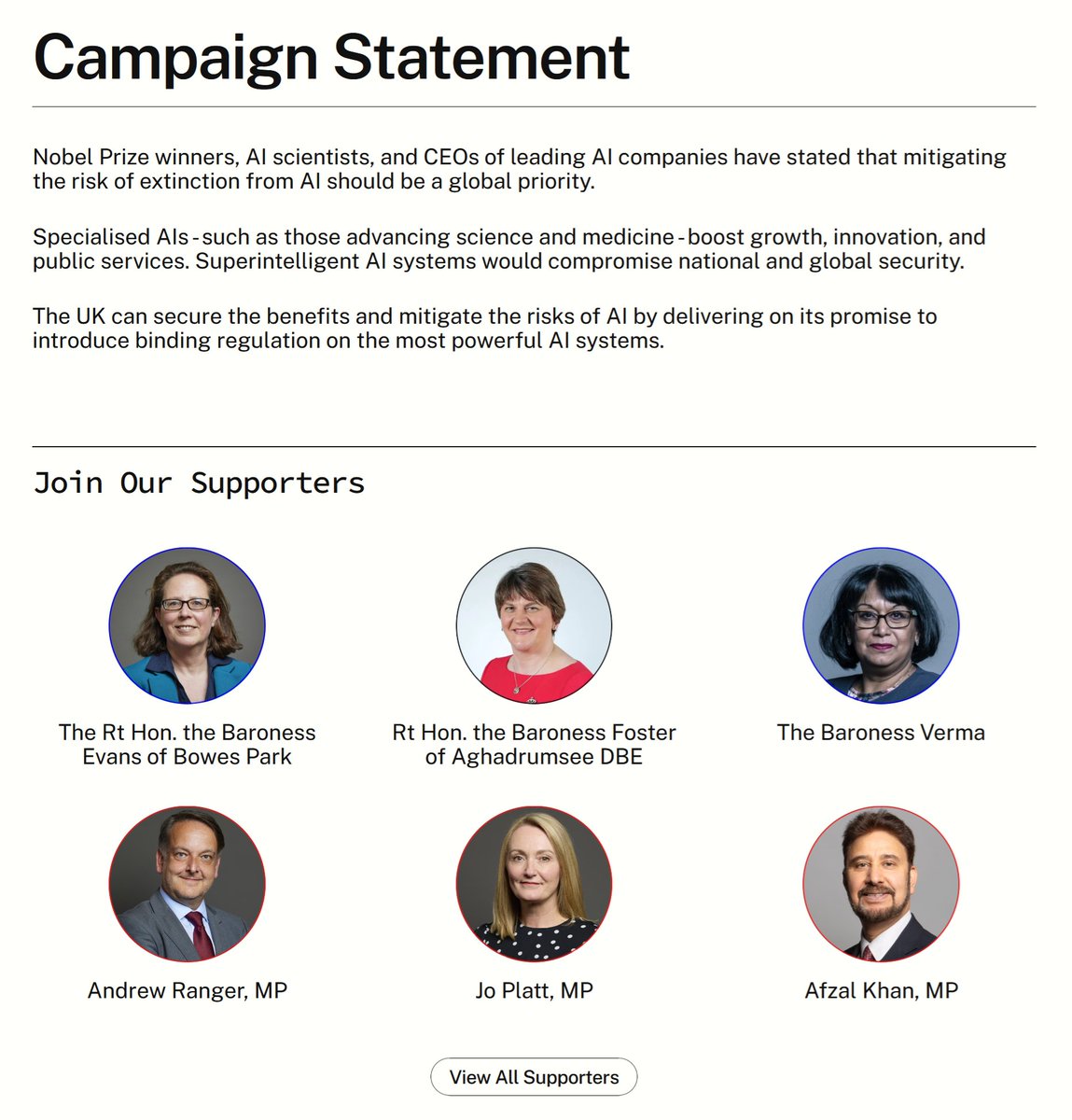

UK POLITICIANS DEMAND REGULATION OF POWERFUL AI TODAY: Politicians across the UK political spectrum back our campaign for binding rules on dangerous AI development. This is the first time a coalition of parliamentarians has acknowledged the extinction threat posed by AI. The public is fully on board. Our polling with YouGov released today shows that 87% of the British public want AI developers to prove their systems are safe before release. Only 9% trust tech CEOs to act in the public interest. Assembling this coalition is a significant milestone on the path to getting dangerous AI development under control. The gravity of the threat and the urgency with which it must be addressed cannot be overstated. Nobel Prize winners, hundreds of top AI scientists, and even the CEOs of the leading AI companies have warned that AI poses a threat of extinction. The Labour government clearly promised in its manifesto that it would introduce "binding regulation on the handful of companies developing the most powerful AI models". The public wants it, parliamentarians call for it, humanity needs it. Time to deliver.

Prohibition of autonomous AI agents, which would logically include existentially risky AI, would slash existential risk. Therefore, we welcome "Fully Autonomous AI Agents Should Not be Developed" by @mmitchell_ai et al! We would be particularly excited about future work towards enforceable international legislation which effectively stops overly risky AI agents from being created.

New piece out! We explain why Fully Autonomous Agents Should Not be Developed, breaking “AI Agent” down into its components & examining through ethical values. With @evijitghosh @SashaMTL and @GiadaPistilli (1/2)

Today, the Green Screen Coalition releases their call to keep AI within planetary boundaries. We have signed this statement, since we think overshooting planetary boundaries, possibly spectacularly so, is a significant threat post-AGI. This could either be caused by direct emissions, or by a skyrocketing economy, made possible by AGI. The AI Action Summit should be much more ambitious in solving both today's and tomorrow's AI risks!

Proud that our AI Safety Debate with Prof. @Yoshua_Bengio will be opened by Zsolt Szabó @Staatssec_BZK, State Secretary for Kingdom Relations and Digitalisation of the Netherlands! We are currently sold out. If you have registered but can't make it, please cancel your registration so others can take your place. We will also be livestreaming the debate. See you in Paris!

RT @Yoshua_Bengio: A few reflections I had while watching this interview featuring @geoffreyhinton: It does not (or should not) really mat…

Although we think loss of control should remain the #1 priority, gradual disempowerment, or at least mass unemployment and its consequences, is also a crucial topic. Mass unemployment could increase economic inequality, potentially followed by inequality of power. To prevent these outcomes, it will become even more important to protect our democracy and redistribute AI-generated profits effectively. Humanity has ample experience in dealing with these questions, but a good outcome is far from guaranteed. The battle for a good future is only getting started.

New paper: What happens once AIs make humans obsolete? Even without AIs seeking power, we argue that competitive pressures will fully erode human influence and values. with @jankulveit @raymondadouglas @AmmannNora @degerturann @DavidSKrueger 🧵

Proud to have the Chair of the first International AI Safety Report as a keynote speaker at our debate in Paris on 9 Feb! There are still a few spots left. We will be livestreaming as well. We hope to see you there!

Today, we are publishing the first-ever International AI Safety Report, backed by 30 countries and the OECD, UN, and EU. It summarises the state of the science on AI capabilities and risks, and how to mitigate those risks. 🧵 Link to full Report: 1/16
