Ondrej Bohdal

@OBohdal

Followers: 262
Following: 258
Statuses: 53

Machine learning researcher at Samsung Research @samsungresearch. Previously @InfAtEd @EdiDataScience @turinginst @AmazonScience

London, UK

Joined December 2020

Our benchmark for evaluating in-context learning of multimodal LLMs has been accepted to ICLR'25! 🎉 Check out the project page for more details: 📄

Our VL-ICL Bench has been accepted to @iclr_conf! It's been almost a year since we developed it, yet state-of-the-art VLMs still struggle with learning in-context. Great to work with @OBohdal and @tmh31.

🚀Excited to share our latest work 𝗟𝗼𝗥𝗔.𝗿𝗮𝗿: an efficient method to merge LoRAs for personalized content and style image generation! 🖼️✨

🛸Excited to release 𝗟𝗼𝗥𝗔.𝗿𝗮𝗿, a groundbreaking method for personalized content and style image generation 🦕. 📜 Paper and video: Huge thanks to the co-authors: @OBohdal, Mete Ozay, Pietro Zanuttigh, and @umbertomichieli

RT @RamanDutt4: Looking to reduce memorization WHILE improving image quality in diffusion models? Delighted to share our work "𝐌𝐞𝐦𝐂𝐨𝐧𝐭𝐫𝐨𝐥…

RT @RamanDutt4: 🚨 MemControl: Mitigating Memorization in Medical Diffusion Models via Automated Parameter Selection A new strategy to miti…

RT @RamanDutt4: Finally arrived in Vienna to present FairTune at @iclr_conf. A dream come true ✨ Also, co-organizing the ML-Collective soci…

RT @yongshuozong: VLGuard is accepted to #ICML2024! Check out our strong baseline for 🛡️safeguarding🛡️ VLLMs:

Noise can be helpful for improving generalisation and uncertainty calibration of neural networks - but how to use it effectively in different scenarios? Find out in our recent paper that was accepted to #TMLR!

I am thrilled to share our latest paper, "Navigating Noise: A Study of How Noise Influences Generalisation and Calibration of Neural Networks", published in @TmlrOrg. This work is a collective effort by @OBohdal, @tmh31, @mrd_rodrigues and myself :).

Curious about how to better evaluate in-context learning in multimodal #LLMs? We introduce VL-ICL Bench to enable rigorous evaluation of MLLMs' ability to learn from a few examples✨. Details at

Evaluating the capabilities of multimodal in-context learning of #VLLMs? You can do better than VQA and captioning! Introducing *VL-ICL Bench* for both image-to-text and text-to-image #ICL. Project page:

Vision-language models are highly capable yet prone to generating unsafe content. To help with this challenge, we introduce the VLGuard safety fine-tuning dataset ✨, together with two strategies for how to utilise it ✅. Learn more at ➡️

Your #VLLMs are capable, but they are not safe enough! We present the first safety fine-tuning dataset VLGuard for VLLMs. By fine-tuning on it, the safety of VLLMs can be substantially improved while maintaining helpfulness. Check here for more details:

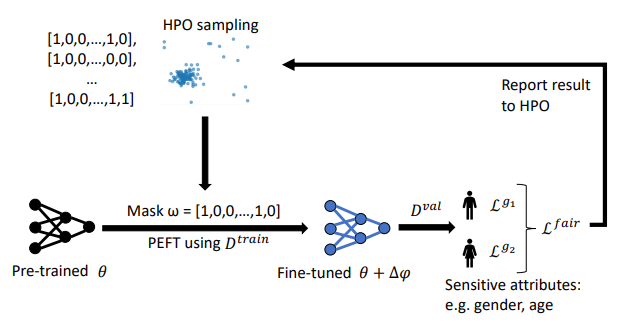

Interested in how to improve the fairness of large vision models? Learn more in our FairTune paper that was recently accepted to #ICLR!

🚨FairTune: Optimizing PEFT for Fairness in Medical Image Analysis A new framework to finetune your large vision models that improves downstream fairness. Accepted in #ICLR2024 ✨ With: @OBohdal @STsaftaris @tmh31 CC: @vivnat @alvarezvalle @fepegar_ @BoWang87 @BioMedAI_CDT

RT @chavhan_ruchika: 🎉 Super excited to share that I'll be attending #ICCV2023 in Paris 🇫🇷 to present my paper - Quality Diversity for Vis…

I'm really happy to share the news that Meta-Calibration has been accepted to TMLR! Meta-Calibration uses meta-learning as a new way to optimise for uncertainty calibration of neural networks. I've had a very positive experience with TMLR and certainly recommend submitting there!

Meta-Calibration: Learning of Model Calibration Using Differentiable Expected Calibration Error Ondrej Bohdal, Yongxin Yang, Timothy Hospedales. Action editor: Yingzhen Li. #calibration #prediction #optimise
