Shinji Nishimoto

@NishimotoShinji

Followers

2,366

Following

127

Media

24

Statuses

263

Professor at Osaka University @FBSpr /PI at @cinet_info Systems/Perceptual/Cognitive Neuroscientist Osaka➡️Berkeley➡️Osaka Views are my own

Joined December 2020

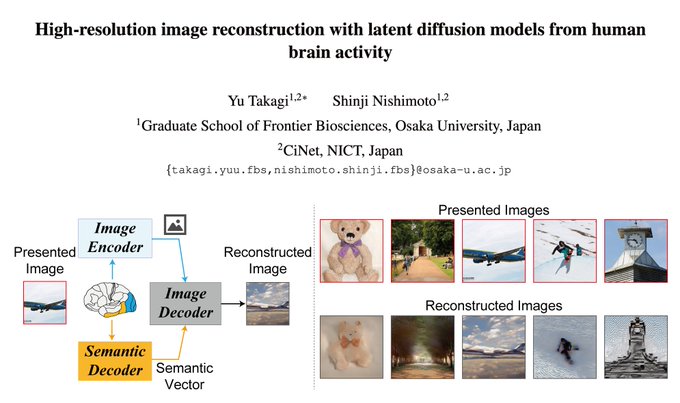

Our paper got accepted at #CVPR2023! (w/ @yu_takagi)

We modeled the relationship between human brain activity (early/semantic areas) and Stable Diffusion's latent representations and decoded perceptual contents from brain activity ("brain2image").

16

115

422

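Our paper was accepted at #CVPR2023, a major international conference in computer vision! (w/ @yu_takagi)

We showed the relationship between human brain activity and the latent representations of an image-generation AI (Stable Diffusion, SD), and among other things:

(1) decoded (visualized) perceptual content from brain activity

(2) interpreted SD latent representations in terms of brain activity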

2

86

278

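Training a foundation LLM on GCP with 2,048 A100 GPUs billed for 21 days (536 yen per A100-hour) costs about 550 million yen.

A100 rates on research HPC systems are an order of magnitude cheaper. Suppose you used

- AIST ABCI (960 GPUs x 82.5 yen)

- University of Tokyo Aquarius (360 GPUs x 31.3 yen)

- Osaka University SQUID (336 GPUs x 22.9 yen)

for a total of 1,656 GPUs over 26 days: that comes to about 61 million yen, which would burn through an entire KAKENHI Kiban (A) grant in just under a month.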
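The arithmetic behind these two estimates can be checked with a minimal sketch. The GPU counts, hourly rates, and durations are the ones quoted in the post; the function and variable names are illustrative only, and the calculation assumes every GPU is billed for 24 hours a day over the whole period.

```python
# Rough cost check for the figures quoted in the post above.
# Assumption: all GPUs are billed 24 hours/day for the full period.
HOURS_PER_DAY = 24


def cost_yen(gpus, yen_per_gpu_hour, days):
    """Total charge in yen for `gpus` GPUs at a flat hourly rate."""
    return gpus * yen_per_gpu_hour * days * HOURS_PER_DAY


# Cloud case: 2,048 A100s for 21 days at 536 yen per A100-hour.
gcp_total = cost_yen(2048, 536, 21)

# Research HPC case: (GPU count, yen per A100-hour) per system, for 26 days.
hpc_systems = {
    "ABCI": (960, 82.5),
    "Aquarius": (360, 31.3),
    "SQUID": (336, 22.9),
}
hpc_gpus = sum(count for count, _ in hpc_systems.values())
hpc_total = sum(cost_yen(count, rate, 26) for count, rate in hpc_systems.values())

print(f"GCP: {gcp_total / 1e8:.2f} x 10^8 yen")
print(f"HPC: {hpc_gpus} GPUs, {hpc_total / 1e6:.1f} million yen")
```

Under these assumptions the cloud figure works out to roughly 553 million yen and the HPC figure to roughly 61.3 million yen, consistent with the rounded numbers in the post.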

1

69

220

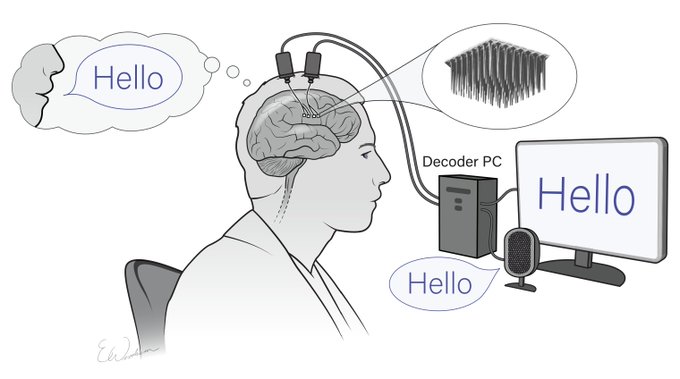

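Our paper on decoding mentally imagined images from brain activity has been published in Neural Networks.

This is the result of a collaboration led by Dr. Koide-Majima of NICT and Dr. Majima (@majimajimajima) of QST.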

3

56

184

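IRIS, a Transformer-based reinforcement learning model. With only the equivalent of two hours of game training, it reaches roughly average human performance on Atari games. Code is also available. (Micheli, Alonso, Fleuret, 2022 arXiv)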

0

28

163

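Our new paper has been published. We modeled brain activity during 103 diverse cognitive tasks and showed, among other things, that the cognitive feature space is preserved across the cerebrum, cerebellum, and subcortex, and that cognitive content can be decoded from activity in each of them.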

From @TomNakai and @NishimotoShinji: Modelling based on fMRI data obtained during more than 100 different cognitive tasks reveals that representation and decoding are preserved across the cortex, cerebellum, and subcortex 🧠

0

3

20

1

17

96

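On the correspondence between human brain activity under language stimuli (fMRI) and a language prediction model (GPT-2): does it hold only at the level of sets of words, or does it also retain semantic information that includes word order? This is tested with controls such as word shuffling. Results in IFG and AG support the latter.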

0

19

47

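The Algonauts Project 2023 Challenge, a brain-model-building contest. This year's goal is to build accurate models that predict human brain activity (7T fMRI recordings) while viewing a variety of images. Prizes for the top three; first place also receives travel support to attend CCN @Oxford.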

The Algonauts Project 2023 Challenge is now live!

Join and build a computational model that best predicts how the human brain responds to complex natural scenes 🧠💻

⏲️ Submission deadline: 26th of July

#algonauts2023

@CCNBerlin

@cvnlab

@goetheuni

2

36

91

0

9

46

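Decoding visual content from EEG (Bai et al., 2023 arXiv). EEG during image presentation (2,000 images x 6 subjects) was recorded with 128-channel EEG (data from Kavasidis et al., 2017 ACM). Images are generated via an EEG encoder-decoder, Stable Diffusion, and CLIP latent representations.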

3

14

43

Our paper on mental image reconstruction from human brain activity, led by Naoko Koide-Majima and @majimajimajima, was published in Neural Networks.

We combined Bayesian estimation and generative AI to visualize imagined scenes from human brain activity.

2

14

46

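What I personally found most striking among recent LLM-related news is this report that a model trained only on English can also handle Japanese and other languages (and that even the developers do not know why). As a phenomenon, corpus pre-training alone yields generalization to other languages, covering both grammar and meaning, at a certain level of accuracy.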

1

7

37

Vision Transformer is useful in modeling movie-evoked brain activity

Modeling movie-evoked human brain activity using motion-energy and space-time vision transformer features

#bioRxiv

0

1

3

1

10

33

This achievement was made possible thanks to various open source and open data projects, including:

Stable Diffusion:

@robrombach

@StabilityAI

…

Natural Scenes Dataset (NSD):

@cvnlab

Thomas Naselaris

1

7

32

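At #CVPR2023 we presented our research showing the relationship between brain activity and image-generation AI and decoding the content of visual experience.

We also released a technical paper and code, with a quantitative evaluation of combining caption generation, GANs, and depth estimation for decoding, and an examination of how the use of foundation models affects the results.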

Follow-up technical paper to our #CVPR2023 paper. Investigated how different methods affect the performance of visual experience reconstruction. The figure shows three randomly selected images generated by each method.

1

10

31

1

6

36

@BraydonDymm

@yu_takagi

It is possible to apply the same technique to brain activity during sleep, but the accuracy of such application is currently unknown.

0

0

28

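Decoding the intended speech of a paralyzed patient from intracortical electrode recordings (Willett et al., 2023 bioRxiv). At 62 words per minute, it is the fastest ever for this type of BCI. Electrodes: four 64-channel arrays implanted, two each in area BA44 (Broca's area) and area 6v (mouth premotor area?).

This is the same Stanford group that earlier achieved 90 characters per minute with a handwriting-intent BCI (Nature 2021).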

1

6

26

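Authors at different institutions and locations carried out the research and wrote it up with the entire process and all discussion in the open, via Discord/GitHub (anyone can join or view). Resources included the public NSD dataset and compute provided by Stability AI. I wonder whether this way of doing research will become more common in neuroscience as well.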

I'm really excited to share @MedARC_AI's first paper since our public launch 🥳

🧠👁️ MindEye!

Our state-of-the-art fMRI-to-image approach that retrieves and reconstructs images from brain activity!

Project page:

arXiv:

25

144

671

1

5

25

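In situ sequencing of 1.2 million mouse neurons to identify cell-level gene expression (107 types) in each brain region. Findings include a tendency for similar cells to connect to each other. Reportedly achievable in 7 days for $3k (about 450,000 yen).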

0

6

21

Our new paper regarding external and internal information is out! [w/ Drs Dror Cohen and @TomNakai]

0

7

21

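A preliminary report of the "Survey of Attitudes Toward Neuroscience and the Use of Brain Information," compiled under the lead of Prof. Shineha (@r_shineha) of Osaka University's ELSI Center, has been released. The survey covered 2,000 general-public panelists and 108 experts, and reports views on various kinds of brain research and comparisons of perceptions between non-experts and experts, among other things.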

0

6

19

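The homunculus picture of primary motor cortex (M1) (Penfield, 1948) is updated through detailed fMRI experiments. For example, within M1, effector-specific regions (foot, hand, mouth) alternate with non-specific regions.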

2

8

19

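NeuroImage is one of the central journals read by specialists in the field. Personally, it is also the journal where I have

- published the most papers

- been involved in the most reviews (probably)

- published my first Top 1% paper

so seeing it come to this stirs deep feelings. It is a pity that doubts have arisen about its continuity, but this is probably part of a broader move to improve the relationship between researchers and publishers.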

1

1

15

Ego-Exo4D, the sequel to the large-scale open egocentric video dataset Ego4D. 5,000 skills / 1,400 hours of first-person and third-person video with annotations.

0

4

16

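A comparison of video-derived and language-derived semantic representations in the brain. They partially overlap, but on the cortex the video-derived representations sit slightly more posterior and the language-derived ones slightly more anterior.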

At long last, Dr. @sara_poppop's paper on aligned visual and linguistic semantic representations is out!

I want to briefly explain the context for Sara's work, and why I think this is the most important science that I've ever been a part of ⤵️

6

141

421

0

1

12

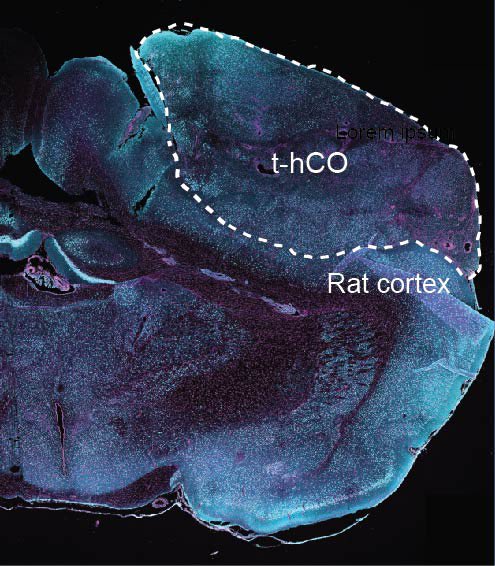

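Brain organoids made from human iPS cells were transplanted into rat brains. They integrated into neural circuits and came to take part in responses to sensory stimuli and in reward-seeking behavior. (Revah et al., 2022 Nature)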

Glad to share our latest work out in @Nature today

We show that human cortical #organoids transplanted into the somatosensory cortex of newborn rats develop mature cell types and integrate into sensory circuits and can influence reward-seeking behavior

22

285

1K

0

1

10

Story-listening fMRI data (1.5 hours x 112 subjects; 49 English, 35 Chinese, 28 French speakers) with detailed annotations

0

4

10

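Brain-wide recording of mouse neural activity during a decision-making task (a distributed collaborative experiment across 10 labs), with the data publicly released. 547 Neuropixels recordings / 194 regions / 32,784 cells. (The yield is reportedly on the low side because (1) spike sorting uses automated detection with strict criteria and (2) the whole brain was recorded, including sparse regions.)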

0

0

10

Modeling brain activity evoked by playing Counter Strike in MRI

New Research: Voxel-Based State Space Modeling Recovers Task-Related Cognitive States in Naturalistic fMRI Experiments: Complex natural tasks likely recruit many different functional brain networks, but it is difficult to predict how such…

#Neuroscience

0

1

3

0

3

9

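The relationship between the entropy of the city where you grew up and navigation ability, revealed from the gameplay of about 400,000 people (Sea Hero Quest) (Coutrot et al., Nature 2022)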

Short 🧵 on our new @nature article with #SeaHeroQuest @antoine_coutrot et al

Entropy of city street networks linked to future spatial navigation ability

Video: footage from two game levels with different levels of entropy in the paths (L: high, R: low)

7

53

195

0

2

7

Functional ultrasound imaging (Renaudin et al., Nature Methods 2022; 6.5 µm resolution at 1 s). Impressive. I am curious about volumetric imaging and how much functional organization can be seen.

How to track local microscopic neurovascular response and systemic blood flow changes over the entire brain at the same time? Functional Ultrasound Localization Microscopy performs such neuroimaging at micron scale, @PhysMedParis article in @naturemethods

10

106

315

0

1

6

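Brain activity was recorded while people watched a large number of past videos (up to about 7 years old) that they had filmed themselves, as well as videos filmed by others. Reportedly, functional organization related to memory strength, age, and content can be seen.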

New preprint w/ @Chris_I_Baker: we scanned people watching memories they recorded w/ @1SecondEveryday from up to 7 yrs ago and find a memory content map w/ subregions for memory age, strength, and content (people & place) info in medial parietal cortex!

6

66

269

0

3

7

We should make brain-to-everything (b2e)

3

0

7

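@ykamit

Yes, I agree that, as a general matter, it is preferable for performance not to drop much. However, this also depends on the distribution and size of the samples and categories, so I think a simple comparison is difficult.

Also, because natural stimuli are multidimensional and multilayered, ablation is not a cure-all, and I think approaches such as comparing performance when higher-order information is used are more promising.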

1

1

7