Thomas Fel

@Napoolar

Followers

1K

Following

9K

Statuses

1K

Explainability, Computer Vision, Neuro-AI. Research Fellow @KempnerInst, @Harvard. Prev. @tserre lab, @Google, @GoPro. Crêpe lover.

Boston, MA

Joined February 2017

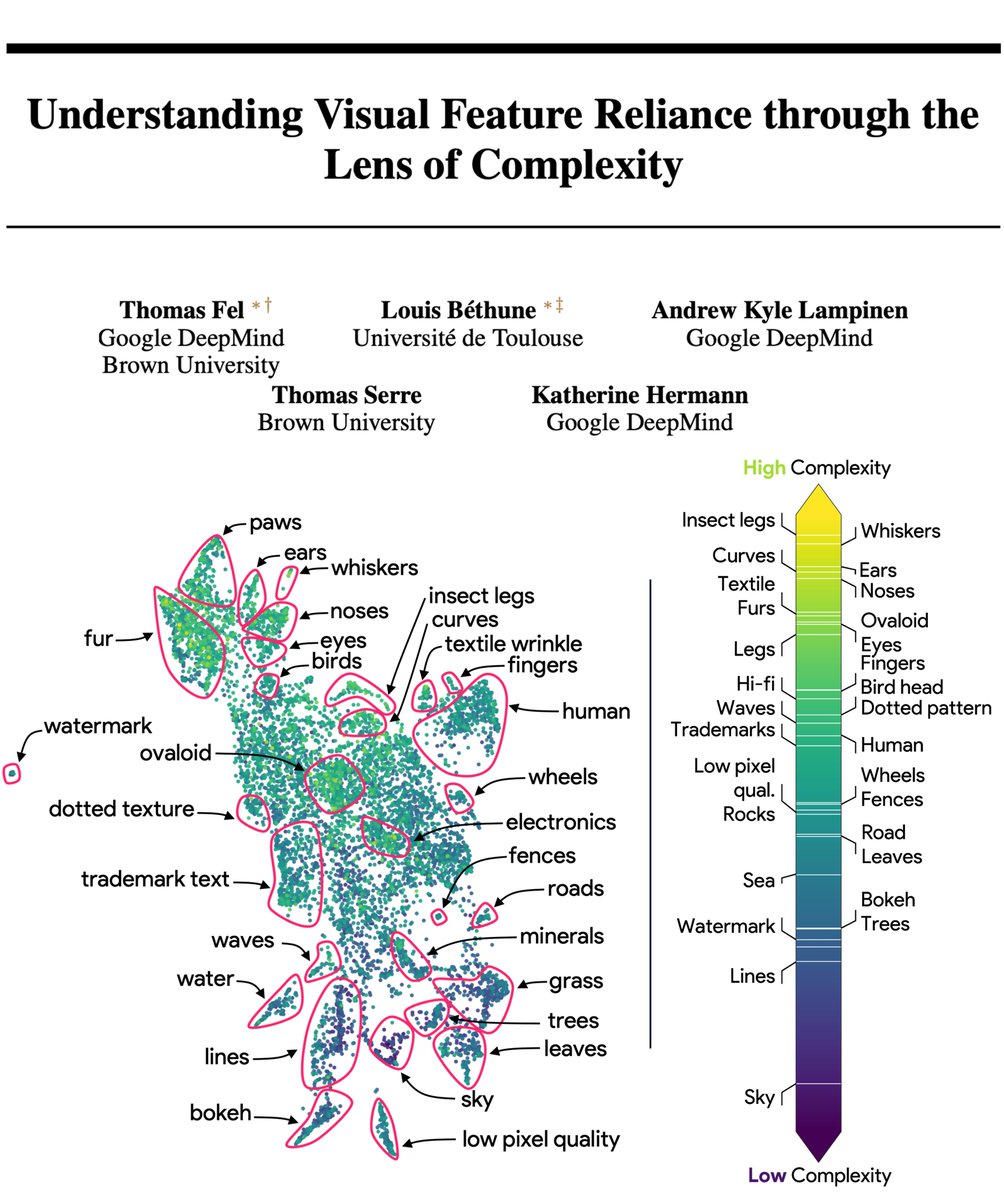

🎭Recent work shows that models’ inductive biases for 'simpler' features may lead to shortcut learning. What do 'simple' vs 'complex' features look like? What roles do they play in generalization? Our new paper explores these questions. #Neurips2024

8

108

513

RT @TimDarcet: These visuals really highlight super well the differences between DINOv2 and CLIP: the latter has these text-induced abstrac…

0

9

0

RT @hugues_va: torchdr 0.2 is out with some cool features, like Incremental PCA for processing very large datasets and support for Faiss fo…

0

19

0

RT @HThasarathan: 🌌🛰️Wanna know which features are universal vs unique in your models and how to find them? Excited to share our preprint:…

0

86

0

@norabelrose Nice trick, in pure Pytorch it's indeed better! The task is to select topk from matrix Z (N, d) then mult with D (d, k), by varying dimension of d (5_000-- 256_000) and varying topk (5--500). k = 768.

0

1

3

RT @KempnerInst: [1/4] Registration for our two-day virtual & in-person symposium in June is now open! See below for the full list of cutti…

0

8

0

RT @AndrewLampinen: New (short) paper investigating how the in-context inductive biases of vision-language models — the way that they gener…

0

16

0

RT @RemiCadene: ⭐ The first foundational model available on @LeRobotHF ⭐ Pi0 is the most advanced Vision Language Action model. It takes n…

0

167

0

RT @drlucylai: 🚨 calling all neuroscientists🧠 and AI researchers🤖: consider submitting an abstract to the 6th International Conference on t…

0

12

0

RT @DimitrisPapail: Prediction: The main limit to recursive self-improvement will be the ability to generate problems at the frontier of cu…

0

46

0

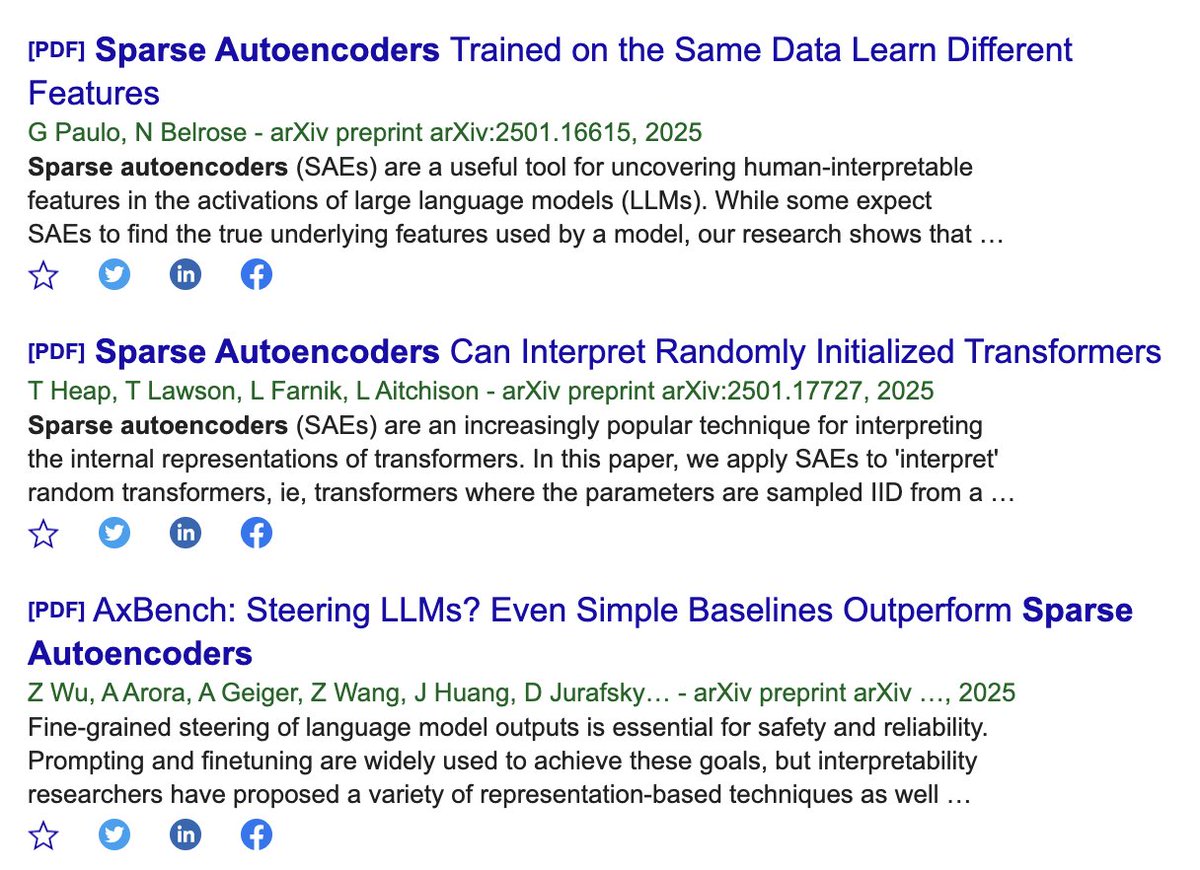

@norabelrose @kzSlider @livgorton @ArtirKel @aryaman2020 @lucyfarnik On arXiv next week. The core idea is that our dictionary is a convex combination of data points, meaning each dictionary element is in conv(data) and the reconstructions in cone(data). Its an old idea called archetypal analysis from Breiman and Cutler

2

0

4

@norabelrose @livgorton @ArtirKel @kzSlider @aryaman2020 @lucyfarnik Same here, and we propose to "stick" to the data a bit more (spoiler: it works)

1

0

1

RT @alon_jacovi: Are you wondering if concept explanations are any good?? Or just interested in how to evaluate them with automated simulat…

0

1

0

@JulienBlanchon @MatthewKowal9 @HThasarathan @CSProfKGD We use the pytorch implem is dispo in Horama (serre lab github), with some tricks on top 🤗

1

0

4