Jiachen "Tianhao" Wang

@JiachenWang97

Followers

173

Following

78

Statuses

50

PhD student @ Princeton. Data Attribution, Data Selection, Privacy

Princeton, NJ

Joined August 2022

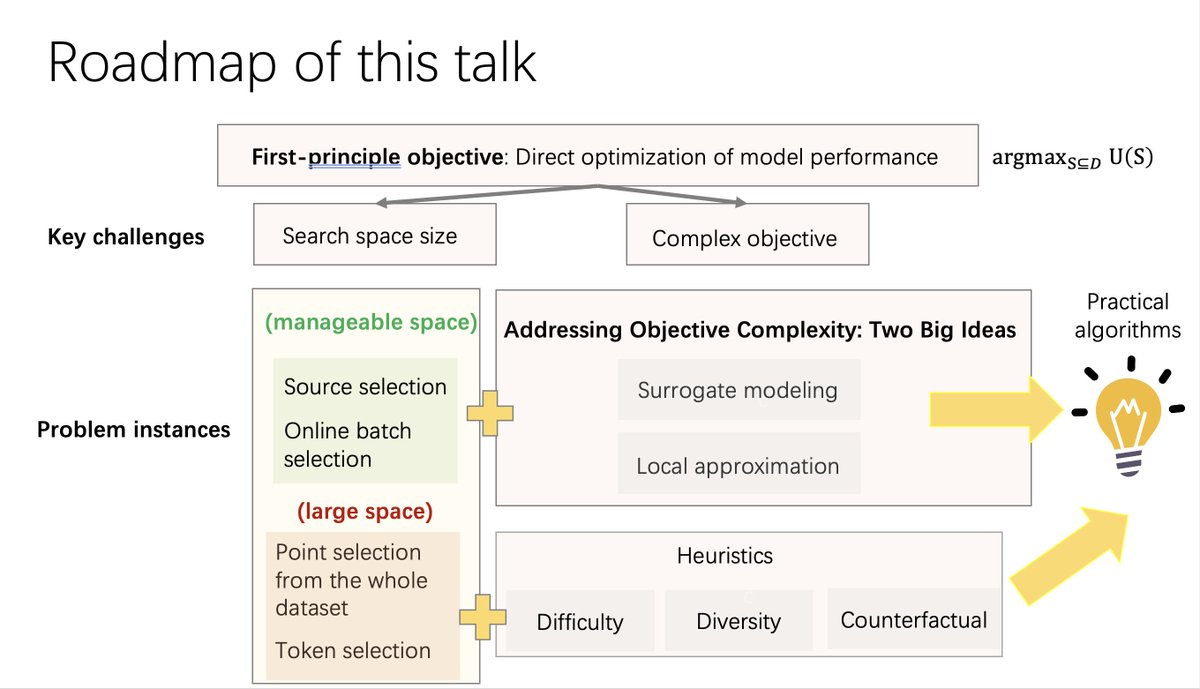

Here are all three parts of the slides for the "Advancing Data Selection for Foundation Models: From Heuristics to Principled Methods" tutorial at NeurIPS yesterday! Part 1 (Intro + Empirical Methods): Part 2 (Principled Methods): Part 3 (Foundations): We will also summarize all relevant materials (including those suggested by the audience) and publish them after the conference. Thanks to everyone who attended yesterday!

Here’s my slide deck from the tutorial. Thanks to everyone who attended yesterday - it was a super rewarding process to prepare for this!

0

1

13

RT @ruoxijia: Submission deadline AoE today for our Workshop on Data Problems for Foundation Models! Look forward to your contributions!

0

3

0

RT @reds_tiger: Announcing the ICLR 2025 Workshop on Data Problems for Foundation Models (DATA-FM)! We welcome submissions exploring ALL AS…

0

2

0

I will be presenting our NeurIPS spotlight work on gradient-based online data selection for LLMs today (12/12) at 4:30-7:30pm PST (East Hall #4400). Big thanks to my amazing collaborators @ruoxijia @TongWu_Pton @dawnsongtweets @prateekmittal_ Please feel free to come by and discuss any data-related research problems! #NeurIPS2024

1

8

54

RT @KoMyeongseob: Excited to attend #NeurIPS2024 in Vancouver 🇨🇦! I will be presenting our work: "Boosting Alignment for Post-Unlearning T…

0

6

0

Just arrived in Vancouver! @ruoxijia @lschmidt3 and I will present a tutorial on data selection for foundation models tomorrow (12/10) at 1:30pm in West Ballroom C. Come by and say hi! And feel free to DM me to discuss any data-related research!

Join us Tuesday at 1:30 PM PT at #NeurIPS2024 for our tutorial on data selection for foundation models! With @lschmidt3 & @JiachenWang97, we'll cover principled experimentation, selection algorithms, a unified theoretical framework, and open challenges. Hope to see you there!

0

0

5

RT @profnaren: Small matter of @virginia_tech pride! Google Scholar turns 20 today 🎉🎉🎉 Kudos to its creators, Anurag Acharya and Alex Ver…

0

9

0

Flying to San Diego for the Rising Stars in Data Science workshop on Nov 14-15! @HDSIUCSD @StanfordData @DSI_UChicago If you are at UCSD, feel free to DM me and chat about data-related research problems! #MachineLearning #DataScience

0

0

9

RT @si_chen0921: If you're interested in LLM fact tracing and information retrieval, join me as I present our work, FASTTRACK at Session F…

0

3

0

Thanks so much for featuring our research on scaling up principled data attribution techniques. Data attribution is important for ML interpretability, data curation, and fairly compensating data providers. New papers on this line are coming soon!

Shapley values can help you understand how data points contribute to the output. In addition to this principle, we have In-Run Data Shapley, a paper by @JiachenWang97 @prateekmittal_ @dawnsongtweets @ruoxijia Here is my blog ex - inspired by - @joemelko

1

1

6

RT @ruoxijia: New paper led by @feiyang_ml on optimizing LLM pre-training data mix - one of the most fun projects! Takeaways: (1) Optimal…

0

3

0

RT @Fanghui_SgrA: Our fine-tuning workshop@NeurIPS’24 @neurips24fitml has the following amazing speakers and panelists! Welcome to submit y…

0

7

0

RT @hjy836: 📢Announcing the first GenAI4Health Workshop at #NeurIPS2024 where we invite speakers and participants from #health, #AI_safety,…

0

16

0

RT @thegautamkamath: "Position: Considerations for Differentially Private Learning with Large-Scale Public Pretraining," with @florian_tram…

0

30

0

It's in 10min! Check out our poster in today's morning session at #2517!

Excited to be attending #ICML next week! I will give an oral presentation on our work about the theoretical foundation of Data Shapley for data curation. Happy to discuss data curation, data attribution, and all related topics. Feel free to DM for a coffee chat in Vienna! ☕️

0

0

9