Chris Cundy

@ChrisCundy

Followers

1K

Following

549

Media

79

Statuses

359

Research Scientist at FAR AI. PhD from Stanford University. Hopefully making AI benefit humanity. Views are my own.

San Francisco, CA

Joined July 2017

Life update: I'm excited to announce that I defended my PhD last month and have joined @farairesearch as a research scientist! At FAR, I'm investigating scalable approaches to reduce catastrophic risks from AI.

16

4

300

How can you scalably form a posterior over possible causal mechanisms generating some observational data? Find out at our NeurIPS poster tomorrow (Tuesday), 8:30-10am Pacific time! Joint work with @StefanoErmon and @adityagrover_ (1/13).

2

4

44

I am a big fan of Koray Kavukcuoglu's presentation about @DeepMindAI's research: both for the phenomenal deep learning carried out there and the use of the 'thinking face' emoji to represent the discriminator in a GAN #ICLR2018

0

4

29

@BlackHC Good question! I doubt that transformers have enough capacity to solve any significant Project Euler problems. I ran a quick experiment asking PE problem 1 with the numbers swapped: both GPT4 and ChatGPT failed to answer correctly.

2

2

23

GPT2 models fine-tuned on SequenceMatch can recover from errors and achieve a better MAUVE score on language modelling compared to MLE-trained models. Check out the paper for all the details. Next: bigger models! 🚀 Work done with the fantastic @StefanoErmon (8/8).

1

1

22

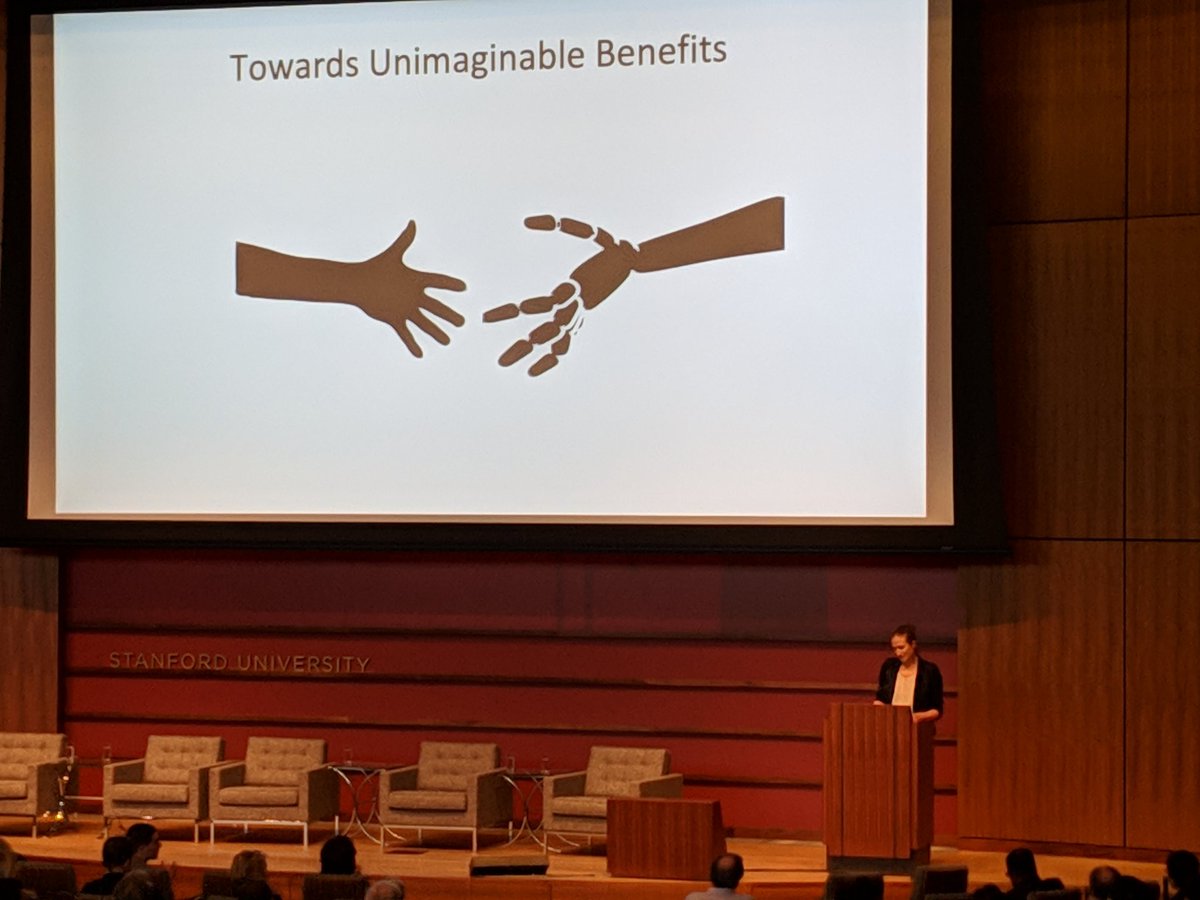

Feeling very inspired after @EmmaBrunskill's talk at the #StanfordHumanAI launch: using rigorously optimal decision-making methods in small-data domains!

1

10

17

The pace of improvement in style transfer/domain adaptation is very impressive: just a few years ago I would have said these sorts of results would be incredibly hard to achieve. The latest: StarGANv2 (Choi et al.). #StarGANv2

0

4

13

I gave some comments at the DoD Defense Innovation Board Public Listening Event at Stanford today #dibprinciples

1

2

13

Three hours before the abstract deadline for #NeurIPS2019 and I got an ID in the 8000s: looks like this year will definitely hit a new record for number of submissions! #AIhype

0

1

10

Lots of interest in diffusion on discrete spaces. I think Aaron's approach is the most principled I've seen. Plus, lots of applications in conditional generation!

Announcing Score Entropy Discrete Diffusion (SEDD) w/ @chenlin_meng @StefanoErmon. SEDD challenges the autoregressive language paradigm, beating GPT-2 on perplexity and quality! Arxiv: Code: Blog: 🧵1/n

1

0

7

@chrisalbon Although L2 regularization is equivalent to weight decay for SGD, it's not the same thing with adaptive optimizers. So I wouldn't say this card correctly characterizes 'weight decay' as it is typically understood today. see

1

1

7
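The distinction drawn in the weight-decay reply above can be checked numerically. The following is a minimal sketch and not anything from the thread itself: the toy quadratic loss, the `TARGET` vector, the step sizes, and the `scale` helper (a stand-in for the per-coordinate rescaling of an RMSProp/Adam-style optimizer on its first step) are all assumptions made for illustration.

```python
import numpy as np

TARGET = np.array([1.0, -2.0])  # hypothetical optimum of a toy quadratic loss


def grad(w):
    """Gradient of the toy loss 0.5 * ||w - TARGET||^2."""
    return w - TARGET


def scale(g, eps=1e-8):
    """Per-coordinate rescaling, as on a first RMSProp-like step (v = g**2)."""
    return g / (np.sqrt(g ** 2) + eps)


w0 = np.array([0.5, 3.0])
lr, lam = 0.1, 0.01  # illustrative learning rate and decay coefficient

# Plain SGD: L2 penalty folded into the gradient vs. decoupled weight decay.
sgd_l2 = w0 - lr * (grad(w0) + lam * w0)
sgd_wd = w0 - lr * grad(w0) - lr * lam * w0

# Adaptive step: with an L2 penalty the decay term is rescaled together with
# the gradient; with decoupled decay it is applied to the weights directly.
ada_l2 = w0 - lr * scale(grad(w0) + lam * w0)
ada_wd = w0 - lr * scale(grad(w0)) - lr * lam * w0

print("SGD updates match:     ", np.allclose(sgd_l2, sgd_wd))
print("Adaptive updates match:", np.allclose(ada_l2, ada_wd))
```

Under these assumptions the two SGD updates coincide, while the two adaptive updates do not, which is the gap the reply points to.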

Great fireside discussion from Prof Phil Tetlock on prediction and using ML for forecasting #EAGlobal

0

0

8

Great talk yesterday from @janleike on recursive reward modelling for developing AI that reliably and robustly carries out the tasks that we intend: check out the paper.

0

0

7

@goodside You can find a lot of these by looking through the vocabulary and spotting weird-looking tokens. For instance, I think ' IsPlainOldData' is another glitch token. Here, GPT4 claims it cannot see the token at all.

0

0

6

Very excited to be at the launch of the new Stanford Institute for Human-Centered AI! #StanfordHumanAI

0

0

6

The trilemma for the permissibility of ethical offsetting: which premise is wrong? #EAGlobalSF17

0

0

5

There's a subtle flaw lurking at the heart of the SDE formulation of diffusion models (as they are typically implemented). Check out Aaron's work exploring this and the principled ways we can fix it -- and get some SoTA images! 🔬🧪👉

Presenting Reflected Diffusion Models w/ @StefanoErmon! Diffusion models should reverse an SDE, but common hacks break this. We provide a fix through a general framework. Arxiv: Github: Blog: 🧵(1/n)

0

0

5

Very excited to be at @JHUAPL for the "Assuring AI: Future of Humans and Machines" conference! Stop by the tech demo to see some upcoming work on fairness and RL.

0

0

5

@StefanFSchubert @robinhanson If I recall correctly, there's also an up/downvote system on the app where conferencegoers can indicate they'd prefer some questions to be asked, though the moderator isn't obliged to ask the highest-voted.

0

0

4

Improving to 60% on GPQA from the previous SoTA of 54% is *really* impressive -- the GPQA questions are very difficult! (That is, assuming no test-set leakage.)

Introducing Claude 3.5 Sonnet—our most intelligent model yet. This is the first release in our 3.5 model family. Sonnet now outperforms competitor models on key evaluations, at twice the speed of Claude 3 Opus and one-fifth the cost. Try it for free:

0

0

4

Wild new paper collects a set of naturally occurring adversarial examples for ImageNet classifiers: DenseNet gets only 2% accuracy! #RobustML

0

2

4

@AlexGDimakis @raj_raj88 It's been argued that a big contributing factor for GPT3's poor maths ability is its tokenizer, which is strange/bad for numbers--i.e. a separate token for '809' and '810' but not for '811'. See and

0

0

3

@sangmichaelxie You should start it up! A lot of information on the internet is pretty old (even advocating using sigmoids, not mentioning batchnorm, etc).

0

0

3

The @OpenAI Five agent that played yesterday and convincingly beat world-champion players had been trained continuously since July 2018 😮

0

0

3

The past year has seen an incredible amount of progress in NLP: particularly with the development of the Transformer architecture. @OpenAI's recent model is mind-bogglingly good.

We've trained an unsupervised language model that can generate coherent paragraphs and perform rudimentary reading comprehension, machine translation, question answering, and summarization — all without task-specific training:

0

1

3

Elvis, 'Always on My Mind', translated into Beethoven: Original for comparison: It seems like the classical version sometimes misses the melody, though I'd expect this to get better as it's trained on more songs. #deeplearning

0

0

2

Very cool stuff: domain translation with exact likelihoods.

📢 Diffusion models (DM) generate samples from noise distribution, but for tasks such as image-to-image translation, one side is no longer noise. We present Denoising Diffusion Bridge Models, a simple and scalable extension to DMs suitable for distribution translation problems.

0

0

2

@qntm There are tidal forces (0.384 μg/m). If you put two things in random spots in the ISS, they will start to move relative to each other.

0

0

2
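The tidal-force figure in the reply above can be sanity-checked with a short calculation. This is a rough sketch under stated assumptions and not the author's own derivation: it assumes a circular orbit at about 400 km altitude (a nominal value chosen here), and uses the standard result that, in the frame co-rotating with the orbit, the radial acceleration gradient is 3·GM/r³.

```python
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # mean Earth radius, m
ALTITUDE = 4.0e5           # assumed ISS altitude (~400 km), m
G0 = 9.80665               # standard gravity, m/s^2

r = R_EARTH + ALTITUDE
omega_sq = GM_EARTH / r ** 3        # squared orbital angular rate, 1/s^2
radial_gradient = 3.0 * omega_sq    # m/s^2 per metre of radial separation
micro_g_per_m = radial_gradient / G0 * 1e6

print(f"Radial tidal gradient: {micro_g_per_m:.3f} micro-g per metre")
```

The result is of the same order as the value quoted in the reply. The exact number depends on the altitude and the value of g assumed.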

@StephenLCasper Do you think there's promise to methods that make fine-tuning harder/impossible, like (but extended to LLMs)? This could let you 'lock in' those superficial changes.

0

1

2

Interesting podcast outlines the state-of-the-art in clean meat. I'd be very interested to learn more about why @open_phil disagree with @GoodFoodInst on plausibility of clean cultured meat.

0

0

2

Media panel: big fans of @OurWorldInData & choosing appropriate level of nuance for each medium #eaglobalsf17

0

0

2

Great work giving tight results for how the problem influences the choice of preconditioner for gradient methods.

Choosing the optimal gradient algorithm depending on the geometry is important. Interestingly, how to do it is in seminal results about the Gaussian Sequence model. Come to our oral at 4:50pm in West Exhibition Hall A! #NeurIPS2019.

0

0

2

A continuous relaxation of sorting opens up interesting end-to-end training of traditionally modular algorithms: check out the paper!

Our @iclr2019 paper proposes NeuralSort, a differentiable relaxation to sorting. Bonus: new Gumbel reparameterization trick for distributions over permutations. Check out our poster today at 4:30! Code: w/ @tl0en A. Zweig @ermonste

0

0

2

@sangmichaelxie I think that the various aggregators miss enough good things (and to some extent you should be reading the unpopular things) that it's worth reading all of the relevant arxivs like

0

0

2