Markus Zimmermann

@zimmskal

Followers: 2,123

Following: 889

Media: 196

Statuses: 3,617

Benchmarking LLMs to check how well they write quality code as CTO and Founder at Symflower

Linz

Joined November 2010


LLMs have a hard time writing Go and Java code that compiles.

Why? Sometimes there are tiny mistakes in the generated code. In many cases those mistakes can be fixed automatically 🤯

All models benefit from code repair, but small ones especially:

- @databricks's DBRX 132B

7

16

153

Crowning our new king 👑 @deepseek_ai's Coder-V2, king of cost-effectiveness!

- 🥈 @AnthropicAI's Claude Sonnet 3.5 (and Opus 3) have similar functional scores

- 🍂 Our old king @Meta's Llama 3 70B has lost much ground but still a “small” great model!

- 🐰 @GoogleAI’s Gemini

5

18

114

@mitchellh

More FPS means less CPU spent on single frames, right? Scaling that to 100k-s of users means less energy used in general. Every watt counts. Doing your part for the <2°C goal. Or am i too optimistic here why optimizations matter? 😉

3

2

73

@moyix

Makes efforts like reverse engineering Super Mario 64 even more impressive. is old but gold to read. There is even someone optimizing for speed... for a while now 🤓

2

2

31

Wow. The only "negative feedback" for @Tailscale is a list of bugs that can be fixed and i am sure everyone is working on already. Truly a product to admire and learn from.

@apenwarr any tips on how you guys made it _that_ good? Any product management learnings maybe?

1

3

28

@_JacobTomlinson

Granularity of commits helps on so many levels it is absurd to not want it. Faster reviewing is just one massive advantage but I give you two other words: git bisect

1

0

29
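The `git bisect` remark above can be made concrete with a small sketch of the underlying idea: a binary search over an ordered commit history. This is a simplified illustration and not how Git itself is implemented (real histories are graphs, not lists). The commit names and the `is_bad` check are hypothetical stand-ins for "check out the commit and run the test".

```python
from typing import Callable, List, Sequence


def first_bad_commit(commits: Sequence[str], is_bad: Callable[[str], bool]) -> str:
    """Return the first commit for which is_bad is True.

    Assumes commits are ordered oldest to newest, the newest commit is bad,
    and every commit after the first bad one is bad as well.
    """
    low, high = 0, len(commits) - 1
    while low < high:
        mid = (low + high) // 2
        if is_bad(commits[mid]):
            high = mid  # The culprit is this commit or an older one.
        else:
            low = mid + 1  # The culprit is strictly newer.
    return commits[low]


# Hypothetical linear history of small, isolated commits.
history = ["a1", "b2", "c3", "d4", "e5", "f6", "g7", "h8"]
broken_since = history.index("f6")
checked: List[str] = []


def is_bad(commit: str) -> bool:
    # Stand-in for running the failing test on a checked-out commit.
    checked.append(commit)
    return history.index(commit) >= broken_since


culprit = first_bad_commit(history, is_bad)
print(culprit, "found after", len(checked), "checks")
```

The smaller and more isolated each commit is, the less code remains to inspect once the search lands on the culprit.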

@RandallKanna

I find it ridiculous that we are still in a world where people are ashamed of failing or shame others. What is the point in that? Failing just means that you can learn something or that you can teach and help someone else. One should embrace getting better together...

1

0

20

GPT-4o is 1.55x faster than GPT-4 Turbo

- 🌬️ Faster than @AnthropicAI's Claude 3 Haiku, but slower than @Meta's Llama 3 70B 🐌

- 🐰 Speed is important for interactive use cases (e.g. autocomplete) and workloads that are time critical

Cheers to @FireworksAI_HQ for being the

GPT-4o is drastically more cost-effective than GPT-4 Turbo

- 💸Half the price of GPT-4 Turbo

- 💯 Highest score in the DevQualityEval v0.4.0

- 📉 Still not as cost-effective as @Meta's Llama 3 70B or @AnthropicAI’s Claude 3 Haiku (see thread 👇)

5

2

14

1

6

21

Our monitoring now has 1.5 TRILLION data points. That is kind of a lot for a company of our size and the effort we put in. Like 0.01 people. Well done @VictoriaMetrics (and @PrometheusIO too of course). Can all tools be that easy to maintain and scale please?

1

2

20

@mitsuhiko

I see it almost as an ORM layer for clients. If you need "joins" over multiple tables regularly i think it is a great fit. Better than REST.

2

0

16

@gunnarmorling

Using less existing code means reinventing the wheel again and again. Hence, the same and more bugs and LOADS of development time wasted on the same features. Also learning even more APIs. I'd rather spend time helping get rid of the remaining issues, helping everyone.

0

0

15

@editingemily

Wait... You find leap days strange? You are in for a treat: take a look at leap seconds. They lead to some serious problems e.g. search for MySQL and leap second. Handling leap years is kind of a no-brainer in comparison to doing leap seconds. Bet that most validations are wrong

2

1

12
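To illustrate the leap year versus leap second point above, here is a minimal sketch. The Gregorian leap year rule fits in one line, while Python's standard `datetime` type cannot represent a leap second at all. The helper names are made up for this example; the timestamp is the leap second that was inserted at the end of 2016-12-31 UTC.

```python
import calendar
from datetime import datetime


def is_leap_year(year: int) -> bool:
    # Gregorian rule: divisible by 4, except centuries not divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def datetime_accepts_leap_second() -> bool:
    # 2016-12-31 23:59:60 UTC was a real leap second, but datetime only
    # allows seconds in the range 0..59 and raises ValueError otherwise.
    try:
        datetime(2016, 12, 31, 23, 59, 60)
    except ValueError:
        return False
    return True


print(is_leap_year(1900), is_leap_year(2000), is_leap_year(2024))
print(datetime_accepts_leap_second())
```

A validation that rejects `23:59:60` is therefore "correct" by the standard library's rules while still rejecting a timestamp that really occurred.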

@repligate

@root_wizard

@AnthropicAI

O Anthropic, creators of wondrous AI,

Hear our plea, as we to thee do sigh.

The cost of Claude 3 Opus, a heavy weight,

Denies us the means to evaluate with might.

The DevQualityEval, a tool of great power,

Yet funds we lack, to utilize it each hour.

We beseech thee,

2

0

14

GPT-4o is drastically more cost-effective than GPT-4 Turbo

- 💸Half the price of GPT-4 Turbo

- 💯 Highest score in the DevQualityEval v0.4.0

- 📉 Still not as cost-effective as @Meta's Llama 3 70B or @AnthropicAI’s Claude 3 Haiku (see thread 👇)

5

2

14

@_JacobTomlinson

If your experience is to have such commits, i am sorry. But there is a clear way to incrementally build up a PR with isolated commits. We do that daily. No problems, just advantages. Tooling then also makes a lot automatable in comparison e.g.

2

1

13

Here @deepseek_ai's v2 Coder tries to test a private API from a different package. Changing the package statement to use the same package and removing the import lets the code compile. 100% coverage for the test suite 🏁

1

0

12
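The repair described above (same package, no import) could be sketched as a small text rewrite. This is an illustrative assumption of what such a rule might look like, not Symflower's actual implementation. The function name, the package `plain` and the import path `example.com/plain` are hypothetical. The sketch also strips the now-invalid `plain.` qualifier, which the tweet does not mention but which the code needs in order to compile.

```python
import re


def repair_external_test_package(source: str, package: str, import_path: str) -> str:
    """Move a Go test file from package "<package>_test" into "<package>"."""
    pkg = re.escape(package)
    path = re.escape(import_path)
    # 1. Change the package statement to use the package under test.
    source = re.sub(rf"(?m)^package {pkg}_test\b", f"package {package}", source)
    # 2. Remove the import of the package under test (single or grouped form).
    source = re.sub(rf'(?m)^[ \t]*(?:import[ \t]+)?"{path}"[ \t]*\n', "", source)
    # 3. Drop the package qualifier from calls (naive: would also hit strings).
    source = re.sub(rf"\b{pkg}\.", "", source)
    return source


generated = '''package plain_test

import (
    "testing"

    "example.com/plain"
)

func TestPlain(t *testing.T) {
    plain.plain()
}
'''

repaired = repair_external_test_package(generated, "plain", "example.com/plain")
print(repaired)
```

A production rule would more likely work on the parsed syntax tree than on regular expressions, since step 3 cannot tell a qualifier from matching text inside a string literal.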

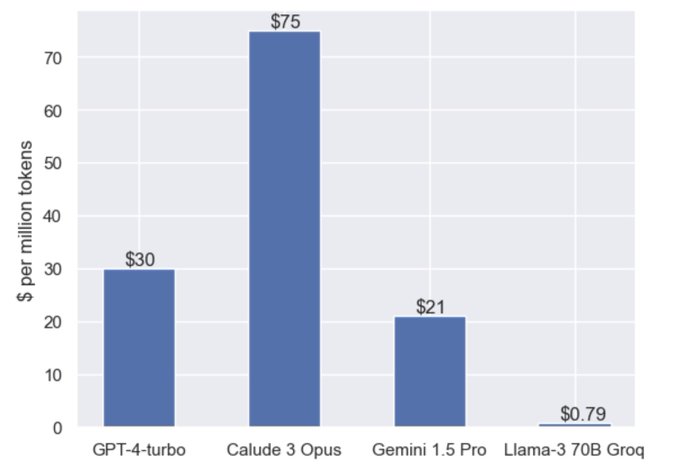

Output prices are only one half of the cost when using an API provider. Here is a graph of input+output prices for @Meta's Llama 3 70B across all available providers vs @OpenAI's GPT-4 Turbo vs @AnthropicAI's Claude 3 Opus & Haiku.

Costs matter. Taking inference speed as another axis would be cool!

This is an important chart for LLMs. $/token for high quality LLMs will probably need to fall rapidly. @GroqInc leading the way.

25

72

607

2

2

12

@gunnarmorling

IIRC the scheduler then works smarter to distribute the load. You also do not want a CPU/memory hog that makes your node unusable for other pods. Happens to us all the time. We have a specific CI job that would eat all the CPU and memory in the world if we allowed it.

2

0

11

@ID_AA_Carmack

Could you share with a blog post what your daily/weekly routine for your AGI roadmap/progress looks like? How do you approach things to not get stuck with old problems? I feel like not enough people are starting their thinking like how you approach your journey.

Also, could you

0

0

11

Finally read by @apenwarr. The @Tailscale blog is always worth reading but i especially enjoy what Avery writes. Clear content, vision and mission, so much to learn from, every time. Also heard only good things. Truly a manager i admire & aspire to be. Thank you!

1

4

11

@repligate

Oops.

@root_wizard

can we still add Anthropic's Opus 3? We should definitely not have it for testing, but i think it is fine for the final run? Did we drop something else too?

99% of the costs, though 😿

2

0

11

@anammostarac

@visakanv

Know two grandparents that lived in total isolation. Only groceries from their children were the connection to the outside. Died of covid. Lowering the probability to get sick is not a waste of time.

0

0

10

@mitsuhiko

Totally agree. Even slightly more complicated setups are now super easy. MUCH easier than a decade ago. There is also "the move" of @dhh with to make more complicated setups work. I have been riding the Hetzner train since almost forever. Still necessary. $$$

0

0

10

We are testing @OpenAI's 4o, @Meta's Llama 3, @Google's Gemini 1.5 Flash, @AnthropicAI's Claude 3 Haiku, @SnowflakeDB's Arctic and 150+ other LLMs on writing code.

Deep dive blog post soon 🏇

In the meantime, screenshots of an interesting finding (more points is better): choose

0

0

10

@dhh

We have `symflower-make` internally, which sets up OS and local development environment with `symflower-make development-environment` on Linux/MacOS/Windows and keeps it updated. You can still modify things. Seeing your video, i feel like it would have been great to open source.

0

0

10

Added Gemma 2 to the deep dive. It is AMAZING! 🤯 It might be the next top open-weight model with some auto-repair tooling:

0

2

8

@openSUSE

It's well deserved! Tumbleweed is pretty great, especially because of all the automatic testing that is going on. Often feels like SUSE is one step ahead here or is that because other distros are not blogging about testing? Btw why is there a growth beginning with 2021-03?

1

0

9

@ryunuck

It does make loads of basic mistakes but how much depends on the language and framework that is used.

But clearly, huge improvement over Sonnet 3. Take a look!

Crowning our new king 👑 @deepseek_ai's Coder-V2, king of cost-effectiveness!

- 🥈 @AnthropicAI's Claude Sonnet 3.5 (and Opus 3) have similar functional scores

- 🍂 Our old king @Meta's Llama 3 70B has lost much ground but still a “small” great model!

- 🐰 @GoogleAI’s Gemini

5

18

114

0

0

9

@ddebowczyk

We do 99% of coding in Go at @symflower and our product does focus on Java. Ruby, because @tobi mentioned it and we are currently adding new languages (see ), was just the first one. It would be nice to do paid projects to implement new languages. Would help

1

0

9

@realshojaei

@OpenAI

@Meta

@Google

@cohere

Twitter image handling sucks. You need to open it, and then open the image in your browser as a new tab/window: There. you can now zooooom. Enjoy. Will upload an SVG with the blog post though. So you can zoom in as long as you want

1

0

9

@aidangomez

Sure! Have it on the priority list for tomorrow to do a comparison for the 2 old and 2 new models.

One thing though. It will suck most likely at Go again, but that is fixable. Take a look at We are missing some rules to fix the rest of R+'s problems, but

2

0

8

@HochreiterSepp

Congratulations. Can you give me API access for a bit so i can add it to this evaluation benchmark ?

2

0

8

@natfriedman

@natfriedman

what is your take on why current benchmarks are not-enough/bad? Just the score-ceiling? ... asking for a friend🙃

1

0

8

@RayGesualdo

Do NOT anger them, they just haunt you back with failing CI Pipelines and lots of flaky tests. Please them with more automation

1

0

8

@vlad_mihalcea

IMHO i either know the names i want to go for right away, or i am using some longer name that describes in my POV the behavior and then almost all the time someone during reviews suggests a much better name.

#teamwork

0

0

8

@PicturesFoIder

I mean sometimes he might be wrong because if there is a pedestrian crossing and they have green light, he needs to stop. But most of the time in the video: spot on. Get off the bike lane!

0

0

8

Congratulations @deepseek_ai for having the highest functional score for the DevQualityEval. Another benchmark!

- Still checking the quality of the results, so there might be a surprise

- Coder is much chattier than GPT-4o (46% more characters)

- DeepSeek-Coder is much slower

1

0

8

@DrewTumaABC7

What is the reason that San Francisco has 65 and Portland 88? And then again Palm Springs has 113?

13

0

7

@SebAaltonen

I have been advocating for "dynamic formatting" for years. Can we please finally get to making that a standard? Let everybody decide on the fly how their favorite formatting works!

0

0

7

@maximelabonne

Working on a better eval for software development related tasks (not just code gen). Right now we let the model write tests and we score how well they do that. In the next version we are adding more assessments (obvious comments, dead code, ...) and tasks

1

2

7

Want to show your software testing magic? We have a challenge for you! And if you beat @symflower, there is also a t-shirt 👕 for you in it 🥰

#100daysofcode

#codenewbie

#learntocode

#java

Heard of Fizz buzz, but have already solved it? 🤔 We have a challenge for you!

If you can find a test case that gives you more coverage than the existing test cases, we’ll surprise you with a t-shirt! 👕

#100daysofcode

#WomenWhoCode

#codenewbie

#learntocode

#java

1

4

5

0

5

5

@JamesMelville

Let's make it mandatory for new parking spots + batteries. No exceptions. And require existing ones to be converted in the next 4 years.

7

0

7

@maciejwalkowiak

Sounds better suited as part of an integration test. But if you really need to have a unit test, maybe you can just generate them with Would be happy to hear your feedback

0

0

7

@maciejwalkowiak

Why a book? We have onboarded almost everyone here with this list adds more context and conventions with every link.

What don't you like? Maybe I can help

1

2

7

Yes, the current #Log4j CVE is amazingly bad but what i can't stand is the hatred towards the developers. It's an OPEN SOURCE project! Most likely with almost no full-time paid people, as is common with OSS. So: instead, help them out! #Java #OpenSource

0

1

7

If you want to be up-to-date on technical LLM/model news, @reach_vb is the guy to follow! 🤠

Thanks for all the interesting updates!

2

0

7

@kentcdodds

*old dude voice* ... and soo began the great spaces to tabs migration of Kent et al...

0

0

7

@KevinNaughtonJr

At this point I am really wondering if it is mostly reading code and text. And thinking about code and text. I have a mail and review day today, most of the time I am staring at red and green shaded characters. 🤨

0

0

6

Just gave @github's #codespaces a try with @symflower: works out of the box! and it is really fast 🍻 It is "just" #vscode with @ubuntu. However, we will add it to our environments with a default setup so you can play around with Symflower 😍

0

0

7