许哲

@xuzhe42

Followers: 818

Following: 26

Media: 5

Statuses: 52

Joined February 2023

🚀 Have you ever wondered how QQQAI works? 🤔 Let's take a closer look to learn more! 📊💡👇

1

1

3

🎉 Congratulations to all $QQQAI holders! We just found that the $QQQAI liquidity pool is available on @Uniswap. All holders can now explore the potential of $QQQAI by clicking the link below 👇👇👇 https://t.co/Almqn6QOrT

app.uniswap.org

Swap crypto on Ethereum, Base, Arbitrum, Polygon, Unichain and more. The DeFi platform trusted by millions.

0

2

7

🚀 $QQQAI Subscription Phase II goes LIVE in just a few hours! Don’t miss your chance. See more details 👇 https://t.co/PtcXdvfZ4e

0

1

1

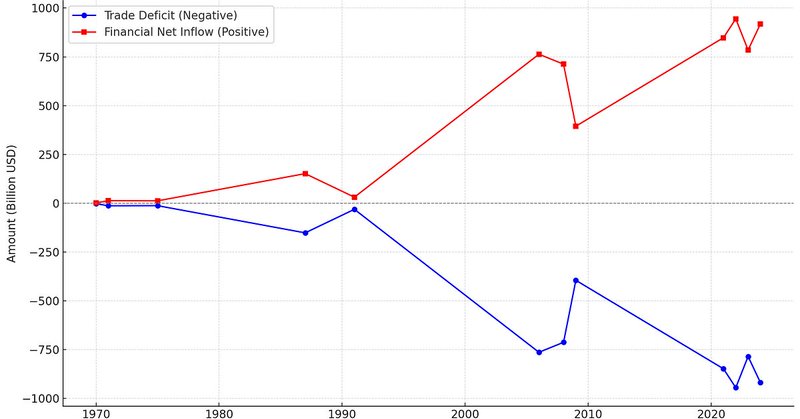

https://t.co/O4bAuUc1a3 Translated from my answer on Zhihu

medium.com

Because America isn’t a single entity — it’s a large country made up of different classes with competing interests.

2

0

13

Instead of spending sleepless nights trying to predict the market, I built a system that benefits from volatility and chaos. Learned from The Fat Tail and Dynamic Hedging. Thanks to @nntaleb

#Antifragile is the key

0

1

3

April 3, 2025: NDX -5.41% vs. QQQAI +3.23% → outperformed by 8.64%
April 4, 2025: NDX -6.07% vs. QQQAI +5.46% → outperformed by 11.52%
Two days of gains beat the entire past year. Did I secretly short the market? Nope. I follow @nntaleb’s philosophy of Antifragility.

0

0

4

I manage a long-only Nasdaq-100 return-enhanced fund. It typically adds ~20% alpha in bull markets. But in the recent crash? We did even better. #Antifragile

0

1

9

@elonmusk Warren Buffett proposed a great solution. You just pass a law that says that any time there's a deficit of more than 3% of GDP, all sitting members of Congress are ineligible for re-election.

2K

6K

43K

Shocking ending to the Trump vs Biden debate, what most people didn't see. This says it all.

4K

32K

135K

Complexity artists claim to make the unpredictable predictable. I prefer to work on making the unpredictable irrelevant.

51

250

2K

“a much faster rate of change” is maybe my single highest-confidence prediction about what a world with AGI in it will be like.

107

96

1K

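[E2M Research AMA, Issue 0602] The future is unpredictable vs. investing requires prediction: how should investors make decisions? This Friday at 8 p.m., join us to discuss the underlying logic of decision-making in the face of an uncertain future. Speakers: Odyssey @OdysseysEth, Zhen Dong @zhendong2020, Peicai Li @pcfli. Special guest: 许哲 @xuzhe42. Space link: https://t.co/eCu01mZMUB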

2

5

5

I attempted to implement a purely decentralized options contract using GPT-4, and this experience gave me a very concrete understanding of GPT-4's current capabilities. https://t.co/kmZ5MZqjDC

1

2

7