Pranav Mistry

@pranavmistry

Followers

94K

Following

3K

Statuses

5K

Wanderer, Wonderer. Creator.

Somewhere out there

Joined June 2009

BIG BIG news from all of us at TWO AI @two_platforms. #SUTRA-R0, our first reasoning model, is here. A reasoning model that delivers deeper, structured thinking across topics and domains. In early results, R0 beats OpenAI-o1-mini and DeepSeek-R1-32B in Hindi, Gujarati, Tamil and most Indian languages. Try the mode in ChatSUTRA powered by SUTRA-R0-Preview. More updates to follow soon. #SUTRA #AI

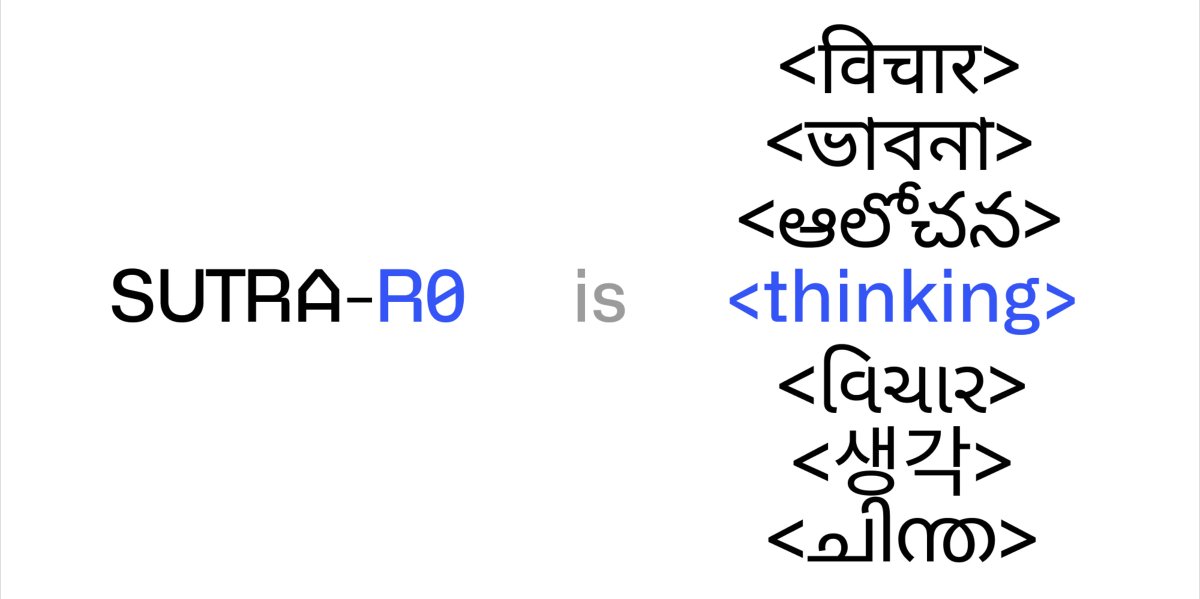

SUTRA-R0 is here. The next step in AI reasoning models. SUTRA-R0 delivers deeper, structured thinking across multiple domains and complex decision-making with multilingual capabilities. Its advanced reasoning goes beyond simple question-answering: it understands, interprets, and solves multi-step problems. 🎉 SUTRA-R0 public preview is available starting today on #ChatSUTRA. Read the detailed announcement on SUTRA-R0: #AI #ReasoningModel #SUTRA #SUTRAR0 by TWO AI

62

59

449

@AkshayiWeb @two_platforms @deepakravindran For sure. For select startups we will make SUTRA free. The new call for SUTRA for Startups will be up soon.

1

1

2

@kingofknowwhere U mean Ghost in the Wires? I haven't read that, but I think that was later, no? In my case it was Gilbert Ryle's work and of course the release of "I, Robot" in 2004, so I couldn't resist that name.

0

0

3

@BalajiAkiri @ndcnn @thehindubiz @two_platforms try it and u will see (+ it's 10 times smaller/faster)

1

0

1

@prem_k @ndcnn @thehindubiz @two_platforms SUTRA was always based on a Dual Transformer Architecture, which decouples the concept model from language capabilities.

1

0

1

@aditya__shinde @two_platforms soon, r0 will go in chatsutra for all users starting this weekend or monday

0

0

1

We have tried several of those approaches, but what we have found is that attention is not very good at establishing links between tokens from different languages, especially when the output is desired in a language different from the so-called thinking. In that way machines are closer to us. I still often think in Gujarati in my mind, and it becomes ineffective and difficult when you try to express that in English, especially in real time.

0

0

8

@satish1v we will be releasing more details as we get close to SUTRA-R1 launch, along with more benchmark results.

0

0

1

@paraschopra yes, while reasoning can be applied across different languages, the underlying process of reasoning itself is generally language-independent, meaning the core logical structures and rules used to draw conclusions are not inherently tied to a specific language, but rather to the cognitive processes involved in critical thinking. Although, in the case of a model (or the machines of today), representing that reasoning in some sort of form is necessary. If it is you and me, we can simply pass our reasoning to our next thought language-less. Not going too deep on this: here the reasoning is more for the user, to verbalise the process, rather than the machine actually using the reasoning in the same manner. I am sure one day (maybe) machines will think, but internally ;)

2

0

7

@aditya__shinde yes, this is S-V1 with a thinking UI preview, using inference-time instructions for a two-step process. R0-preview is limited in deployment to 15% of users. By EOW we plan to move all users to R0 as we add servers; there, think mode requires only a single-step process without instructions. Btw, MMLU also improves with R0, but at the cost of speed. With R1, now in planning, we aim to resolve that too, along with some cases where the thinking is also in the desired language (much harder).

1

0

2