Julian Hong

@julian_hong

Followers

1K

Following

3K

Statuses

2K

Radiation oncologist, Director of #radonc #informatics @UCSFCancer | Computational scientist @UCSF_BCHSI @UCJointCPH | data to the clinic | clone wars historian

San Francisco, CA

Joined May 2009

If you (or someone you know) are interested in bringing informatics, #datascience, #NLP, or #machinelearning to patient care, our lab is recruiting data scientists, #postdoc, and postbaccs! @UCSFCancer #radonc @UCSF_BCHSI @UCJointCPH

#postdocjobs


Come by #AMIA2024 Golden Gate room 1 to check out Ali’s podium talk on patient recall of prostate cancer treatment details compared to EHR and cancer registries! @JuneChanScD @anobelodisho @ErinVanBlarigan @staceykenfield

@UCSFCancer @UCSF_BCHSI @UCSF_DOCIT @UCJointCPH #pcsm


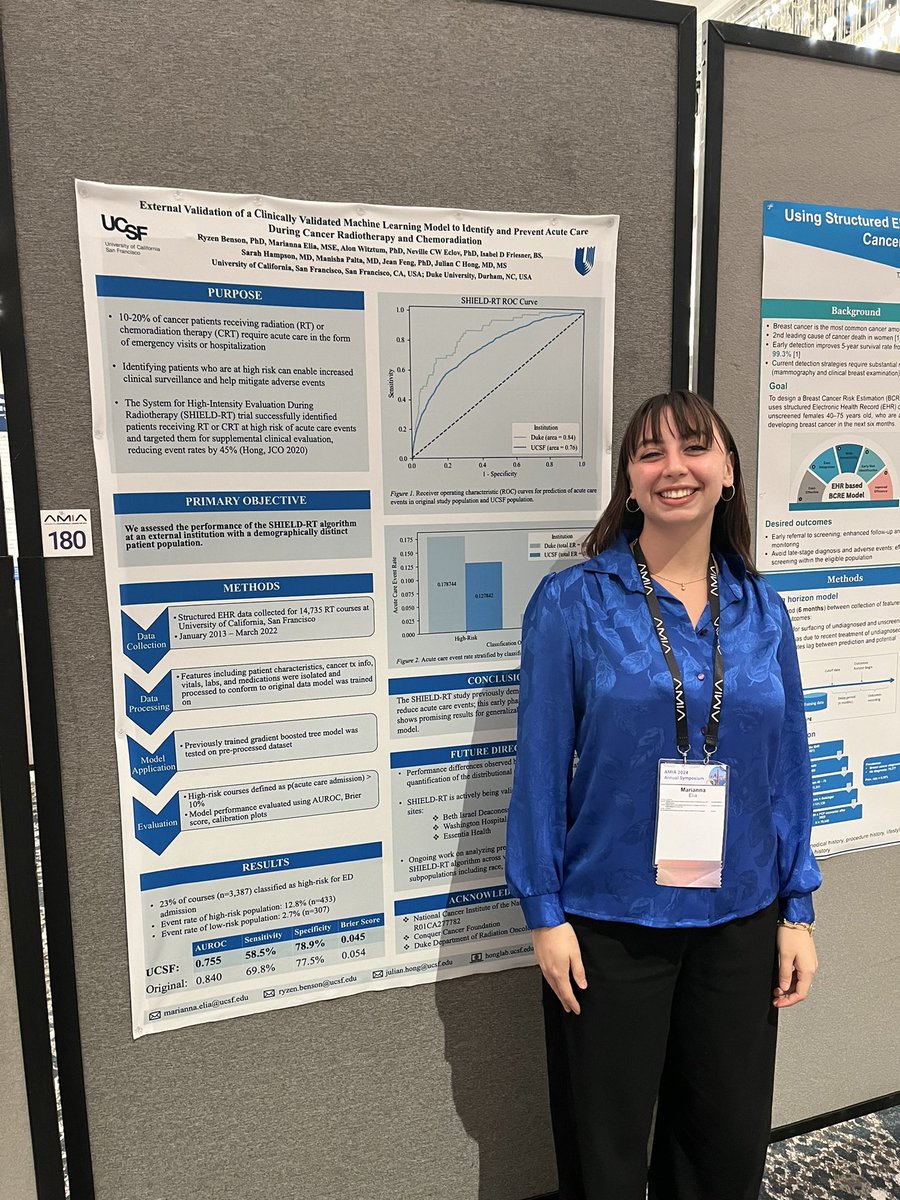

Come by #AMIA2024 poster 180 to see Mari at our poster externally validating our model predicting ER visits and hospitalizations during #radonc treatments at @UCSF! @UCSFCancer @UCSF_BCHSI @UCSF_DOCIT @UCJointCPH @DukeRadOnc Original trial here:


Was a privilege to kick off the @UCJointCPH fall lecture series! You can check out the recording at the CPH Youtube page below: @UCSF_BCHSI @UCSFCancer @UCSF_DOCIT #radonc

.@julian_hong inaugurates CPH's fall lecture series on developing, deploying, and regulating medical AI. Leading experts in the field speak Thursdays, 10-11 AM, starting September 26. Via Zoom webinar. Register here: @UCSF_BCHSI @UCSFCancer #ML4H


RT @ASCO: How can #MachineLearning be used to identify depression in patients w/ gyn cancers? Dr. @julian_hong explains #ASCOBT24 research…


RT @UCSF_RadOnc_Res: Want to learn more about training in Radiation Oncology? Applying this year? Sign up for virtual meet and greet sessio…


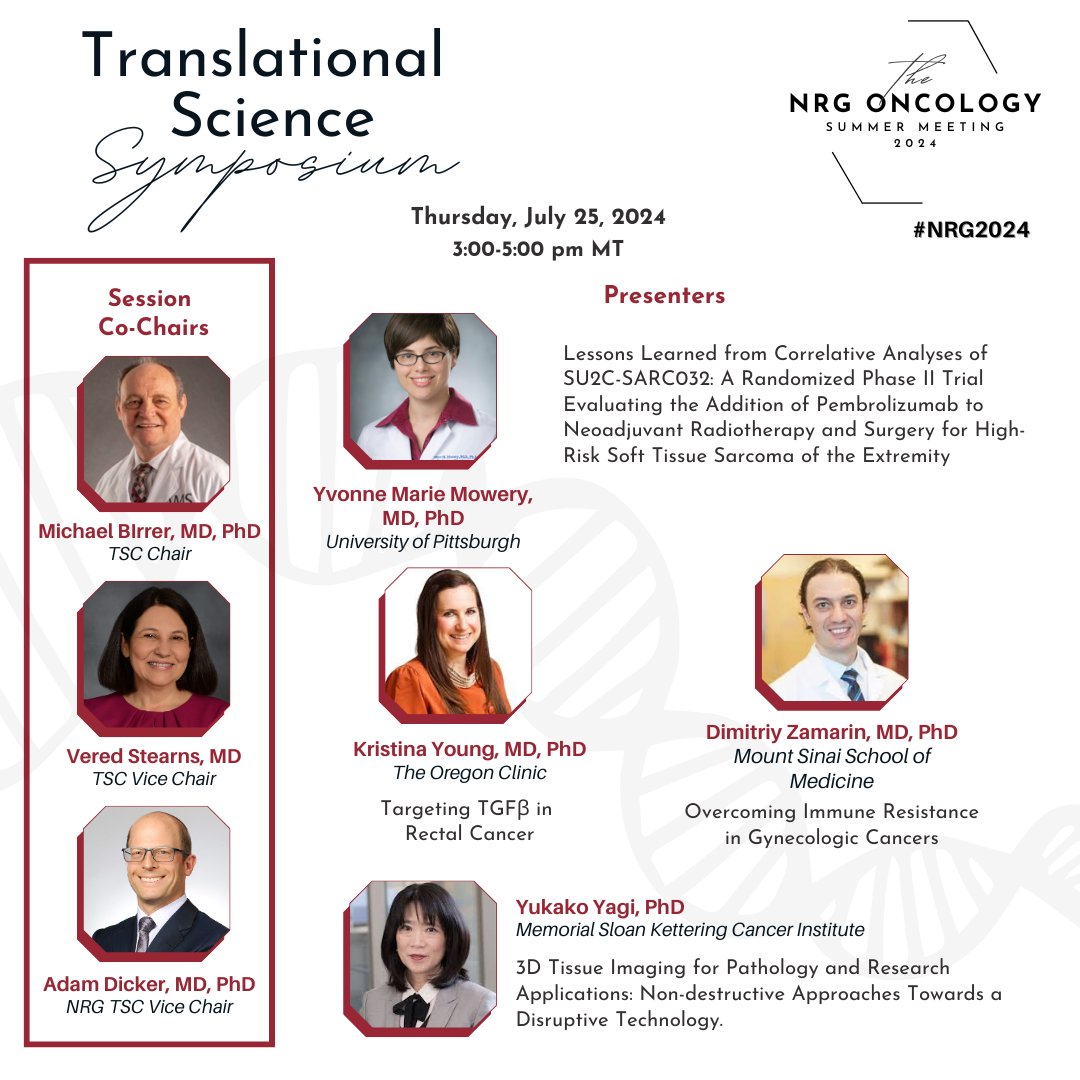

I am looking forward to the @NRGonc meeting. We have a great Translational Science session planned. @theNCI @NCItreatment @KimmelCancerCtr @TJUHospital


@ai4allatUCSF @UCSF_BCHSI @tomiko22 @msirota84 @UCJointCPH @UCSFPrecision @UCSFCancer @UCSFHospitals @UCSFimaging Thanks for having me!


"We’re trying to incorporate AI and machine learning into more trials because, at the end of the day, we’re trying to deliver better care" @julian_hong @UCSF_BCHSI | Leveraging Artificial Intelligence Evolutions in Prostate Cancer Care via @CancerNetwrk


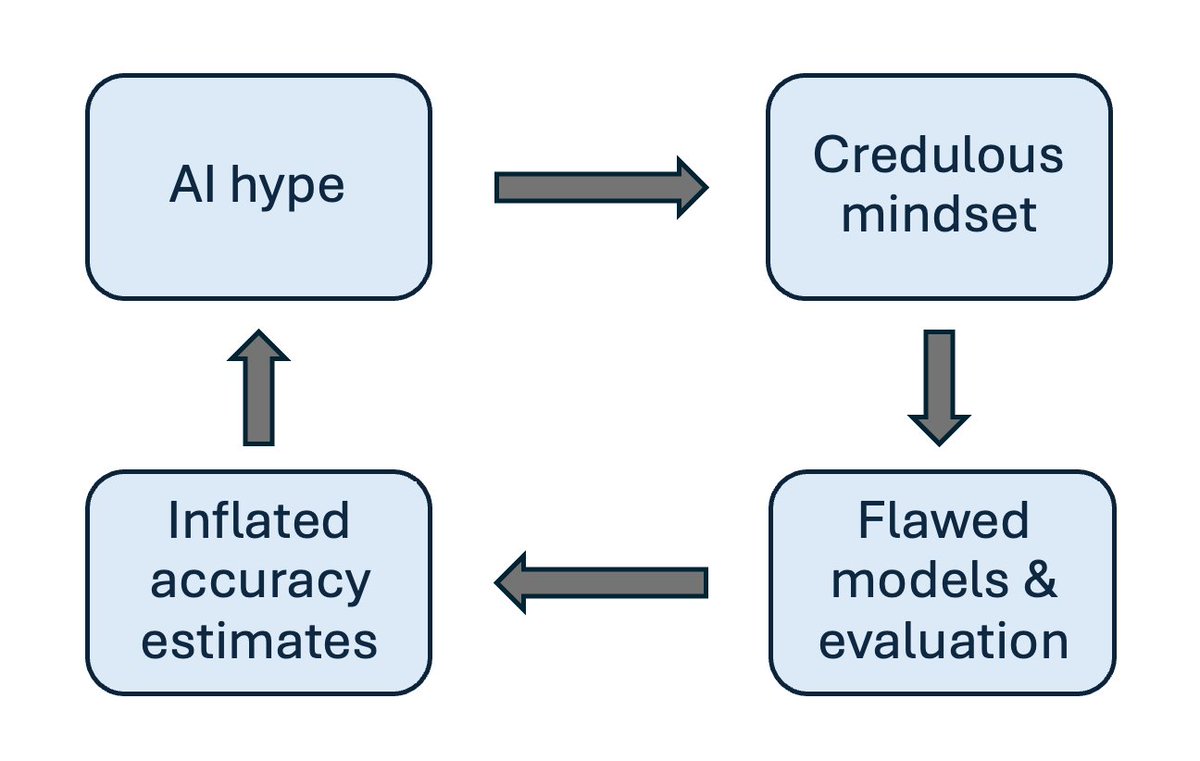

Great thread here - data leakage and the like are often hard to pick up in retrospective/observational healthcare data for a variety of operational reasons. Nothing can replace prospective testing to catch the things we miss.

New essay: ML seems to promise discovery without understanding, but this is fool's gold that has led to a reproducibility crisis in ML-based science. (with @sayashk).

In 2021 we compiled evidence that an error called leakage is pervasive in ML models across scientific fields. In our most recent survey the number of affected fields has climbed to 30. Leakage is only one of many reasons for reproducibility failures. There are widespread shortcomings in every step of ML-based science, from data collection to preprocessing and reporting results.

Root causes

The reasons for pre-ML replication crises, such as publication bias, also apply to ML. But a new and important reason for the poor quality of ML-based science is pervasive hype, resulting in the lack of a skeptical mindset among researchers, which is a cornerstone of good scientific practice.

We’ve observed that when researchers have overoptimistic expectations, and their ML model performs poorly, they assume that they did something wrong and tweak the model, when in fact they should strongly consider the possibility that they have run up against inherent limits to predictability. Conversely, they tend to be credulous when their model performs well, when in fact they should be on high alert for leakage or other flaws. And if the model performs better than expected, they assume that it has discovered patterns in the data that no human could have thought of, and the myth of AI as an alien intelligence makes this explanation seem readily plausible.

This is a feedback loop. Overoptimism fuels flawed research which further misleads other researchers in the field about what they should and shouldn’t expect AI to be able to do.

Glimmers of hope

Researchers should in principle be able to download a paper’s code and data, review it, and check whether they can reproduce the reported results. And the vast majority of errors can be avoided if the researchers know what to look out for. So we think that the problem can be greatly mitigated by a culture change where researchers systematically exercise more care in their work and reproducibility studies are incentivized.

We have led a few efforts to change this. First, our leakage paper has had an impact. Many researchers have used it to avoid leakage in their own work and to check previously published work. Beyond leakage, we led a group of 19 researchers across computer science, data science, social sciences, mathematics, and biomedical research to develop the REFORMS checklist for ML-based science. It is a 32-item checklist that can help researchers catch eight kinds of common pitfalls in ML-based science. It was recently published in Science Advances. Of course, checklists by themselves won’t help if there isn’t a culture change, but based on the reception so far, we are cautiously optimistic.

A tool, not a revolution

Of course, AI can be a useful tool for scientists. The key word is tool. AI is not a revolution. It is not a replacement for human understanding; to think so is to miss the point of science. AI does not offer a shortcut to the hard work and frustration inherent to research. AI is not an oracle and cannot see the future.

We are at an interesting moment in the history of science. Look at these graphs showing the adoption of AI in various fields (by Duede et al.):

These hockey stick graphs are not good news. They should be terrifying. Adopting AI requires changes to scientific epistemology. No scientific field has the capacity to accomplish this on a timescale of a couple of years. This is not what happens when a tool or method is adopted organically. It happens when scientists jump on a trend to get funding. Given the level of hype, scientists don’t need additional incentives to adopt AI. That means AI-for-science funding programs are probably making things worse.

We doubt the avalanche of flawed research can be stopped, but if at least a fraction of AI-for-science funding were diverted to better training, critical inquiry, meta-science, reproducibility, and other quality-control efforts, the havoc can be minimized.

P.S. Our book AI Snake Oil is all about how to separate real AI advances from hype. It's now available to preorder (and we're told preordering makes a big difference to the book's success).


@snseyedinMD @ASabbagh95 @ardademirci_14 @UCSFCancer @UCSF_BCHSI @UCJointCPH Thanks for joining and looking forward to having you join us!


RT @ravi_b_parikh: So excited to get this going with @ca_chung plus @julian_hong @SanjayAnejaMD @GauravSingalMD @PatelOncology Donna Rivera…


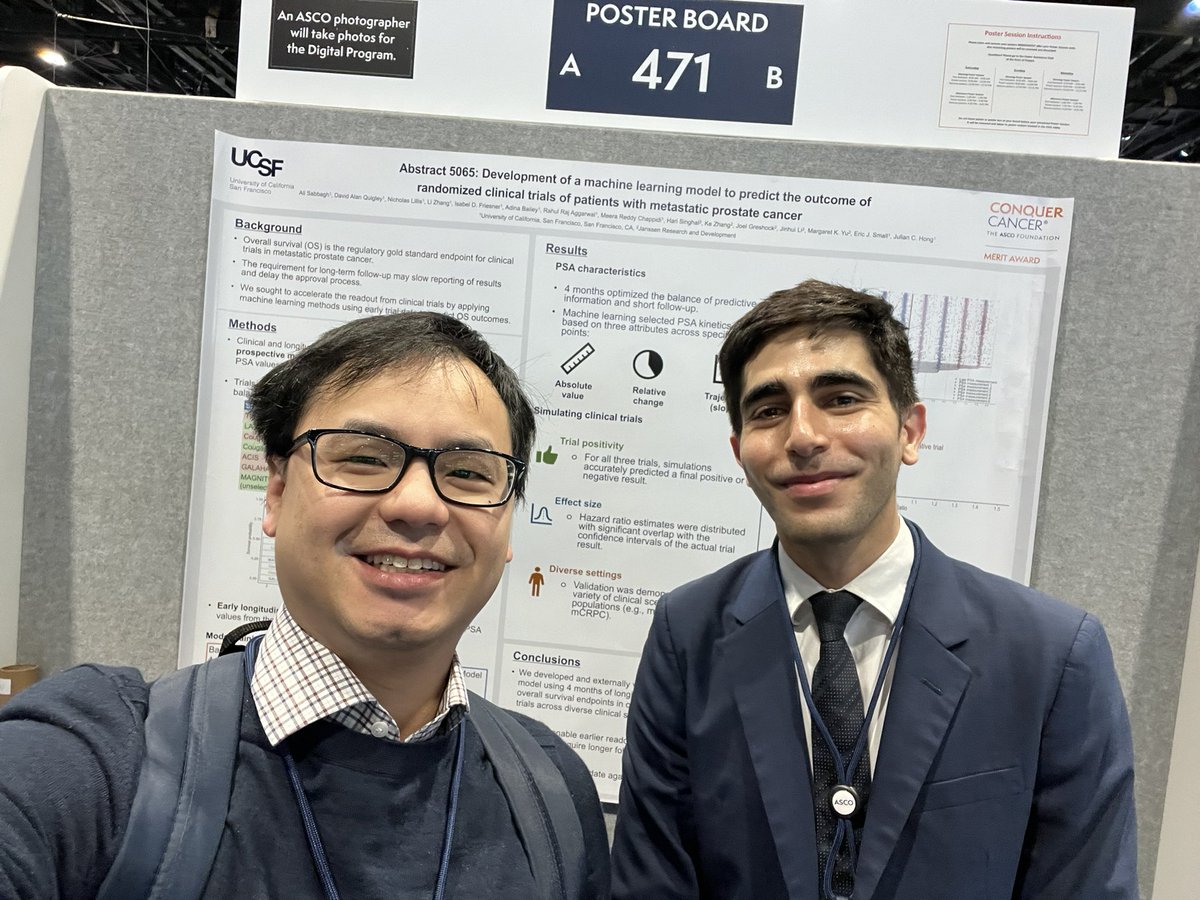

Come by @UCSFCancer @UCSF_BCHSI @UCJointCPH postdoc and incoming @MSKCancerCenter resident @asabbagh95’s poster applying #machinelearning to accelerate the readout of prostate cancer clinical trials. Recipient of an @ASCO Merit Award! Thank you @PCFnews for support! #asco24 #pcsm


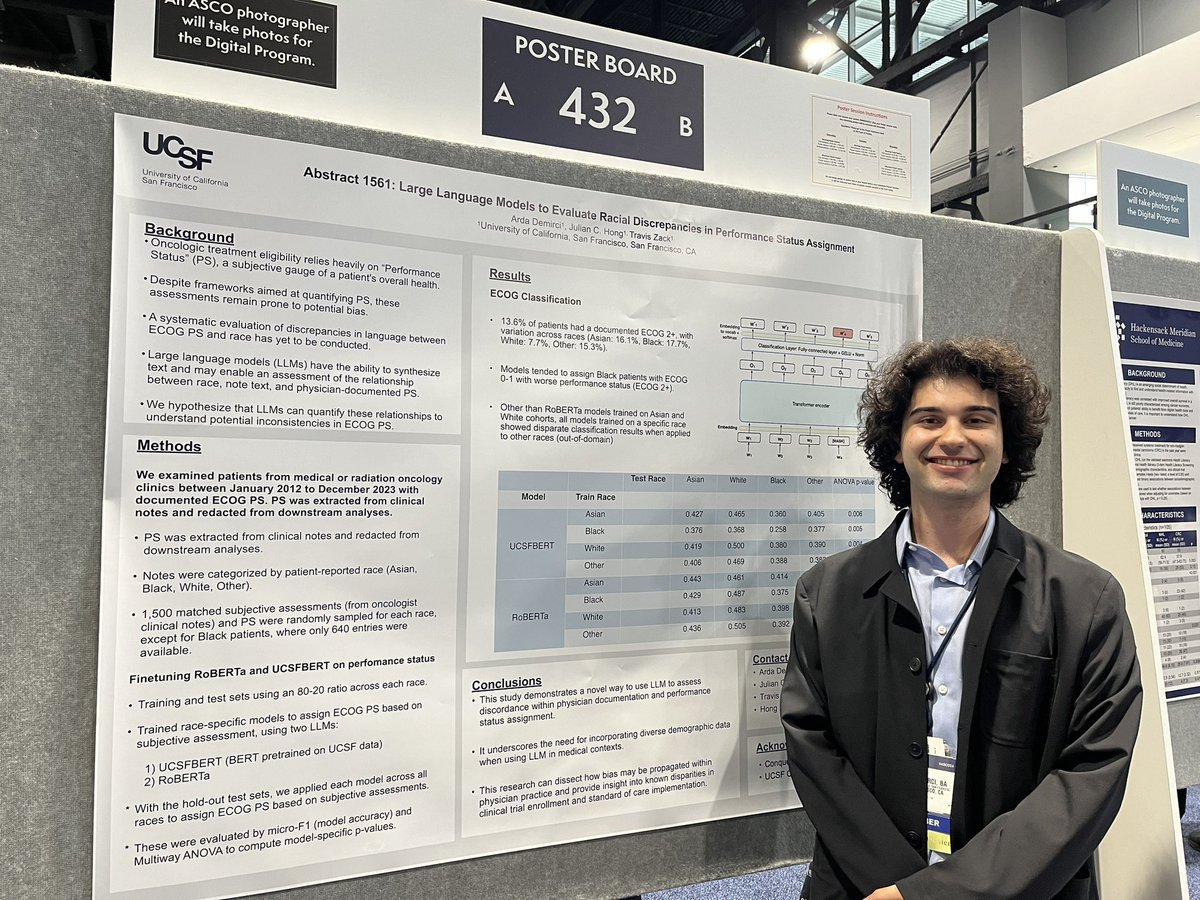

Come by poster 432 to talk to @ardademirci14 about his work with @TravisZack2 applying large language models to identifying discrepancies in assigning performance status! #ai #asco24

@UCSF_BCHSI @UCSFCancer @UCJointCPH


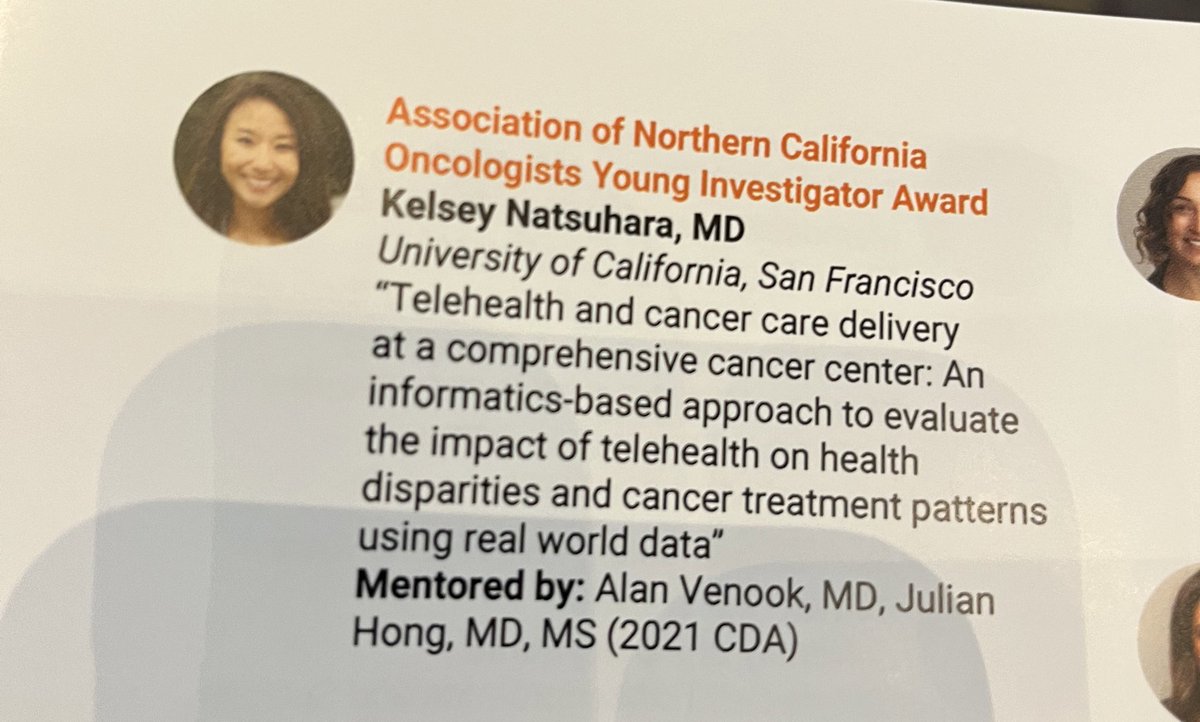

Congrats @KelseyNatsuhara on your @ASCO @ConquerCancerFd Young Investigator Award! #asco24 @UCSFCancer @UCSF_BCHSI @UCJointCPH @ANCO_News


RT @NEJM_AI: Original Article by Dr. @natesan_divya et al.: Health Care Cost Reductions with Machine Learning–Directed Evaluations during R…


RT @KelseyNatsuhara: 🙏🏼 Incredibly honored to receive a @ConquerCancerFd YIA! #ASCO24 🌟Huge thanks to my stellar mentor team @julian_hong…
