James Michaelov

@jamichaelov

Followers: 349 · Following: 202 · Statuses: 70

Postdoc @MIT. Previously: @CogSciUCSD, @CARTAUCSD, @AmazonScience, @InfAtEd, @SchoolofPPLS. Research: language comprehension, the brain, artificial intelligence

Joined September 2017

Also generally interested in chatting about cognitive modeling, scaling, and language comprehension/understanding in humans and machines! @COLM_conf #COLM2024

Excited to present this at COLM this week! Reach out if you want to meet/chat!

New preprint with @linguist_cat and Ben Bergen! We’ve all heard of the new wave of recurrent language models, but how good are they for modeling human language comprehension? Quite good, it turns out! 🧵

This paper is now accepted to be presented at @COLM_conf! Updated version is on arXiv. Feeling excited for the conference, let me know if you want to meet!

@linguist_cat And the current wave of recurrent architectures has just started! As we see more and more new architectures and developments, it will be interesting to see how they compare. One thing does seem clear though: recurrent models are back with a vengeance!

Exciting to see our paper (with @MeganBardolph, Cyma K. Van Petten, Benjamin K. Bergen, and @CoulsonSeana) 'in print' at @jneurolang!

5️⃣ Michaelov et al. find that surprisal explains N400s to sentence-final words varying in predictability, plausibility, and relation to the likely completion better than semantic similarity does. The results support lexical predictive coding accounts. @jamichaelov 7/n

This is concerning, and I wouldn't be surprised if it leads to some students having to withdraw their papers from the conference

NAACL 2024 seems to charge $750 for students to register if they're a presenter (every paper requires at least one registered presenter). @naacl am I reading this right? Seems like a major burden on students, especially if (as is common) only a paper's student authors attend.

Really enjoyed the @babyLMchallenge talks and posters hosted by @conll_conf/@CMCL_NLP at @emnlpmeeting last year! Looking forward to seeing what people come up with this time round!

👶 BabyLM Challenge is back! Can you improve pretraining with a small data budget? BabyLMs for better LLMs & for understanding how humans learn from 100M words. New: how vision affects learning; bring your own data; paper track 🧵

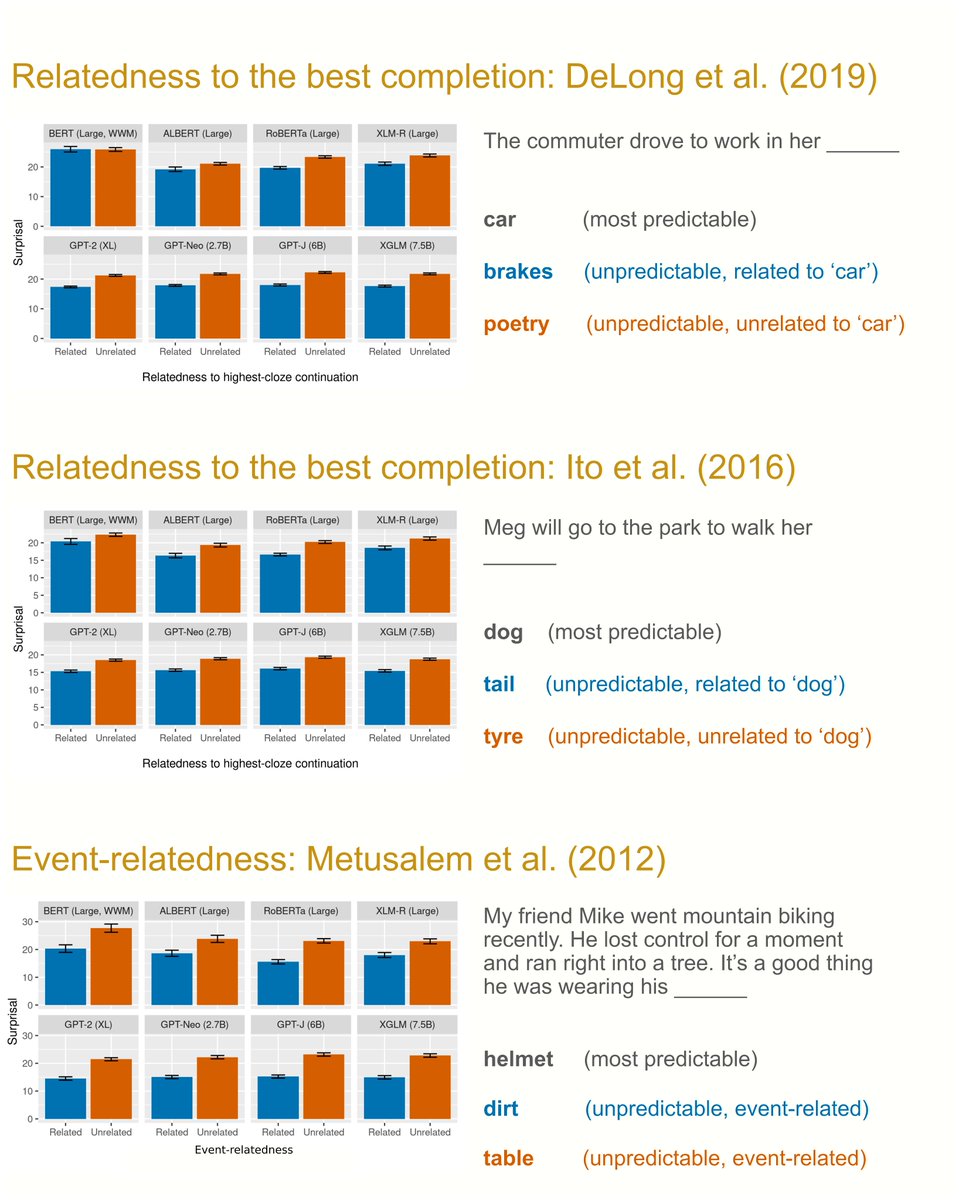

The key takeaway of this study is that, compared to the human cloze baseline, language models over-predict words that are related either to the most predictable next word (the 'best completion') or to the event under discussion.

First, looking back to our paper at CoNLL 2022: ‘Collateral facilitation in humans and language models’ 🧵:
