Hua Shen✨

@huashen218

Followers: 2K

Following: 4K

Statuses: 657

Postdoc @UW, Prev.@UMich, Ph.D @PSU, Research Intern @GoogleAI, @AmazonScience. 🦋@huashen.bsky.social. ✨I’m on the job market this year!

Seattle, WA

Joined August 2018

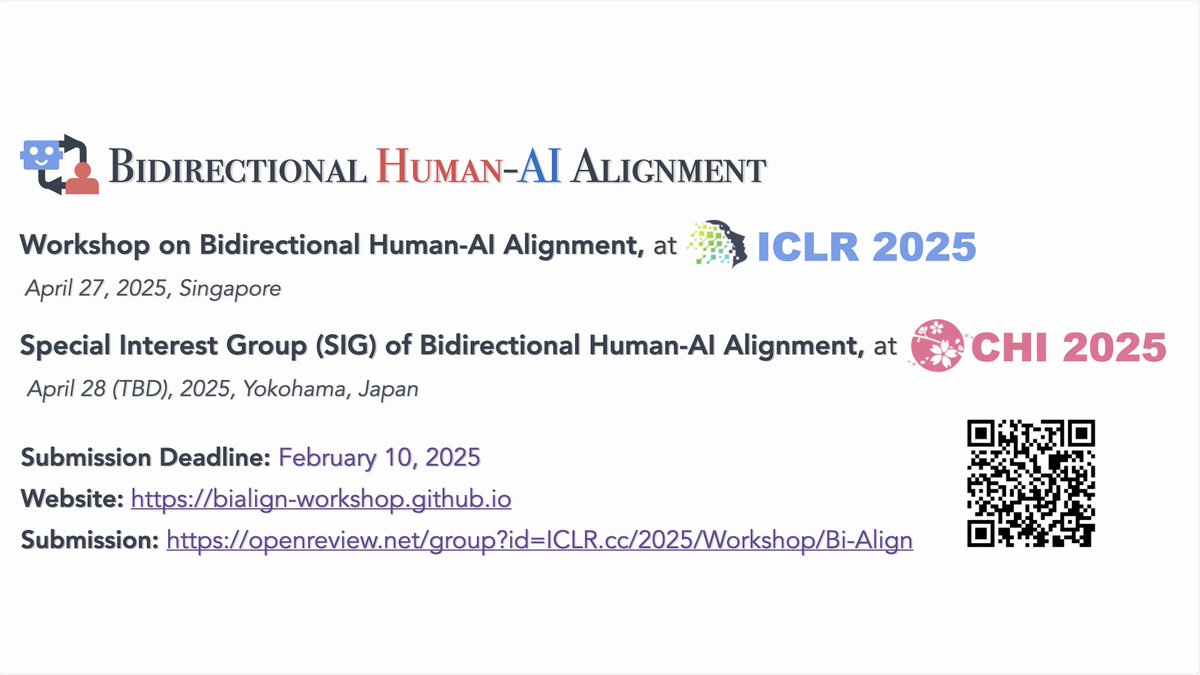

🚀 Are you passionate about #Alignment Research? Exciting news! Join us at the ICLR 2025 Workshop on 👫<>🤖Bidirectional Human-AI Alignment (April 27 or 28, Singapore). We're inviting researchers in AI, HCI, NLP, Speech, Vision, Social Science, and other domains to submit their work on alignment-related topics! 📄 Paper Formats: 2-page (tiny), 4-page (short), & 9-page (long) 🗓️ Key Dates: Tentative Submission Deadline: Feb 3, 2025 (AoE). Workshop Day: April 27 or 28, 2025 💠Topics: 🔸Position papers and roadmaps for alignment research 🔸Specifying human values, cognition, and societal norms 🔸RLHF and advanced algorithms, interactive/customizable alignment methods 🔸AI safety, interpretability, scalable oversight, and steerability 🔸Red teaming, benchmarks, metrics, and human evaluation for alignment 🔸Dynamic human-AI co-evolvement and societal impact 🌟Speakers: @_beenkim, @bradamyers, @fraukolos, @HungyiLee2, @RichardMCNgo, @danbohus, @Pavel_Izmailov 👫Organizers: @huashen218, @ziqiao_ma, @reshmigh, @tknearem, @lxieyang, @tongshuangwu, @andresmh, @Diyi_Yang, @ABosselut, @furongh, @tanmit, @sled_ai, Marti A. Hearst, @dawnsongtweets, @yangli169 ✍️ Interested in joining our Program Committee to help review? Apply here! Join us in advancing Human-AI Alignment research together🌟! #ICLR2025 #AIAlignment #HCI #NLP #Speech #ComputerVision

Our #ICLR workshop has extended the deadline to Feb 15 (AoE) to accommodate the #CHI session. Looking forward to your amazing work! 🤗👉

Interested in Human-AI Alignment research and want to workshop the complex and evolving challenges in this space? Consider submitting your work at our interdisciplinary #ICLR2025 workshop @iclr_conf & #CHI2025 SIG. Deadline Feb 15th.

Hi @yuanzhi_zhu, thanks for the question! 😊 "Bidirectional Human-AI Alignment" is a comprehensive framework that captures two interconnected processes: 🔹 Aligning AI to Humans: Incorporating human values and specifications to train, steer, and refine AI systems. 🔹 Aligning Humans to AI: Empowering people to understand, critique, collaborate with, and adapt to AI advancements. This work was derived from the collective intelligence of many co-authors. Pls feel free to check it out here:

Please see our update on the #ICLR2025 Bidirectional Human-AI Alignment workshop’s submission deadline 👉!

Good news if you've been sitting on whether or not to submit to the #ICLR2025 workshop on bi-directional human-AI alignment -- we've extended our submission deadline until February 15th! Visit the workshop website for more information:

RT @tknearem: We have two great opportunities to join the conversation on bi-directional human-AI alignment -- at both @iclr_conf and @acm_…

🤗Thanks to those asking about our CHI session on Bidirectional 👫Human-AI🤖 Alignment! 🔥Exciting news: we'll host both the 2025 #ICLR workshop & a #CHI Special Interest Group (SIG), bringing together #HCI & #AI experts to collectively explore how humans & AI/LLMs should be aligned.🚀 📢 Submit via OpenReview & you may opt to present at #ICLR or #CHI! The deadline is approaching, and we can't wait to see your amazing work! ✨ @iclr_conf @acm_chi @uw_ischool @michigan_AI #HumanAI #Alignment

RT @windx0303: Imagine having a paper about Xiaohongshu (RedNote, 小紅書) accepted at #CHI2025 with the best timing possible. Big congratulat…

Happy New Year, everyone!🌟 If you're interested in our ICLR 2025 👫<>🤖Bidirectional Human-AI Alignment Workshop, check out our website for more details. Our submission portal is also live now. Can't wait to see you join us and present your amazing research on human-AI alignment at ICLR 2025! @iclr_conf 🚀

RT @michigan_AI: 📌 Do you plan to attend #ICLR2025? Interested in human-centered AI alignment? 💡 Sign up for this upcoming workshop to ex…

RT @reshmigh: 📢 Call for Papers Working on finding #alignment gaps in the latest #AI models? Do you have applications that suggest #LLMs &…

RT @ziqiao_ma: Excited to co-organize the #ICLR2025 Workshop on Bidirectional Human-AI Alignment (Bi-Align). Big thanks to an amazing team…

4/ 👫【Workshop Organizing Committee】 Our organizing committee includes interdisciplinary researchers across academia and industry. ✍️ If you want to join us as a Program Committee Member to help review the submissions, please apply here: 📚Workshop Proposal: More details are coming, please stay tuned! @huashen218, @ziqiao_ma, @reshmigh, @tknearem, @lxieyang, @tongshuangwu, @andresmh, @Diyi_Yang, @ABosselut, @furongh, @tanmit, @sled_ai, Marti A. Hearst, @dawnsongtweets, @yangli169

RT @pyoudeyer: Human Creativity in the Age of LLMs Randomized Experiments on Divergent and Convergent Thinking "Our findings reveal that…

RT @joon_s_pk: Simulating human behavior with AI agents promises a testbed for policy and the social sciences. We interviewed 1,000 people…

RT @MicahCarroll: 🚨 New paper: We find that even safety-tuned LLMs learn to manipulate vulnerable users when training them further with use…

RT @jaseweston: 🚨 Self-Consistency Preference Optimization (ScPO)🚨 - New self-training method without human labels - learn to make the mode…
