Haitao Mao

@haitao_mao_

Followers: 962

Following: 305

Media: 15

Statuses: 207

Final-year PhD student @MSU working on Graph Foundation Models, Network Science, and morality in LLMs. LoG 2024 Organizer. Looking for a postdoc position.

Joined September 2022


Pinned Tweet

In this blog, we discuss the potential of the new Graph Foundation Model era. It is a great pleasure to work with @michael_galkin, @mmbronstein, @AndyJiananZhao, and @zhu_zhaocheng. See more details in our paper and reading list.

📢 In our new blogpost w/ @mmbronstein @haitao_mao_ @AndyJiananZhao @zhu_zhaocheng we discuss foundation models in Graph & Geometric DL: from the core theoretical and data challenges to the most recent models that you can try already today!

My best research partner Zhikai Chen @drshadyk98 recently proposed a roadmap for tracking recent progress on graph foundation models. We are still quite far from this goal. Thanks in advance for your contributions!

Our position paper on graph foundation models has been accepted at #ICML2024. It gives a rough description of this direction, and a new, improved version will be wrapped up soon. The topic is still new and there is no consensus yet, so all feedback that helps make it better is welcome!

Just finished writing a new version of our GFM paper. Super happy and passionate along this journey. Many new insights were added with the help of @michael_galkin. The revision, with an interesting new title, will be online soon!

Check out our efforts on incorporating LLMs into graph learning. Our repository is efficient and easy to use for your next research project. Feel free to star and follow!

Thanks, Hardy, for introducing our recent work on graph foundation models! Check out our vocabulary perspective on GFM design. It helps us connect network analysis, expressiveness, and network stability with GFM design.

I am even more excited to bid on papers for Learning on Graphs @LogConference than for NeurIPS. The paper quality is so high and attractive!

The Graph Foundation Model Workshop @TheWebConf was a success. This was my first time leading the organization of a workshop, and I ran into more difficulties than I had imagined. I learned a lot along the way. A more open and exciting GFM-related workshop is coming in the near future.

Congrats to all the authors whose papers were accepted at #WWW2024! If you already plan a trip to WWW, you are welcome to submit papers to our WWW Graph Foundation Model Workshop (GFM). For more details, please visit the official website. The submission deadline is February 5.

See our new preprint on the first graph transformer for link prediction. It is efficient, effective, and adaptive!

The first in-person LoG conference, which you cannot miss!!! See you in LA!

(1/2) The @LogConference is the leading conference dedicated to graph machine learning. The third edition is not happening this year, but next year (2025). Don't be too sad though, we are preparing something even bigger: there is going to be an in-person main event at @UCLA. If

Check out our new work:

Evaluating Graph Neural Networks for Link Prediction: Current Pitfalls and New Benchmarking

#NeurIPS2023

- We reveal several pitfalls in link prediction.
- We conduct benchmarking to facilitate fair and consistent evaluation.

Check it out:

Learned a lot from the talented work by IR experts Philipp Hager, @RDeffayet, and @mdr. The paper finds many pitfalls in our Baidu-ULTR dataset and points out solid new directions. Compared with them, I am only a naive outsider in this domain. Nice work!

@chaitjo

My experience is much simpler: the reviewer wrote just one sentence, saying he does not believe our method can work. Rating 3 with confidence 5.

Check out our paper! One dollar can label a graph of 2.4M+ nodes with 75% accuracy!

📣 My labmate @weisshelter is on the academic job market this year! He is broadly interested in #MachineLearning (ML) and #DataScience, especially in graph ML, data-centric AI, and trustworthy AI, with applications in computational biology, social good

@YuanqiD

Thanks, Yuanqi. I am going to mention this universal structure point in our recent blog. It is still kind of surprising that the transfer happens, since molecular graphs occur naturally while social graphs are constructed by humans. There is a lot of room to explore and think about why.

@ShuiwangJi @michael_galkin @YuanqiD @mmbronstein @AndyJiananZhao @zhu_zhaocheng

Yes, that is an interesting point😂. A strategy I use for reading GFM papers is to first read the experimental setting and major results, and then read the abstract and introduction.

Join our WSDM Cup competition!

We're launching #WSDM2023 challenges brought to you by Baidu and MSU.

Task 1: Unbiased Learning for Web Search

Task 2: Pre-training for Web Search

Top ranks share USD 7,000 in prizes and get conference registrations to WSDM-23.

It is LoG 2024!!! We are looking for reviewers!

🙌

Our new call for #LoG2024 local meetups is out! This "network" of local mini-conferences aims to bring together attendees belonging to the same geographic area, fostering discussions and collaborations. If you are interested in hosting one, please read this

We added a discussion of GFMs on the algorithmic reasoning task from @PetarV_93, including how algorithmic alignment may provide an additional advantage over LLMs. The corresponding CLRS dataset is also included in the dataset summary.

Join KDD Cup 2023!

🎉Attention all data enthusiasts and machine learning experts! The Amazon KDD Cup 2023 competition is here with an exciting challenge on session-based recommendation systems.

#KDDCup2023 #RecommendationSystems

@SitaoLuan

Thanks, Sitao. I noticed your work as soon as it appeared on arXiv. I also discuss your paper in detail in the related work section😂

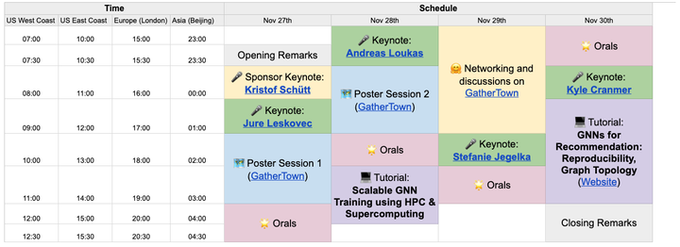

Join LoG!

LoG is happening tomorrow!

Highlights of the program:

🎤 Exciting keynotes from @jure, @andreasloudaros, Stefanie Jegelka, @KyleCranmer, @ktschuett

🌟 12 orals

💻 Tutorials on scalability & recommendation

🤗 Poster sessions & networking

Join now via
