Dora Zhao

@dorazhao9

Followers

576

Following

485

Media

8

Statuses

90

CS PhD @Stanford. Previously @SonyAI_global and @PrincetonCS @VisualAILab. (she/her)

Stanford, CA

Joined February 2018

New #ICML2024 position paper. Many ML datasets claim to hold properties such as “diversity” but often fail to properly define or validate these claims. We propose drawing from measurement theory in the social sciences as a framework for diverse dataset collection.

7

23

129

excited that we won Best Paper at #ICML2024! come check out my talk tomorrow morning in the Data and Society session 😄

21

26

205

What visual cues are correlated with gender in image datasets? Basically everything! In our #ICCV2023 work, we explore where gender artifacts arise in visual datasets, using COCO and OpenImages as a case study.

2

10

95

Excited to be presenting this work as an oral at #NeurIPS Datasets and Benchmarks in Vancouver!! ✌️

There’s been a significant push to curate fairer and more responsible ML datasets, but what are the practical aspects of this process? 🤔 In our new study, we interviewed 30 ML dataset curators who have collected fair vision, language, or multi-modal datasets. 🧵

8

6

64

Excited that our work on teenager perceptions and configurations of privacy on Instagram will appear at #CSCW2022! An arXiv preprint is available. Short thread 🧵:

1

2

17

Excited to be sharing our paper "Men Also Do Laundry: Multi-Attribute Bias Amplification" that I worked on with Jerone Andrews and @alicexiang at #ICML2023. arXiv link:

1

1

15

This work was done in collaboration with Jerone Andrews, @SciOrestis, and @alicexiang. I will be in Vienna presenting this paper and hope to chat with anyone interested in questions around data collection + sociotechnical systems. 📜:

1

1

11

This work is a culmination of an awesome team effort from @morganklauss, @Pooja_Chitre, Jerone Andrews, @geodotzip, @walkeroh, @khpine, and @alicexiang! Check out the full preprint here:

0

0

10

@nicole__meister and I presented our poster on "Gender Artifacts in Visual Datasets" @WiCVworkshop. The paper is available on arXiv.

1

2

9

"Quantifying Societal Bias Amplification in Image Captioning" by @hirota_yusuke, Yuta Nakashima, and @noagarciad. Really interesting work extending bias amp metrics to vision + language systems!

0

1

6

Shout-out to my amazing co-authors @nicole__meister, @ang3linawang, Vikram, @ruthcfong, and @orussakovsky from the @VisualAILab -- some of whom will be presenting this work in Paris next month 🥐🥖!

1

1

3

late to reposting about @CrazyRichMovie, but AAPI stories are always relevant so 💁🏻‍♀️💁🏻‍♀️💁🏻‍♀️

OPINION | ZHAO. For many Asian-Americans, the sense of statelessness and not belonging depicted in the movie "Crazy Rich Asians" is relatable, @dorazhao9 writes.

0

0

3

@ang3linawang @CornellInfoSci @cornell_tech @StanfordHAI @sanmikoyejo Congrats Angelina 🎉🪩!!!! Excited to see you around Stanford next year.

1

0

2

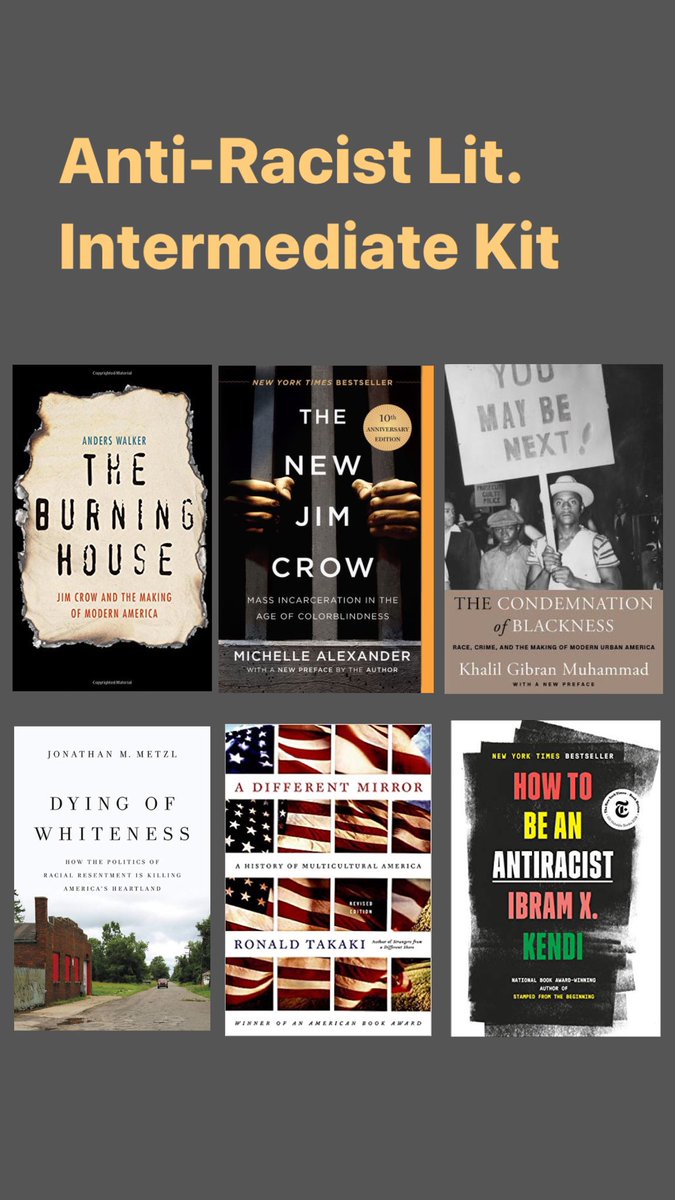

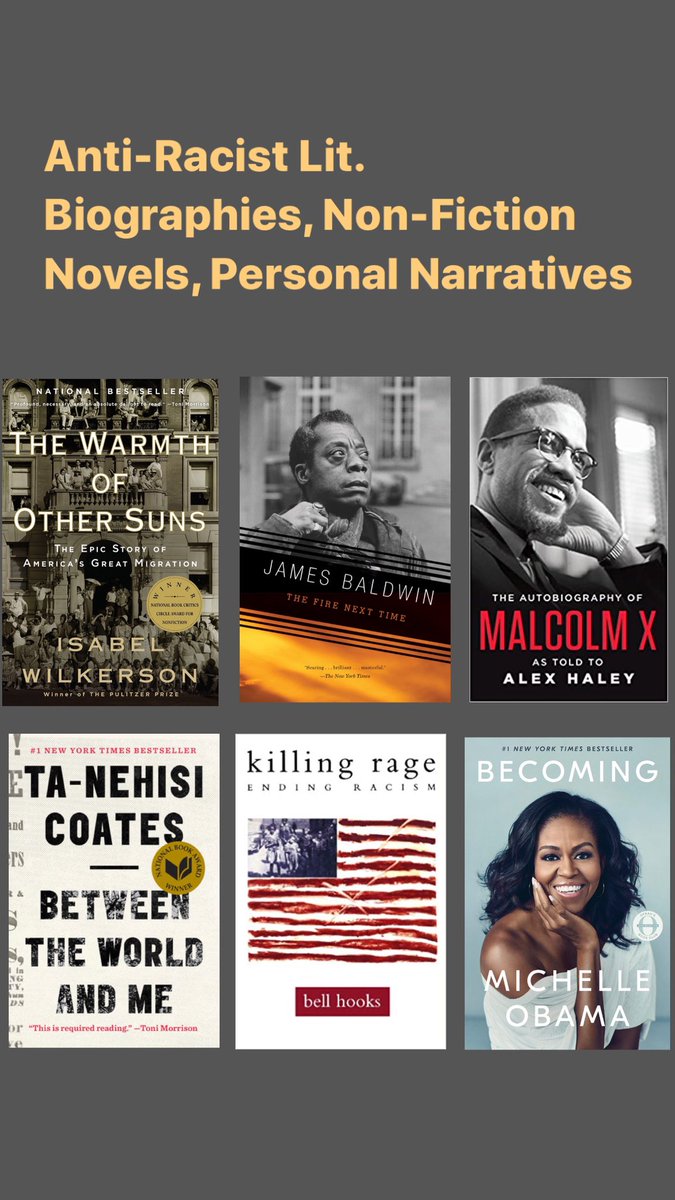

And for anyone interested in technology but especially for computer scientists / developers, two more books to read are Race After Technology by Ruha Benjamin and Algorithms of Oppression by Safiya Noble!!

I’ve been getting a lot of questions from my non-Black friends about how to be a better ally to Black people. I suggest unlearning and relearning through literature as just one good jumping-off point, and have broken up my anti-racist reading list into sections:

0

0

2

Finally, thanks to my collaborator Mikako Inaba, advisor @andresmh, classmates in COS 597I: Social Computing, and of course all of our participants!

0

0

1

Because saying “you’re pretty for an Asian girl” is not a compliment. A letter to Asian girls via @Honi_Soit.

0

0

1