OpenTrain AI

@OpenTrainAI

Followers

73

Following

0

Statuses

23

The Data Labeling Marketplace | Where AI Builders and AI Trainers Connect to Build the Future | Find, hire, & securely pay data labelers for any annotation tool

Seattle, Washington

Joined September 2023

@natolambert They could hire & pay the same talent from these "data foundries" directly on Savings are often 50%+

0

0

1

@Suhail Here to help! Post your data labeling project and connect with freelancers and/or data labeling agencies. We also offer a fully-managed solution, where we build your own annotation team and manage the whole process. DMs are also open.

0

0

0

RT @martin_casado: At this point I feel like we understand pretty well what's going on with LLMs: - Outputs are roughly equivalent to kern…

0

154

0

RT @fchollet: The perfect quote to describe LLMs can be found in a 1946 Jean Cocteau movie -- "Réfléchissez pour moi, je réfléchirai pour v…

0

95

0

@ylecun We're working on this. Hire & pay data labelers/contributors directly, with only a small transaction fee. But if the resulting dataset will be open sourced, ZERO FEES.

0

0

0

Agreed! But some datasets are just too large/require too much time. This is when many turn to crowdsourcing. The issue with crowdsourcing is it's largely a "black box". You don't get any feedback on issues with the dataset or anomalies. This is becoming even more important as people start building more advanced models. Advanced models require labelers with domain-expertise that is not available through crowdsourcing. Here's the data labeling process we'd recommend: 1) Data scientist/engineer do the initial labeling (10-20 hours recommended) 2) Source & hire INDIVIDUAL data labelers with expertise in your data's domain (on of course 😉) 3) Work closely with the data labelers (daily sync up, open communication channels, etc) This allows for deep understanding of training data, while still being scalable for large datasets.

1

0

1

A big reason for this is the cost of hiring data talent. The big data labeling platforms are prohibitively expenses and openly have a 70% margin (and pay their data labelers poorly). We're working on making it easier & more cost-effective for the open source community to get custom data by allowing people to post a dataset need > Get proposals from freelancers (many of whom also work for the large data providers) > Pay them directly with only a small transaction fee for completed milestones. ...We also wave the transaction fee if the resulting data set will be open sourced

0

0

0

We're working on a solution for this. There are a lot of great tools, like Label Studio, but finding specialized talent to do the actual annotation work can be difficult. With OpenTrain, you post your job and select your labeling software > workers submit proposals > you hire and simply add the workers to your labeling software account. You then pay the talent directly in escrow milestones. Also, if the specialized talent isn't on our platform yet, we recruit them and bring them onboard for your job. We only charge a small % on successful milestone payouts...vs the big data labeling companies who charge a massive markup and long term contracts. We support 20+ data labeling tools and hiring in 110+ countries.

0

0

0

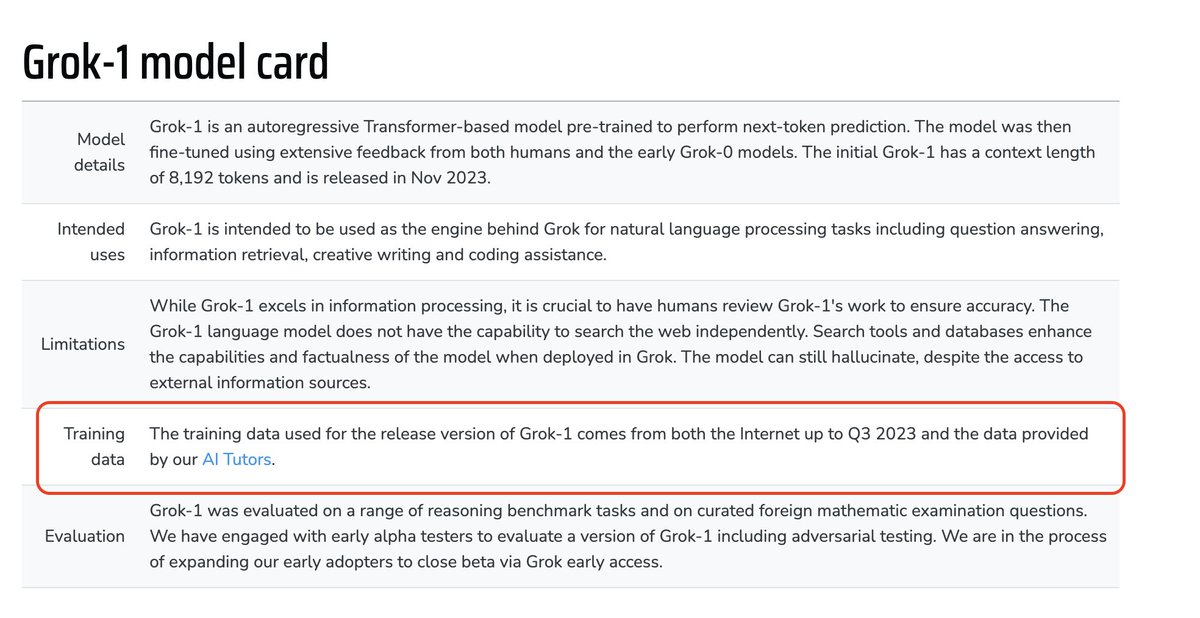

Grok-1 by @xai utilized "AI Tutors", or human subject matter experts to create custom training data & provide RLHF. We're making this easy for anybody to do this with The data labeling marketplace to find, hire, & pay training data experts for ANY data labeling software. Simply setup the data workflows on your data labeling tool of choice, & use OpenTrain to find the subject matter experts to work from that tool. Like UpWork, but for "AI Tutoring" Post jobs & collect applicants 100% free! #rlhf #llm #datalabeling #trainingdata

0

0

5

RT @lmsysorg: 🔥Excited to introduce LMSYS-Chat-1M, a large-scale dataset of 1M real-world conversations with 25 cutting-edge LLMs! This da…

0

88

0

An in-depth look at RLHF by @natolambert from @huggingface. The need for high-quality, task-specific data in RLHF is crucial. With OpenTrainAI, you can find, hire, & pay the human experts essential for responsible and effective RLHF. Post your job today! #RLHF #MachineLearning

Reinforcement Learning from Human Feedback (RLHF) is gaining traction. This field aims to make AI more responsible by including human values and preferences. In this video, @natolambert, a research scientist and RLHF team lead at @huggingface explores its inner workings, applications and industry impact. RLHF has gained the spotlight in recent years. The growth of language models like Anthropic’s Claude and OpenAI's ChatGPT have increased interest in human-feedback integration. "There are some rumors that Open AI had two teams; one was doing RLHF and the other instruction fine-tuning. And the RLHF team kept getting more and more performance." Understanding RLHF The RLHF process has three main steps: Pre-training: Much like with GPT models, the journey starts with pre-training on a large corpus of data. This can range from text data, web scrapes, to specialized datasets. Reward Modeling: This is the RLHF counterpart of supervised fine-tuning in large language models. This stage involves creating a reward model that resonates with human values and preferences. RL Optimization: This stage parallels reward modeling and reinforcement learning in traditional AI models. The AI system fine-tunes itself based on the reward model, employing reinforcement learning algorithms for that extra layer of optimization. The Data Challenge Data collection and curation in RLHF closely resemble the challenges you'd encounter in large language model training. Datasets from organizations like OpenAI can serve as a useful foundation. However, the need for high-quality, task-specific data cannot be overstated. Implementing RLHF: A Practical Guide If you’re someone who loves getting hands-on with AI libraries like Hugging Face, implementing RLHF is right way to do. It’s essential to understand its limitations. Think about model stability, over-optimization, and exploration strategies, much like you would when prompt engineering. Ongoing Research and Next Steps While he suggests that some basics figured out, there are layers of complexity that still need to be unraveled: 1. New Benchmarks: How do we measure the effectiveness of RLHF? 2. Preference Modeling: How can the model be made to understand human preferences better? 3. Interpreting RLHF: Much like explainability in traditional models, how do we make RLHF more interpretable? 4. System-Wide Evaluation: Going beyond individual performance, how does RLHF affect an entire system? The Transformative Power of RLHF Whether you're an AI developer, a business analyst, or a marketer, RLHF promises to revolutionize your domain. Imagine customer service chatbots that understand human emotions better, or content generators that align more closely with human values. RLHF is an emerging field that focuses on enhancing machine learning models through human feedback. While it tackles important issues like bias and ethics, its broader goal is to improve system performance across various applications. Whether you're deeply invested in the ethics of AI or simply curious about advancements in machine learning, RLHF offers valuable insights. If you're interested in the next wave of AI development, this area is definitely one to watch.

0

0

2