Miles Cranmer

@MilesCranmer

Followers

13K

Following

5K

Statuses

3K

Assistant Prof @Cambridge_Uni, works on AI for the physical sciences. Previously: Flatiron, DeepMind, Princeton, McGill.

Cambridge, UK

Joined September 2011

@renegadesilicon @cursor_ai Yeah, the API limits on Claude are definitely a big annoyance. In the end I caved and decided to triple my Cursor subscription

0

0

1

@NMcGreivy Yes but DeepMind was not formed for protein folding. My intent is more to point out that this style of science — searching broadly for established challenges that a new tool would be useful for — is an often undervalued approach.

1

0

2

[You Are Here]
↓
Agent
↓
LLM
↓
High-level language
↓
Low-level language
↓
Assembly
↓
Machine code

"AI will replace programmers!"

No – each level up this abstraction stack is still programming. AI won't eliminate coding; it's just another evolution in how we express specifications in more efficient ways.

4

5

55

@PatrickKidger pictured – me dodging the expert nerd snipe 😜 Maybe one day though. It would of course be quite nice to pass pure-Python loss functions...

0

0

7

@PatrickKidger I think the first part should already be possible with `warm_start=True` and `niterations=1` and repeated calls to fit. The second part sounds out of scope. I think the ROI on a Python-to-Julia converter is low. Maybe with SymPy (?), but it would greatly limit the flexibility
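A minimal sketch of the "repeated calls to fit" idea, assuming the model follows the scikit-learn-style `fit(X, y)` interface that PySR's `PySRRegressor` exposes. The helper names `fit_in_rounds` and `make_pysr_model` and the `on_round` callback are hypothetical and only for illustration; whether the search state carries over between calls in exactly the desired way is what the tweet suggests, not something verified here.

```python
def fit_in_rounds(model, X, y, n_rounds, on_round=None):
    """Call model.fit repeatedly; with a warm-started model each call resumes the search."""
    for i in range(n_rounds):
        model.fit(X, y)
        if on_round is not None:
            # Hook for inspecting or acting on the model between rounds.
            on_round(i, model)
    return model


def make_pysr_model():
    """Build a PySR model that runs one iteration per fit call (assumes PySR is installed)."""
    # Imported lazily so fit_in_rounds can be used without PySR available.
    from pysr import PySRRegressor

    return PySRRegressor(
        niterations=1,      # one search iteration per call to fit
        warm_start=True,    # continue from the previous call's state
        binary_operators=["+", "*"],
    )
```

With PySR installed, something like `fit_in_rounds(make_pysr_model(), X, y, n_rounds=20, on_round=my_hook)` would give the caller a chance to run arbitrary Python between iterations.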

1

0

2

I think "interleaving" depends on implementation here. If you just want something like {expression(X)} + {neural_net(X)} as your forward model, then the gradients can just be concatenated (one w.r.t. the expression constants, and one w.r.t. the neural net). Or you could just compute the gradients of each piece in separate steps, as I'm not sure it would matter much.

For anything else, I think the best approach is to let PyTorch/JAX do all of the AD and simply write up a custom autograd function[1] for the expression evaluation that calls DynamicExpressions.jl's hand-rolled gradient function via juliacall (which itself is pretty battle-tested, as it's one of the primary tools we use in PySR/SymbolicRegression.jl!). But I'd need to verify that passing Tensor/DeviceArray to DynamicExpressions.jl is ok (passing numpy arrays to it seems to work... gotta love multiple dispatch).

Note that the Julia-formatted string is not notation but rather actual code that is evaluated via juliacall. The function object is passed to SymbolicRegression.jl. I don't do any parsing of that string myself or even use a Julia macro – the user provides an arbitrary Julia function. If wanting to use PyTorch/JAX autograd for all of this, the user would need to write a regular PyTorch/JAX function instead – which I guess would even be better? It might just work!

The hard part would be Python's GIL, which would cause a massive hit in performance. This makes me curious if torch.compile or jax.jit can compile C++ symbols that could be routed to Julia, sidestepping the GIL completely? Darn GIL!!

[1] When I say custom autograd I am just thinking of these directly: and juliacall lets you pass Python arrays directly to Julia functions, so in principle you might (??) not even need to convert the array because of multiple dispatch
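A rough sketch of the custom-autograd idea, using PyTorch's `torch.autograd.Function`. Everything about the expression side is an assumption: `eval_expression` and `grad_expression` are hypothetical NumPy stand-ins for whatever the Julia side would provide via juliacall, the expression `c0 * cos(c1 * x0)` is made up, and tensors are assumed to live on the CPU in float64. No Julia is called here.

```python
import numpy as np
import torch


def eval_expression(X, consts):
    """Hypothetical external evaluator: y = c0 * cos(c1 * x0)."""
    return consts[0] * np.cos(consts[1] * X[:, 0])


def grad_expression(X, consts):
    """Hand-rolled Jacobian dy/dconsts with shape (n_rows, n_consts)."""
    x0 = X[:, 0]
    d_c0 = np.cos(consts[1] * x0)
    d_c1 = -consts[0] * x0 * np.sin(consts[1] * x0)
    return np.stack([d_c0, d_c1], axis=1)


class ExternalExpression(torch.autograd.Function):
    """Lets PyTorch's AD pass through an externally evaluated expression."""

    @staticmethod
    def forward(ctx, X, consts):
        X_np = X.detach().cpu().numpy()
        c_np = consts.detach().cpu().numpy()
        # Cache the external Jacobian for use in the backward pass.
        ctx.jac = torch.from_numpy(grad_expression(X_np, c_np)).to(consts.dtype)
        return torch.from_numpy(eval_expression(X_np, c_np)).to(consts.dtype)

    @staticmethod
    def backward(ctx, grad_output):
        # Vector-Jacobian product w.r.t. the constants; X gets no gradient here.
        return None, grad_output @ ctx.jac


torch.manual_seed(0)
X = torch.randn(8, 3, dtype=torch.float64)
consts = torch.tensor([1.5, 0.7], dtype=torch.float64, requires_grad=True)
net = torch.nn.Linear(3, 1).double()

# Forward model of the form expression(X) + neural_net(X).
pred = ExternalExpression.apply(X, consts) + net(X).squeeze(-1)
loss = (pred ** 2).mean()
loss.backward()  # fills consts.grad and the gradients of net's parameters
```

After `backward()`, `consts.grad` holds the gradient with respect to the expression constants and `net.weight.grad` / `net.bias.grad` hold the neural-net part, so an optimizer can treat them as one concatenated parameter set.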

1

0

0

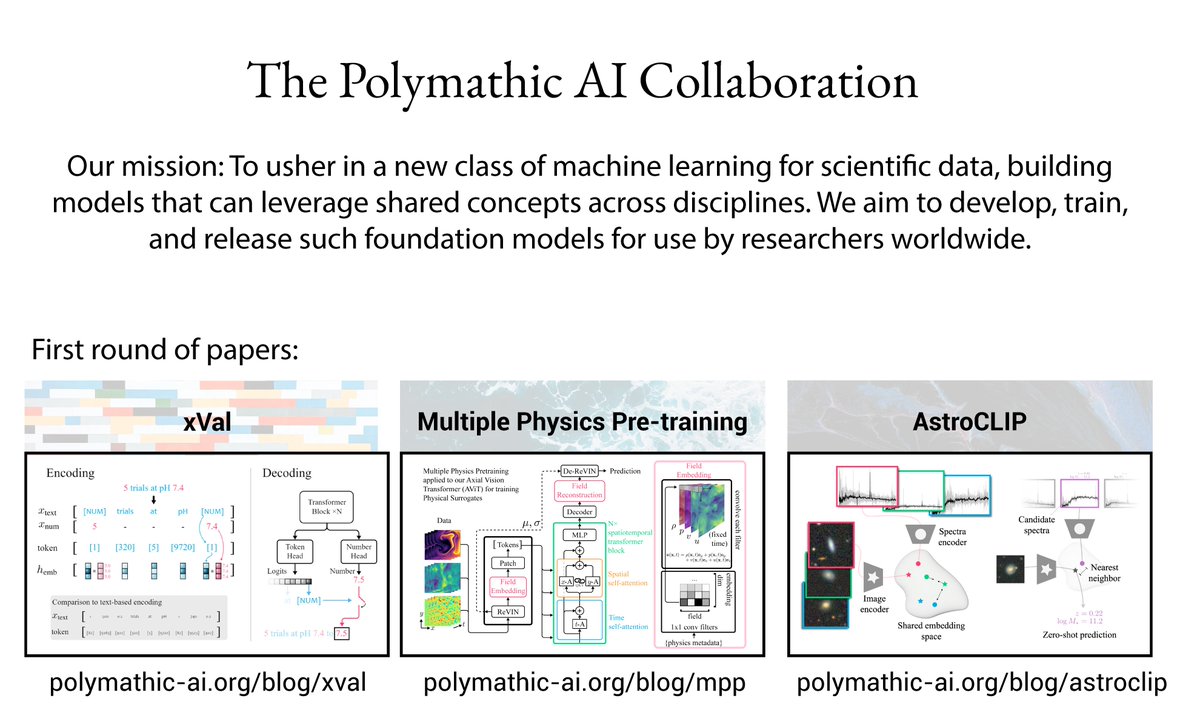

RT @MilesCranmer: 🚨 FINAL REMINDER 🚨: Multiple Postdoc and PhD positions in our AI + Physics cluster in DAMTP, Cambridge! Deadlines: - Pos…

0

11

0