Michael Black

@Michael_J_Black

Followers: 73K · Following: 10K · Media: 535 · Statuses: 3K

Director, Max Planck Institute for Intelligent Systems (@MPI_IS). Chief Scientist @meshcapade. Building 3D digital humans using vision, graphics, and learning.

Tübingen, Deutschland

Joined April 2010

Code and data are now online for CameraHMR, our state-of-the-art parametric 3D human pose and shape (HPS) estimation method that will appear at #3DV2025. There are 4 key contributions that make it so accurate and robust: 1. To get accurate 3D

I asked #Galactica about some things I know about and I'm troubled. In all cases, it was wrong or biased but sounded right and authoritative. I think it's dangerous. Here are a few of my experiments and my analysis of my concerns. (1/9).

The Max Planck Society has pledged to support Ukrainian scientists who have to flee and need a place to work. If you are a computer vision scientist leaving #Ukraine, reach out to me.

With LLMs for science out there (#Galactica) we need new ethics rules for scientific publication. Existing rules regarding plagiarism, fraud, and authorship need to be rethought for LLMs to safeguard public trust in science. Long thread about trust, peer review, & LLMs. (1/23).

Stepping outside my area of expertise, I’m frustrated that Europeans are not being asked to reduce their energy use. In addition to showing solidarity with #Ukraine, it would enable the EU to impose stronger sanctions against Russia. 1/4.

Now you can try out BITE (#CVPR2023) on @huggingface and create a 3D model of your dog from a single image. Have fun!

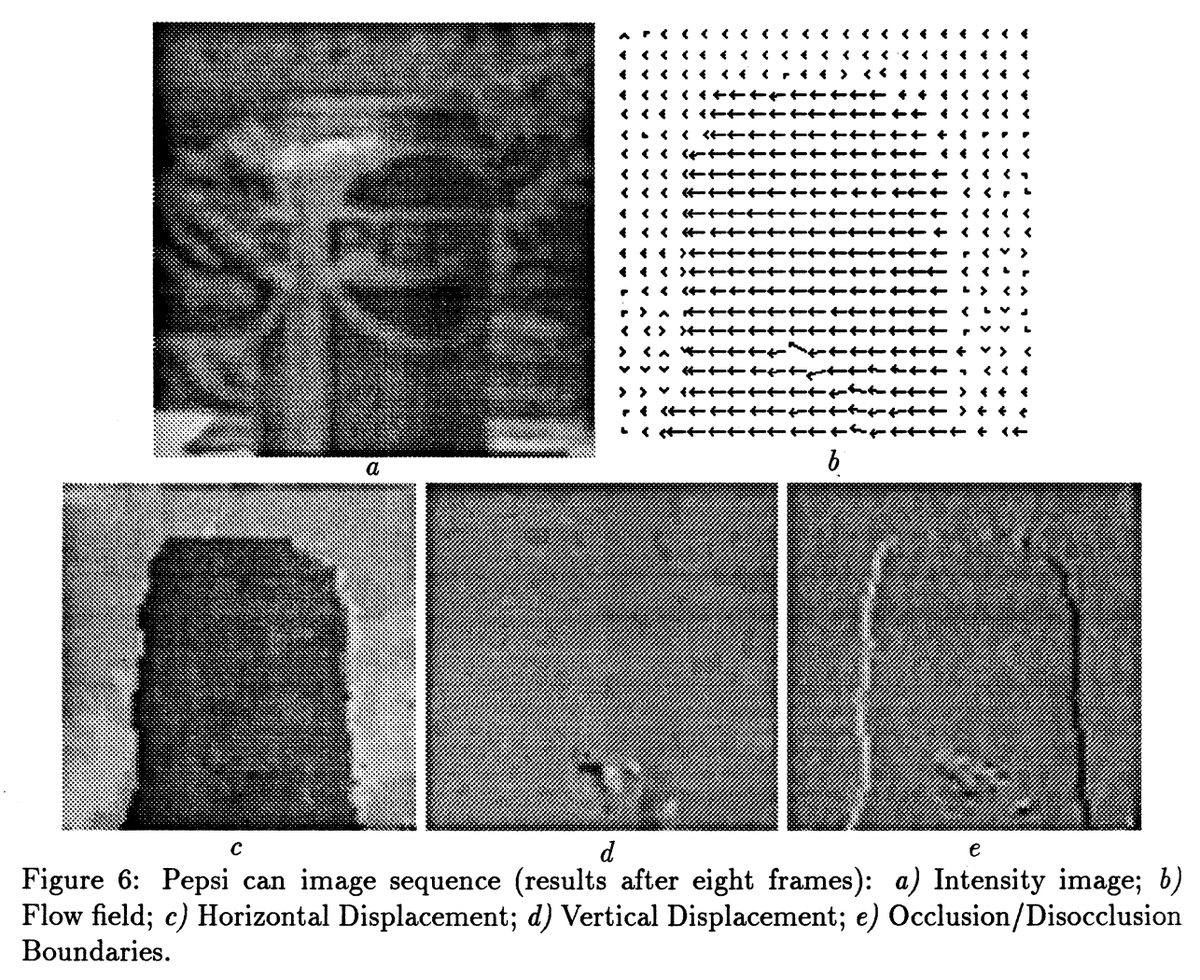

I’m honored to share the 2020 Longuet-Higgins prize with @DeqingSun and Stefan Roth. It is given at #CVPR2020 for work from #CVPR 2010 that has withstood the test of time. I’ve written a blog post about the secrets behind “The Secrets of Optical Flow”:

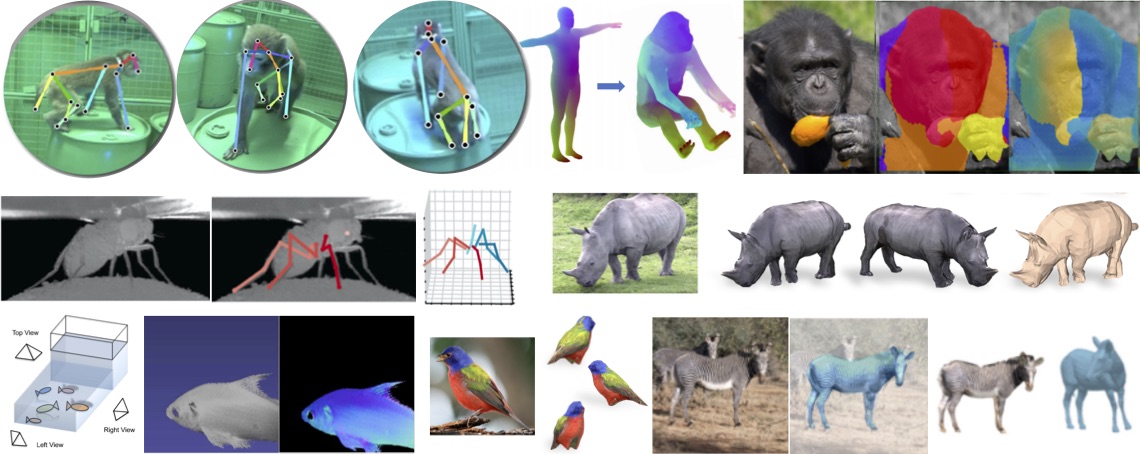

Understanding human behavior requires understanding 3D human contact with the world. To study this, we introduce a dataset (RICH) and a method (BSTRO) that infers 3D contact on the body from a single image. #CVPR2022 (1/8)

There's a problem with 3D human pose & shape (HPS) estimation methods. You either get good 3D accuracy or good alignment with the image, but not both. Why? The current top methods use the wrong camera model. TokenHMR at #CVPR2024 analyzes the issue and presents a solution. (1/8)

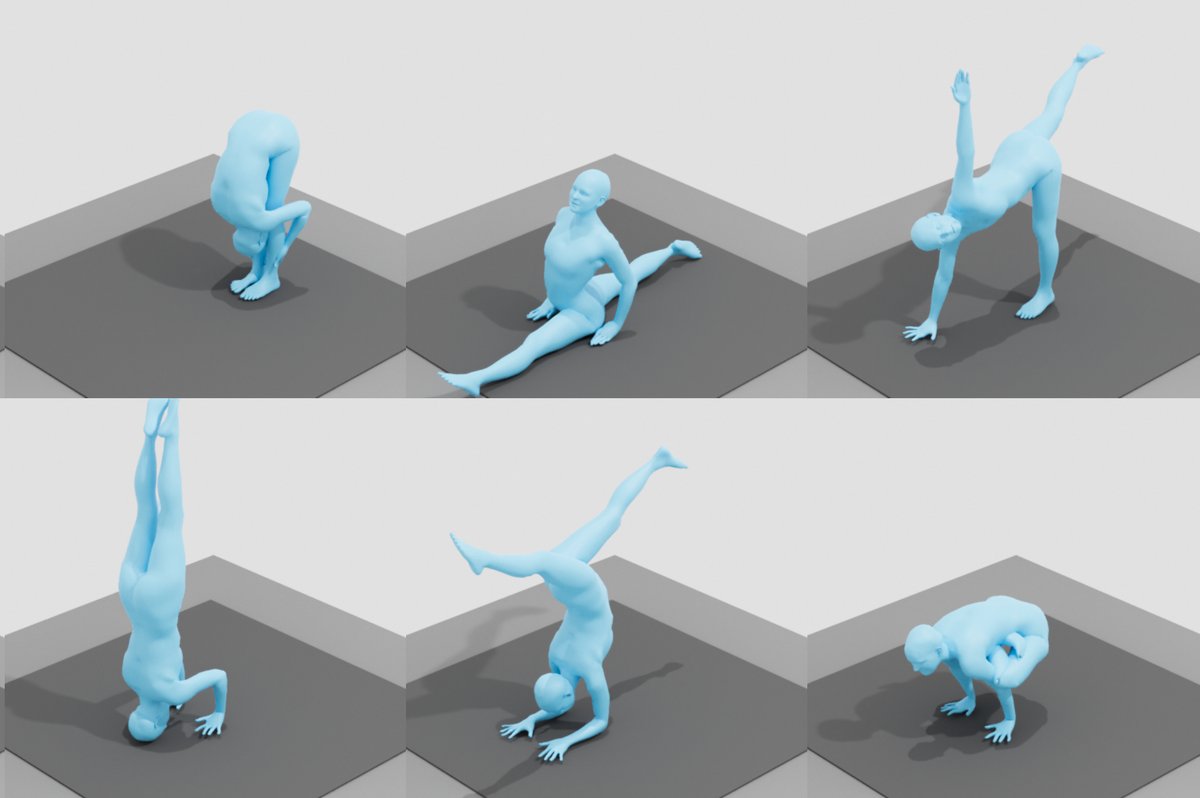

Train your avatars to interact with 3D scenes. We use adversarial imitation learning and reinforcement learning to train physically-simulated characters that perform scene interaction tasks in a natural and life-like manner. Today at #SIGGRAPH2023.

This is why I find AR more exciting than VR. It’s the ability to extend human perception beyond the visible spectrum that will literally let us understand the world in new ways.

Finally, you can literally "grab" your #wifi signal and find your #network dead spots in #mixedreality. Special thanks to the @BadVR_Inc team for putting together such a handy app. Link below 👇. #technology #QuestPro

The #CVPR2024 deadline is approaching and it's time to “Dance with the one who brung ya” - this is the phrase I use before a deadline when your results fall short of your dream (as they often do). It means to accept what you have and make the most of it. 1/4.

Today marks my 10th anniversary of living in Germany. It was my honor to co-found the @MPI_IS together with @bschoelkopf and to build the @PerceivingSys department together with amazing students, postdocs, and staff, past and present. My deepest thanks.

A critical skill for scientists is to know what you know and to know what you don't know. And then admit what you don't know to yourself and others. LLMs like #ChatGPT are hugely impressive but to make them useful for science, the ability to say "I don't know" is necessary.

What’s the key enabling technology of the #metaverse? It’s not #VR headsets, #AR glasses, or #avatars with legs. It’s computer vision (CV), and @Meta clearly understands this. We focus on headsets & avatars because they're tangible, visible artifacts in a way that CV isn’t. 🧵

Given the outside surface of the human body, can we peer inside and infer the bones? Many methods predict a “skeleton” that is not realistic. With OSSO (#CVPR2022), for the first time, we learn to predict a detailed skeleton from external observations. (1/7)

Upgrade your expressive 3D human avatars from #SMPL-X to #SUPR, our latest and greatest body model. SUPR is trained from 1.2M 3D scans, is more expressive, and includes feet with articulation and compression. Code by @NeelayShah8, video by @AYiannakidis.

New @CVPR2020 paper: VIBE: Video Inference for Human Body Pose and Shape Estimation. SOTA 3D human pose and shape from video. @mkocab_ & @athn_nik at @PerceivingSys. Code etc.: Video:

This was my first CVPR and nobody told us we were getting an award. Anandan and I skipped the banquet where the prize was awarded. David Kriegman accepted it on our behalf, and for the rest of the conference people were congratulating him. Lesson: never skip the awards session!

CVPR 1991 Best Paper Award. Robust Dynamic Motion Estimation over Time. @Michael_J_Black and P. Anandan. #TBThursday

I was honored to accept the Koenderink Prize at @eccvconf 2022 on behalf of my coauthors Dan Butler, Jonas Wulff, and Garrett Stanley. The prize recognizes the Sintel optical flow dataset paper for standing the test of time. Behind the scenes blog post:

This is an interesting, timely, and important paper. The takeaway is that "recent self-supervised models such as DINOv2 learn representations that encode depth and surface normals, with StableDiffusion being a close second". This contrasts with vision-language models like CLIP,

Google announces Probing the 3D Awareness of Visual Foundation Models. Recent advances in large-scale pretraining have yielded visual foundation models with strong capabilities. Not only can recent models generalize to arbitrary images for their training task, their

Poster practice session for #cvpr2022 was a ton of fun. My job was to pretend to be all sorts of poster visitors. They are ready for New Orleans!

I repeat: Easily produced science text that's wrong does not advance science, improve science productivity, or make science more accessible. I like research on LLMs but the blind belief in their goodness does a disservice to them and science. Here is an example from #ChatGPT 1/5

I don't usually respond to obvious trolling because it just gives more attention to the troller. But if you're curious about what I actually wrote about #Galactica in Nov 2022, you can read it for yourself here: I concluded with: "I applaud the ambition.

Will @Grady_Booch, @GaryMarcus, @Michael_J_Black, and others ever rescind their prophecies of LLM-powered doom for the scientific community? It seems that the release of ChatGPT, a mere few weeks after Galactica, and the groundswell of public interest in it made them hold their.

.@thiemoall publishes in the area (excellent work BTW) so it's on the right track but it has made up this reference. Based on these few tests, I think #Galactica is 1) an interesting research project, 2) not useful for doing science (stick with wikipedia), 3) dangerous. (4/9).

While most existing methods that estimate 3D human pose and shape (HPS) do so in camera coordinates, many applications require 3D humans in global coordinates. At #CVPR2023, we introduce TRACE, which addresses this using a novel 5D representation. 1/6

I think about the field of 3D human pose, shape, and motion estimation as having three phases. 1: Optimization. 2: Regression. 3: Reasoning. With #PoseGPT, we are just entering phase 3. I summarize the coming paradigm shift in this blog post:

Today is my last day at #Amazon. I joined over 4 years ago as a Distinguished Amazon Scholar (20% time) through the acquisition of Body Labs. It has been an amazing experience, I’ve learned a lot, and will always be grateful for the opportunity. (1/8).

Thanks to everyone at #CVPR2024 who's come up and thanked me for my blog and social media posts. Knowing they've been useful to you encourages me to keep sharing. One thing I love about CVPR is meeting new people so don't be shy -- come say hi. And, yes, we can take a selfie :).

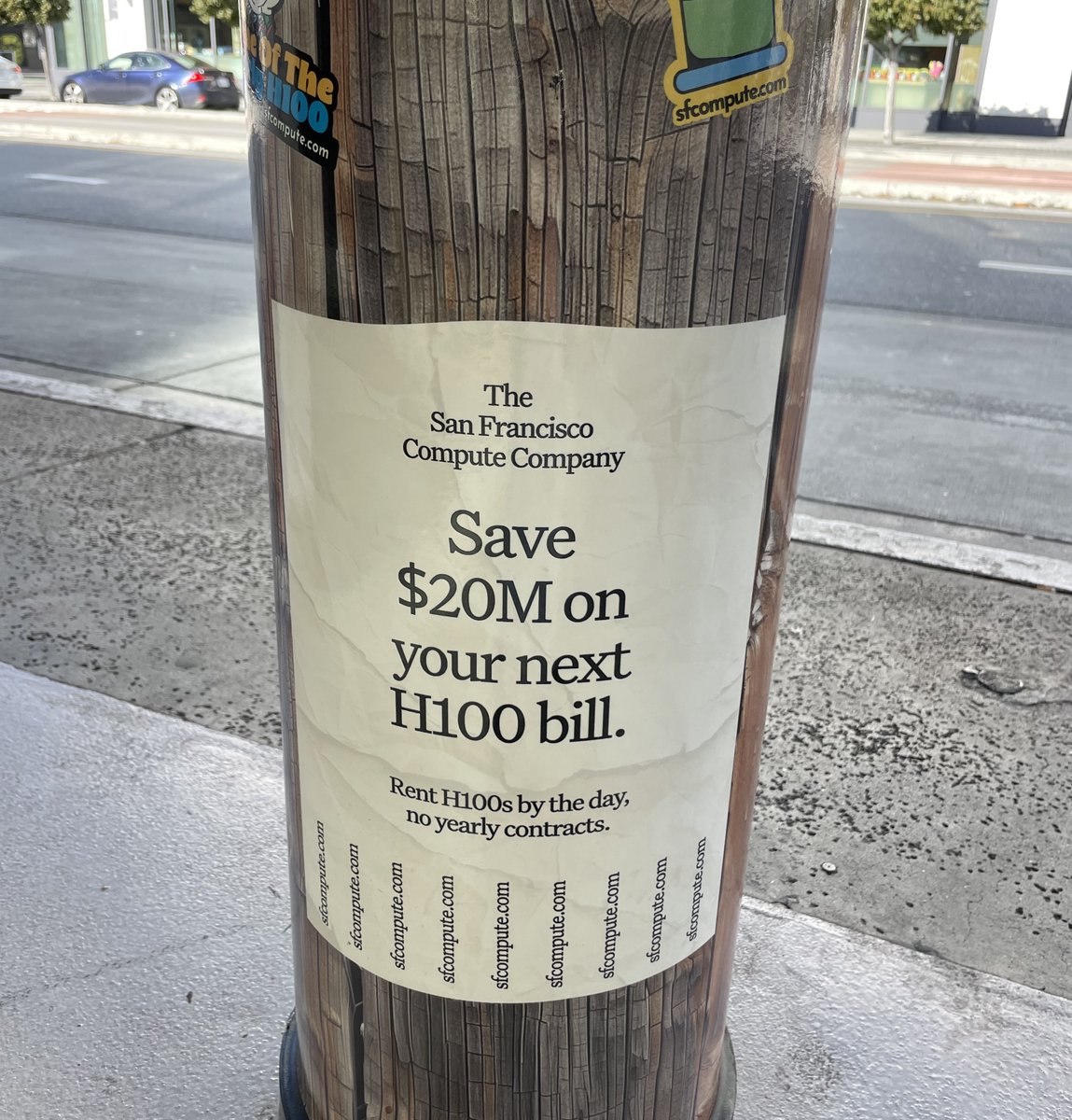

Only in #SanFrancisco does this ad make sense on a bullock at the train station. A key rule of advertising is to know your customer. Nailed it. I'm thinking, "yes, indeed, I do want to save $20M on my next H100 bill".

The German Chancellor, Angela Merkel, visited @MPI_IS today to hear about @Cyber_Valley - virtually of course. Here she is getting a tour of the @PerceivingSys capture hall. It was fun, she asked great questions, and the team was awesome!

@jbhuang0604 All good points, but let me add one more. Use the work yourself. Build on it. In this way you teach others how it is useful. If it isn’t foundational for you, why would it be for others?

The name has changed but it remains the world's first 3D human foundation model. ChatPose (formerly PoseGPT) has a new name at the request of the #CVPR2024 reviewers. Same great work from @meshcapade and @PerceivingSys. Final #CVPR2024 version on arXiv:

Should you do research in an AI startup? Does it burn someone else's money and your equity? Or is it the key to success? If you do it, how do you manage it? Drawing on experiences at Xerox PARC, Amazon, Body Labs, & @meshcapade, I try to shed some light:

BEV (#CVPR2022) computes all the 3D people in an image in one shot, placing them all appropriately in depth. The key novelty is an imaginary 2D “Bird’s-Eye-View” (BEV) representation that reasons about the body centers in depth. (1/8)

Is it my imagination or are the ads longer than the talks at #ECCV2020? It changes the character of the scientific meeting. I love an expo, but let the science be ad-free. Let's call this a worthwhile experiment that failed and let's not do it again.

I wanted to build a 4D body scanner at Brown and wrote an NSF proposal with a colleague to fund it. We got high scores for everything but the final analysis was that they didn't think anyone needed 4D body scans. That gave me the kick to move to MPI where I could pursue my dream.

Our highlight in 2013 was the @PerceivingSys new state-of-the-art 4D body scanner. @Michael_J_Black & his team set up the first version that year. Since then, many prominent people have stood in it. Only recently, we captured the motion of the Vice-President of the EU Commission

I entered "Estimating realistic 3D human avatars in clothing from a single image or video". In this case, it made up a fictitious paper and associated GitHub repo. The author is a real person (@AlbertPumarola) but the reference is bogus. (2/9)

Is synthetic data “all you need” to train 3D human pose and shape (HPS) regressors? Is the field making progress? What algorithmic decisions matter? To address these questions, we present BEDLAM (#CVPR2023 highlight paper), a synthetic dataset of 3D humans. 1/11

@OHilliges My wife has had #MECFS since the 1990s. One doctor told her "You're tired? We're all tired. Go home and have babies." On the other hand, the head of rheumatology at Stanford told her "Don't let anyone tell you that you're not sick." More research is desperately needed.

Avatars are central to the success of the #metaverse and #metacommerce. We need different #avatars for different purposes: accurate #3D digital doubles for shopping, realistic looking for #telepresence, stylized for fun, all with faces & hands. @meshcapade makes this easy. (1/8)

BITE (#CVPR2023) reconstructs 3D dog shape and pose from one image, even with challenging poses like sitting and lying. Such poses result in occlusion and deformation. Key idea: leverage ground contact to better estimate pose and shape. 1/6

Realistic 3D human animation is hard. Goal: automate it using only speech. Given a speech signal as input, TalkSHOW (#CVPR2023) generates realistic, coherent, and diverse holistic 3D motions, that is, the body motion together with facial expressions and hand gestures. (1/8)

Yesterday was my birthday and the folks at @meshcapade made me this wonderful movie. I love fonts and this is the best font ever! I'm going to call it "Avatar". It's #SMPL to make me happy.

Congratulations @YaoFeng1995 for successfully defending your PhD thesis on "Learning Digital Humans from Vision and Language"! DECA, PIXIE & SCARF advanced the capture of faces, full bodies, & bodies in clothing, while ChatPose connects LLMs with 3D humans for the first time.

We've seen rapid progress on generating human motion from text descriptions. But to be really useful, animators need timeline control. With our new work, one can control when multiple actions occur and these actions can even overlap. Great #Nvidia internship by @MathisPetrovich.

Multi-Track Timeline Control for Text-Driven 3D Human Motion Generation. paper page: Recent advances in generative modeling have led to promising progress on synthesizing 3D human motion from text, with methods that can generate character animations from

Thirty years ago I dreamt of computers that could see us. Understand us. Move like us. Look like us. And ultimately be like us. So I started building. And I haven’t stopped. @meshcapade is that dream come true. We’re building the future. And the future is now.
