Greg Kamradt

@GregKamradt

Followers

35K

Following

12K

Media

771

Statuses

6K

President @arcprize —Founder https://t.co/XK3ITFuCZe —builder/engineer

San Francisco, CA

Joined January 2011

We verified the o3 results for OpenAI on @arcprize. My first thought when I saw the prompt they used to claim their score was, "That's it?" It was refreshing (and impressive) to see such a simple prompt: "Find the common rule that maps an input grid to an output grid"

50

139

2K

"Chief Automation Officer" - A scrappy semi-technical generalist. they've been 10x'd with LLMs, cursor, zapier ai, etc. @stephsmithio called it out back in '23. who's doing this as a service?

97

114

2K

The OpenAI team was amazing. Great support from @sama and @markchen90 . The main message from them was “we want more”. ARC-AGI-2 coming Q1 ‘25

45

53

2K

need some alpha feedback on a weekend app. inspired by @deedydas's tweet exploring a repository with an LLM. what should I cut?

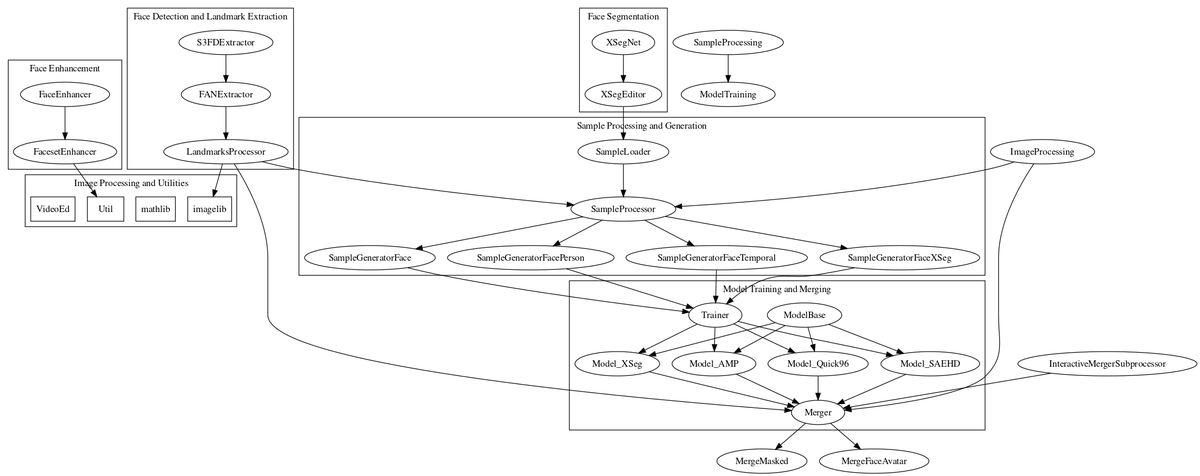

How to understand GitHub code repos with LLMs in 2 mins. Even after 10yrs of engineering, dissecting a large codebase is daunting.
1. Dump the code into one big file.
2. Feed it to Gemini-1.5Pro (2M context).
3. Ask it anything.

Here, I dissect DeepFaceLab, a deepfake repo. 1/11

20

19

272
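A minimal sketch of step 1 above ("dump the code into one big file"), assuming a local checkout of the repo. The function name `dump_repo`, the extension filter, and the `=====` header format are illustrative choices, not anything from the original thread.

```python
from pathlib import Path


def dump_repo(root: str, extensions: tuple[str, ...] = (".py", ".md")) -> str:
    """Concatenate matching files under `root` into one string.

    Each file is preceded by a header line with its relative path so the
    model can tell where one file ends and the next begins.
    """
    root_path = Path(root)
    parts = []
    for path in sorted(root_path.rglob("*")):
        # Skip directories and files whose extension is not in the filter.
        if path.is_file() and path.suffix in extensions:
            rel = path.relative_to(root_path).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"===== {rel} =====\n{text}")
    return "\n\n".join(parts)
```

The returned string can be written to a single file and pasted into a long-context model. Filtering by extension keeps binaries and build artifacts out of the prompt.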

OpenAI allows themselves to be #2 on the leaderboard for a max of ~3 weeks. GPT-5 by July 11.

100

51

1K

Notes from the conversation between @sama and @kevinweil. "With o1 (and its predecessors) 2025 is when agents will work."
* How close are we to AGI? After finishing a system they would ask, "In what way is this not an AGI?" The word is overloaded. o1 is level two AGI.

37

130

1K

"Give instructions to a computer on how to do this task". This single line from @deedydas is all you need for meta-prompting. Before you write a godzilla prompt, ask claude to break it down into a set of instructions. That's how you split your task into pieces

14

51

945

The LangChain Cookbook: Part 1 - The Fundamentals. This @LangChainAI tutorial will ramp you up to the 7 core concepts of building apps powered by language models. You’ll learn LC's Schema, Models, Prompts, Indexes, Memory, Chains, Agents. 150K views on YouTube. Code below!

30

128

912

Details on @OpenAI's new assistants RAG. *Hard* creep into vectorstore territory. Thoughts:
* Default chunk overlap of 50%, super interesting
* Metadata filtering, super interesting how this dips into vectorstore territory
* Unsure about what chunking method they use - 800 tokens

40

121

915
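To make the "800 tokens, 50% overlap" numbers above concrete, here is a minimal sliding-window sketch. It is not OpenAI's chunking method (the tweet itself says that is unknown). The function name `chunk_tokens` is hypothetical, and the tokens are assumed to be already produced by some tokenizer.

```python
def chunk_tokens(
    tokens: list[str], chunk_size: int = 800, overlap: int = 400
) -> list[list[str]]:
    """Split `tokens` into windows of `chunk_size` that share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a window has reached the end of the input.
        if start + chunk_size >= len(tokens):
            break
    return chunks
```

With a 50% overlap every token (except near the edges) lands in two chunks, so the index is roughly twice as large as with no overlap.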

DeepSeek @arcprize results - on par with lower o1 models, but for a fraction of the cost, and open. pretty wild.

Verified DeepSeek performance on ARC-AGI's Public Eval (400 tasks) + Semi-Private (100 tasks).

DeepSeek V3:
* Semi-Private: 7.3% ($.002)
* Public Eval: 14% ($.002)

DeepSeek Reasoner:
* Semi-Private: 15.8% ($.06)
* Public Eval: 20.5% ($.05)

(Avg $ per task)

31

99

909

Very excited to represent @arcprize on OpenAI's live stream. It’s been a WILD past few weeks. Tell ya the story later

46

23

817

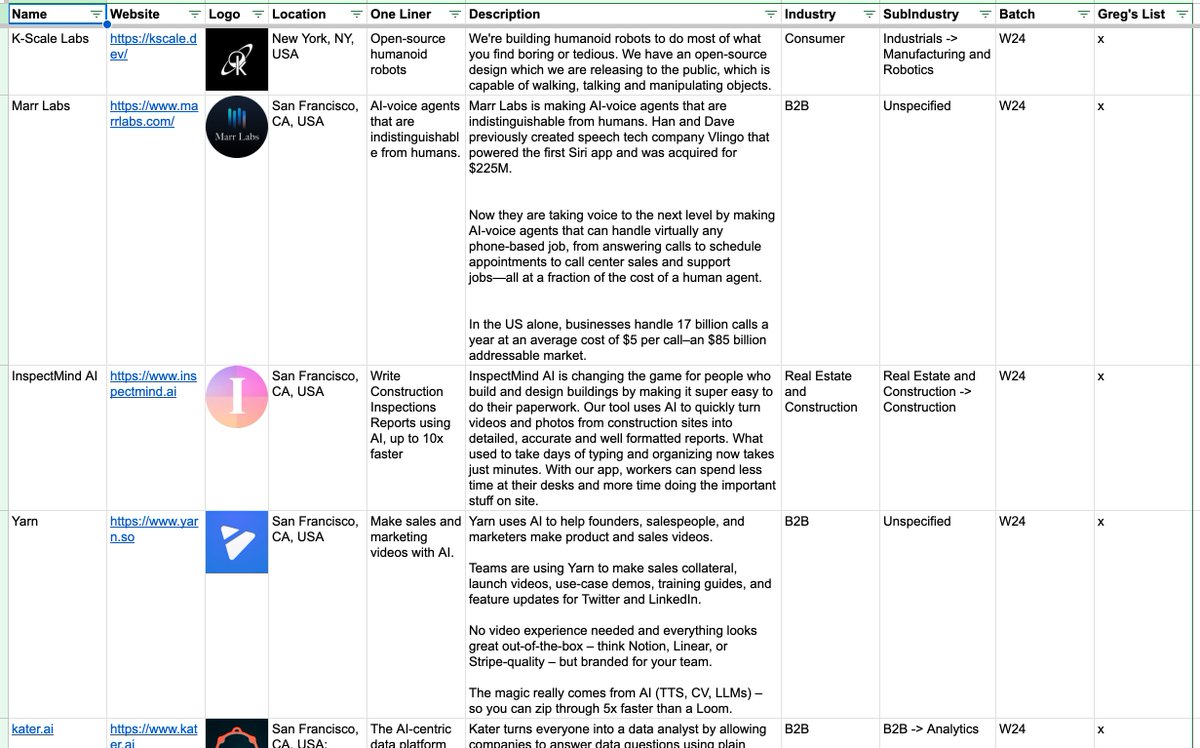

YC Batch W24 - What're the AI trends? 247 companies just presented at demo day, I looked at them all to see where AI is going. My favorites at the end. Link to full list below. Popular Categories:
* Voice Agents (6): @marrlabs, @retellai, @OpenCall_AI, @usearini, @hemingway,

21

73

523

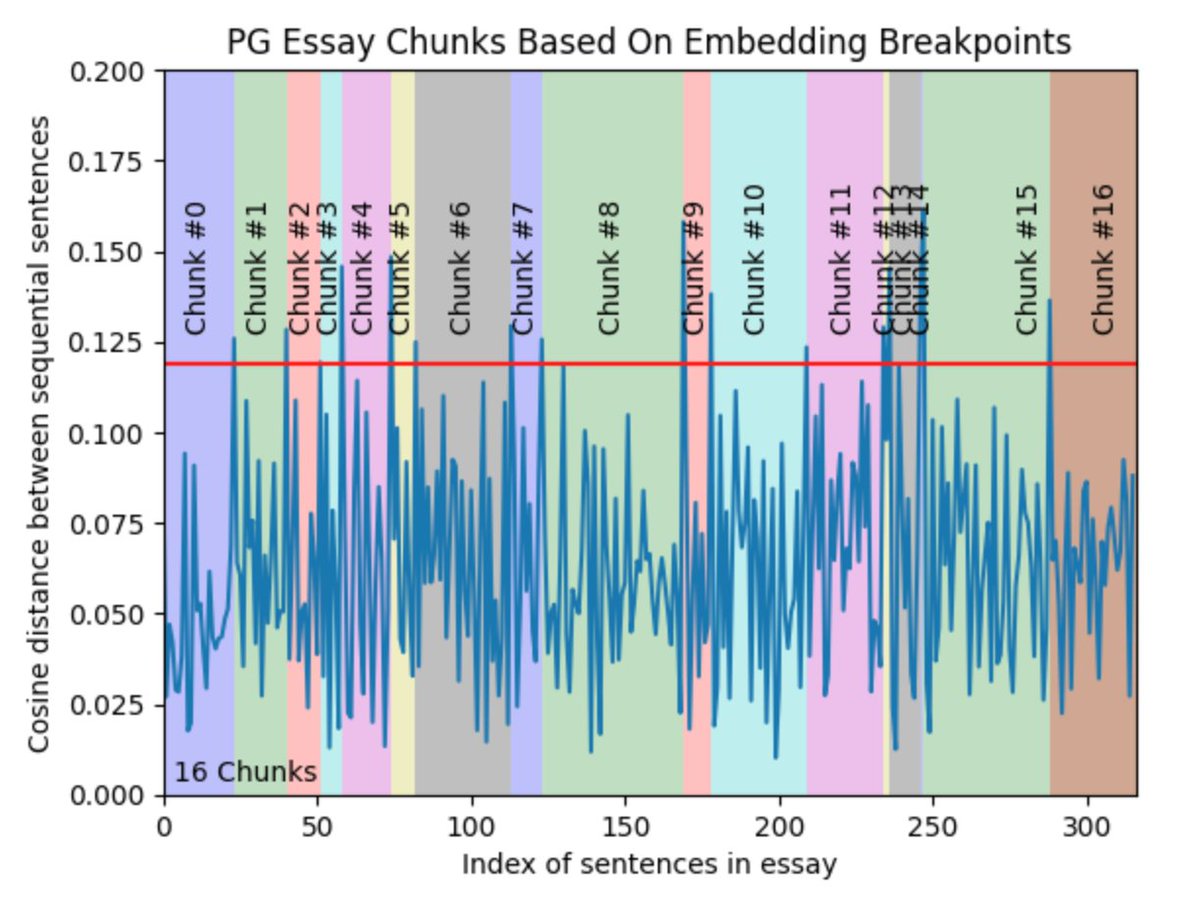

Semantic Chunking? I was inspired by this tweet and wanted to try embedding-based chunking. Hypothesis: Using embeddings of individual sentences, you can find semantic "break points" by measuring distances of sequential sentences. TLDR: It's not perfect, but some signal

Weird idea: chunk size when doing retrieval-augmented generation is an annoying hyperparam & feels naive to tune it to a global constant value. Could we train an e2e chunking model? i.e. system that takes in a long passage, and outputs a sequence of [span, embedding] pairs?

40

56

491
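The hypothesis above (find "break points" by measuring distances between sequential sentence embeddings) can be sketched as follows. This is an illustration under stated assumptions, not the notebook's code: `embed` is a toy word-count stand-in for a real embedding model, and the fixed `threshold` is an arbitrary choice.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9']+", sentence.lower()))


def cosine_distance(a: Counter, b: Counter) -> float:
    """1 - cosine similarity; 1.0 when either vector is empty."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return 1.0 if norm == 0 else 1.0 - dot / norm


def semantic_chunks(sentences: list[str], threshold: float = 0.8) -> list[list[str]]:
    """Start a new chunk wherever consecutive sentences are farther apart than `threshold`."""
    if not sentences:
        return []
    vectors = [embed(s) for s in sentences]
    chunks = [[sentences[0]]]
    for prev, cur, sentence in zip(vectors, vectors[1:], sentences[1:]):
        if cosine_distance(prev, cur) > threshold:
            chunks.append([sentence])  # large jump: treat as a break point
        else:
            chunks[-1].append(sentence)
    return chunks
```

Swapping `embed` for a real sentence-embedding model and picking the threshold from the distribution of distances (for example a high percentile) would be the natural next step.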

working on a demo of a bot you talk to. the flow:
1. speech to text (@DeepgramAI)
2. LLM (@GroqInc)
3. text to speech (early access w/ @DeepgramAI)

Groq speed is a bit variable, but overall really quick. Deepgram is super fast when you stream results (time to first data <280ms)

38

53

431

The LangChain Cookbook: Part 2 - The Use Cases. This @LangChainAI video will cover the 9 use cases & lego blocks to build your own AI applications. You’ll learn Summarization, Q&A, Extraction, Evaluation, Querying Data, Code Understanding, APIs, Chatbots + Agents. Code Below!

15

56

419

Just got done testing o1-preview and mini on @arcprize . tbh the results are surprising. sharing tomorrow.

23

11

418

I recently had a project to parse a ~1hr podcast for topics, ideas, sections etc. ~12K tokens. Then generate a few sentences to summarize each section. 300+ episodes. How would you approach this problem while keeping tokens down? I did it in a few passes with @LangChainAI, cont.

34

41

398

Just wrote a massive notebook on 5 @LangChainAI advanced retrieval methods. You need to pick the right one but massively helpful in the right situations. Here's the TLDR:
1. Multi Query - The Question Panel. Given a single user query, use an LLM to synthetically generate multiple

27

35

400

Extract the tools & technologies a company is using from their career page. Using @LangChainAI and @veryboldbagel’s Kor, I was easily able to scale this to 1.5K tech companies (20K openings parsed)

16

41

390

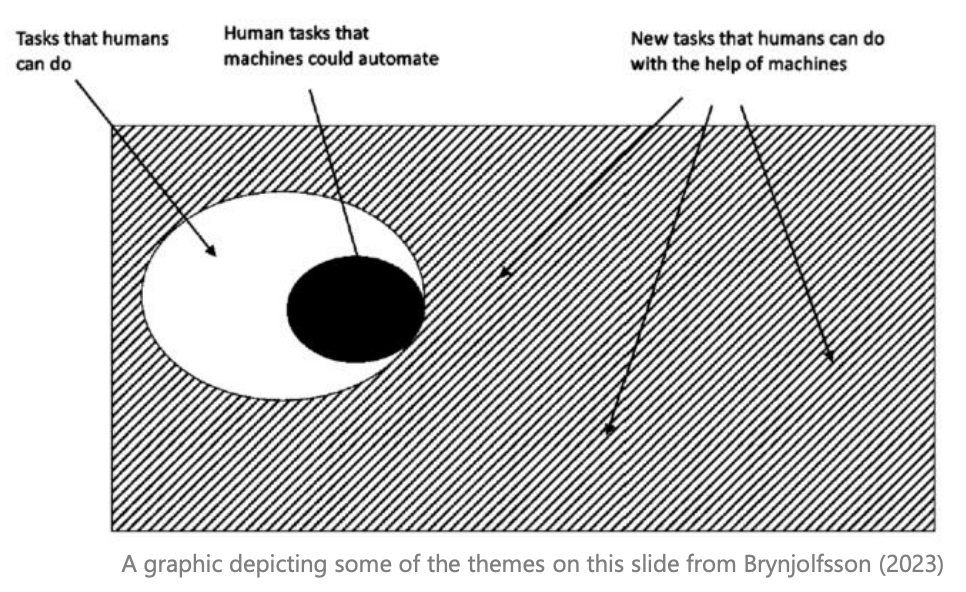

I thought this was a cool question/tweet from @yoheinakajima. Then I saw this diagram which made me think of it. As the dark area grows (more tech is created):
1. The dark area consumes more white space (it eats up jobs)
2. The white space grows into the grey (more jobs get

I don’t usually share random musings I throw at ChatGPT but this was kinda interesting, it’s a list of technologies, jobs it killed, and jobs it created:
1. Agricultural Machinery
• Jobs Lost: Traditional farming labor, such as manual crop harvesting.
• Jobs Created: Engineering,

12

76

383

If you're using LLMs to write code (like 90% of us). Prompt it to write the test *first*, then the actual code. @SullyOmarr shares why it works for him

9

29

362

visualizing text splitting & chunking strategies. ChunkViz.com. I thought I remembered a tool to visualize text chunking, but I couldn't find it, so I built one. I didn't realize it would be so visually pleasing to tinker with. 4 different @LangChainAI splitters featured

I remember seeing a chunk visualizer a while back. It would highlight the chunks found in your text according to an algorithm, chunk size, overlap you specified. Can't find the link - anyone have it? (not token visualizer, chunks). The end result would look like this picture

19

61

357

I did 30 interviews in the "AI With Work Data" industry and distilled the 5 levels of LLM features I've seen. (Level 5 is the hardest to get right).
1. General Chat Bot - Give employees a way to chat with a naive LLM. Basically bring ChatGPT to Slack.
2. Question Answer & Better

I did 30 interviews with founders and end-users on "Chat-With-Your-Internal-Business-Data". Why? It was super difficult pre-LLMs. Now: massive opportunity, and really cool tech. 100+ hours of research going into a report. Most reports are boring, so I scripted a trailer for this one.

17

39

338

AI Trends I'm interested in 4/5/2023:
1. Managed Retrieval Engines - Getting the *right* context to your AI is tougher than it sounds. @Metal_io announced a @LangChainAI integration. I'll be watching.
2. Plugin Developer Monetization

(full thoughts in a notion doc below)

10

40

308

Made a chunk visualizer in 10 minutes w/ gpt-4 help. Hope I find a link to save some work but I'm still blown away at the time-to-value for quick ideas. I'm building this to help people visualize how different chunking algorithms + parameters work

I remember seeing a chunk visualizer a while back. It would highlight the chunks found in your text according to an algorithm, chunk size, overlap you specified. Can't find the link - anyone have it? (not token visualizer, chunks). The end result would look like this picture

19

32

293

Writing markdown in @cursor_ai feels like... what writing should be like? even blog posts, cursor decreases the time between thought and words on a page. word suggestions, link autofill, bullet point formatting autofill, outline suggestions, chat in blog post, many good things.

25

11

289

I absolutely love how model price reductions are material. GPT-3.5 Pricing (per 1K tokens):
* Mar '23: $0.002
* Jul '23: $0.0015 (-25%)
* Nov '23: $0.001 (-33%)
* Jan '24: $0.0005 (-50%)

GPT-3.5 is 4x cheaper than 10 months ago.

Great news for @OpenAIDevs, we are launching:
- Embedding V3 models (small & large)
- Updated GPT-4 Turbo preview
- Updated GPT-3.5 Turbo (*next week + with 50% price cut on Input tokens / 25% price cut on output tokens)
- Scoped API keys

17

32

288
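The percentages and the "4x cheaper" figure in the pricing list above can be checked with a few lines of arithmetic. The prices are the ones quoted in the tweet; the variable and function names are illustrative.

```python
# GPT-3.5 price per 1K tokens at each date, as quoted in the tweet above.
prices = [
    ("Mar '23", 0.002),
    ("Jul '23", 0.0015),
    ("Nov '23", 0.001),
    ("Jan '24", 0.0005),
]


def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, in percent."""
    return (new - old) / old * 100


# Step-by-step reductions between consecutive price points, rounded to whole percent.
changes = [
    round(percent_change(a[1], b[1])) for a, b in zip(prices, prices[1:])
]

# Overall ratio between the first and last price.
overall_ratio = prices[0][1] / prices[-1][1]

print(changes)        # step reductions in percent
print(overall_ratio)  # how many times cheaper the last price is
```

Each step is measured against the previous price, which is why three cuts of 25%, 33%, and 50% compound to a 4x reduction rather than summing to more than 100%.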

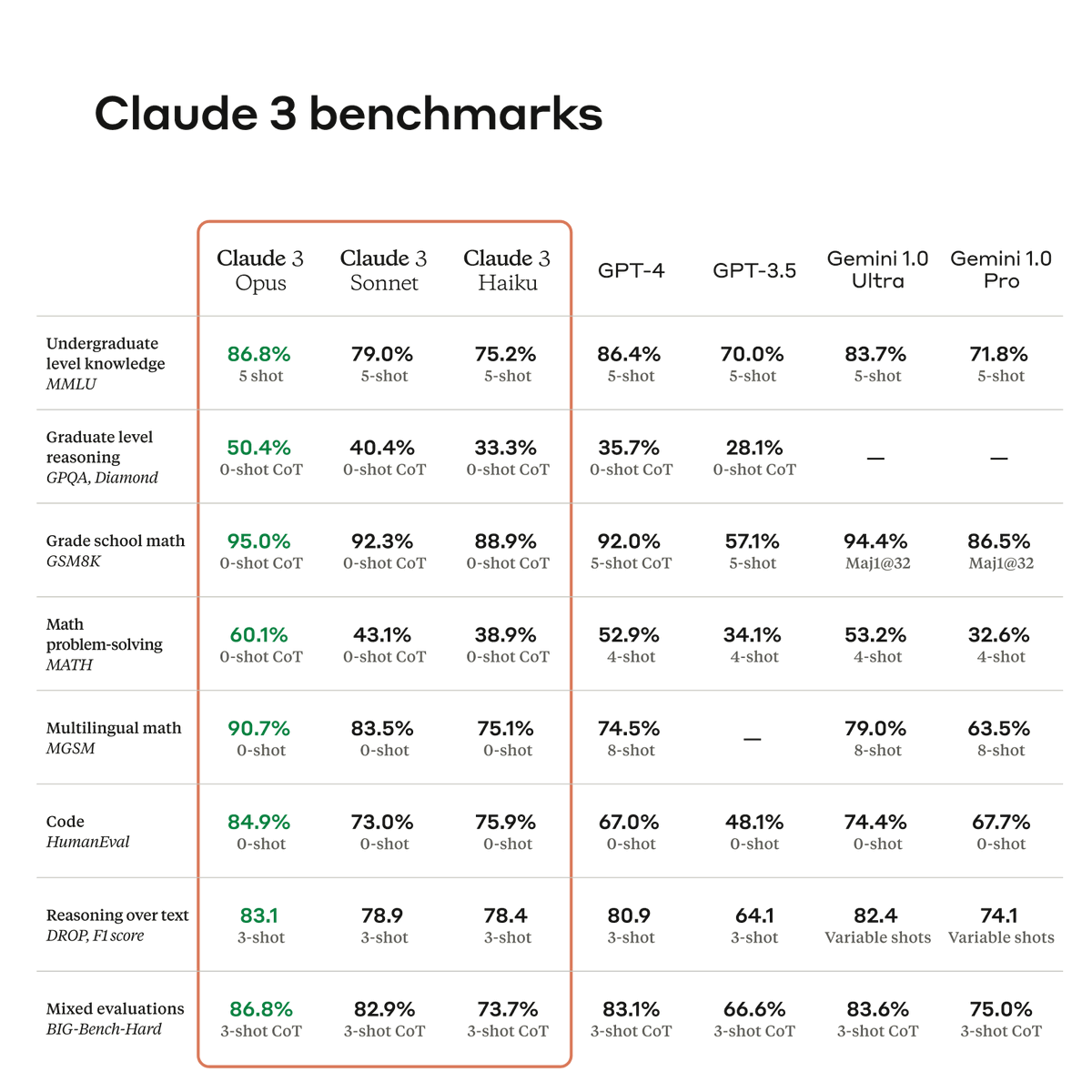

Anthropic says every benchmark is better than gpt-4. Can someone confirm their performance increases? Both eval and vibe test.

Today, we're announcing Claude 3, our next generation of AI models. The three state-of-the-art models—Claude 3 Opus, Claude 3 Sonnet, and Claude 3 Haiku—set new industry benchmarks across reasoning, math, coding, multilingual understanding, and vision.

39

22

280

“Is coding dead?” I’ve had the opposite thing happen to me. The more I use AI to code, the more I want to learn new frameworks and techniques. My technical confidence is (naively) at an all-time high right now.

the reason I'd love to know how to code is to understand the code that ai writes for me. understanding what the ai-written code does *exactly* is huge for debugging and customization. can always ask ai to explain it to you - but just so much easier to just get it right away.

37

5

256

Agentic Chunking? Ok taking semantic chunking further, I asked myself how would I chunk a document by hand?
1. Get propositions (cool concept)
2. For each proposition, ask the LLM: should this be in an existing chunk, or make a new one?

Results are slow/expensive, but cool

Semantic Chunking? I was inspired by this tweet and wanted to try embedding-based chunking. Hypothesis: Using embeddings of individual sentences, you can find semantic "break points" by measuring distances of sequential sentences. TLDR: It's not perfect, but some signal

12

28

250
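The two-step loop described above can be sketched with the LLM call abstracted behind a callable. Everything here is hypothetical scaffolding: `agentic_chunk`, the `Decider` type, and especially `keyword_decider`, which is a toy stand-in for asking an LLM whether a proposition belongs in an existing chunk.

```python
from typing import Callable, Optional

# A decider takes a proposition and the current chunks, and returns the index
# of the chunk to join, or None to start a new chunk. In the approach described
# above this would be an LLM call; here it is pluggable.
Decider = Callable[[str, list[list[str]]], Optional[int]]


def agentic_chunk(propositions: list[str], decide: Decider) -> list[list[str]]:
    """Assign each proposition to an existing chunk or open a new one."""
    chunks: list[list[str]] = []
    for prop in propositions:
        idx = decide(prop, chunks)
        if idx is None or not 0 <= idx < len(chunks):
            chunks.append([prop])  # no suitable chunk: make a new one
        else:
            chunks[idx].append(prop)
    return chunks


def keyword_decider(prop: str, chunks: list[list[str]]) -> Optional[int]:
    """Toy stand-in for the LLM: join the first chunk sharing a word longer than 3 letters."""
    words = {w for w in prop.lower().split() if len(w) > 3}
    for i, chunk in enumerate(chunks):
        chunk_words = {w for p in chunk for w in p.lower().split() if len(w) > 3}
        if words & chunk_words:
            return i
    return None
```

Because the decider runs once per proposition, a real LLM-backed version makes one model call per proposition, which is consistent with the "slow/expensive" note above.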

this thread blew up more than I thought. i collected 31 different companies, tools and oss projects all in the slack + knowledge management space. working on synthesizing this for a project. but happy to share the in-progress spreadsheet - shoot me a DM if you want it

Who's doing Slack as a knowledge base + LLMs? Organizing tribal knowledge in slack history for q&a, summaries, etc.?

15

31

246

I've done 1000s of hours of manual data gathering in my day. This time I needed the start dates of 100 universities for Fall '24. This was 3 lines of code with @perplexity_ai + @AnthropicAI sonnet. We're truly in a golden era of productivity

18

20

245

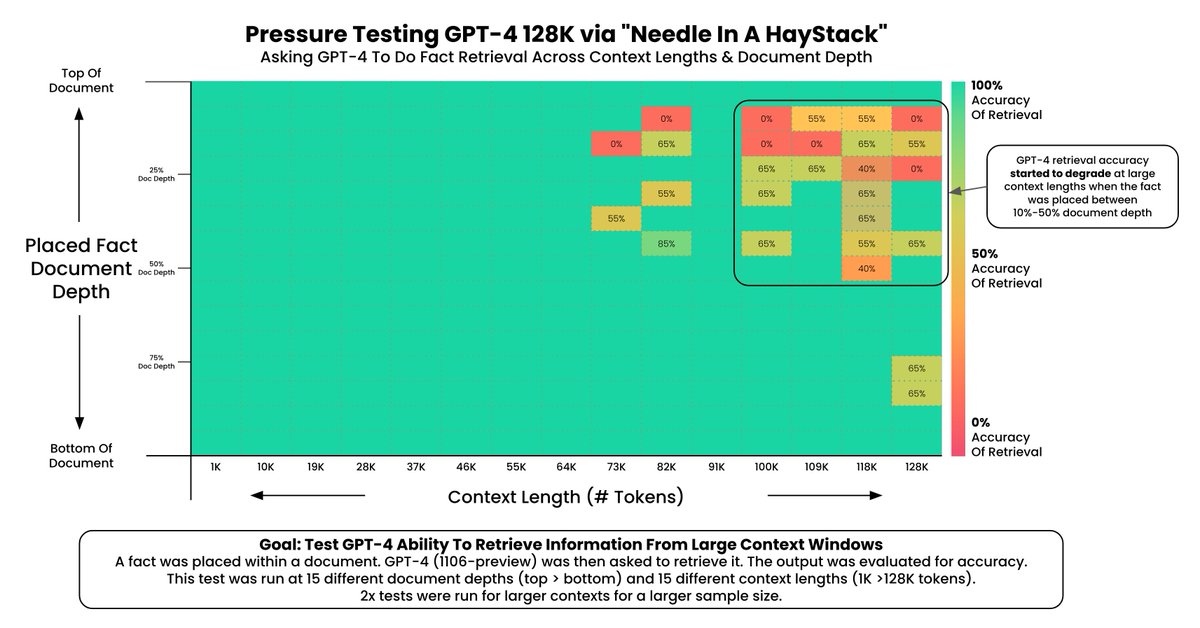

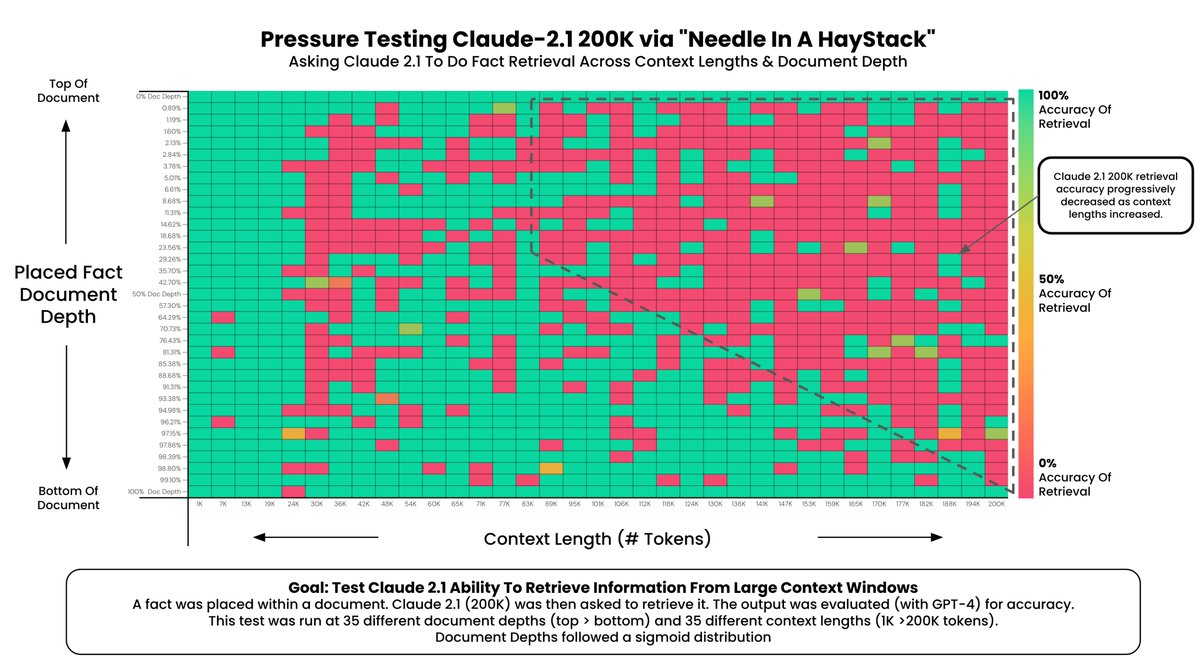

The Needle In A Haystack visualizations ended up getting 2.5M views. @DrJimFan asked for the code that created them. Here's an overview of the code (linked below), viz, and design decisions that went into them.

@GregKamradt Great job Greg! Could you also share code that generates the chart?

4

24

245

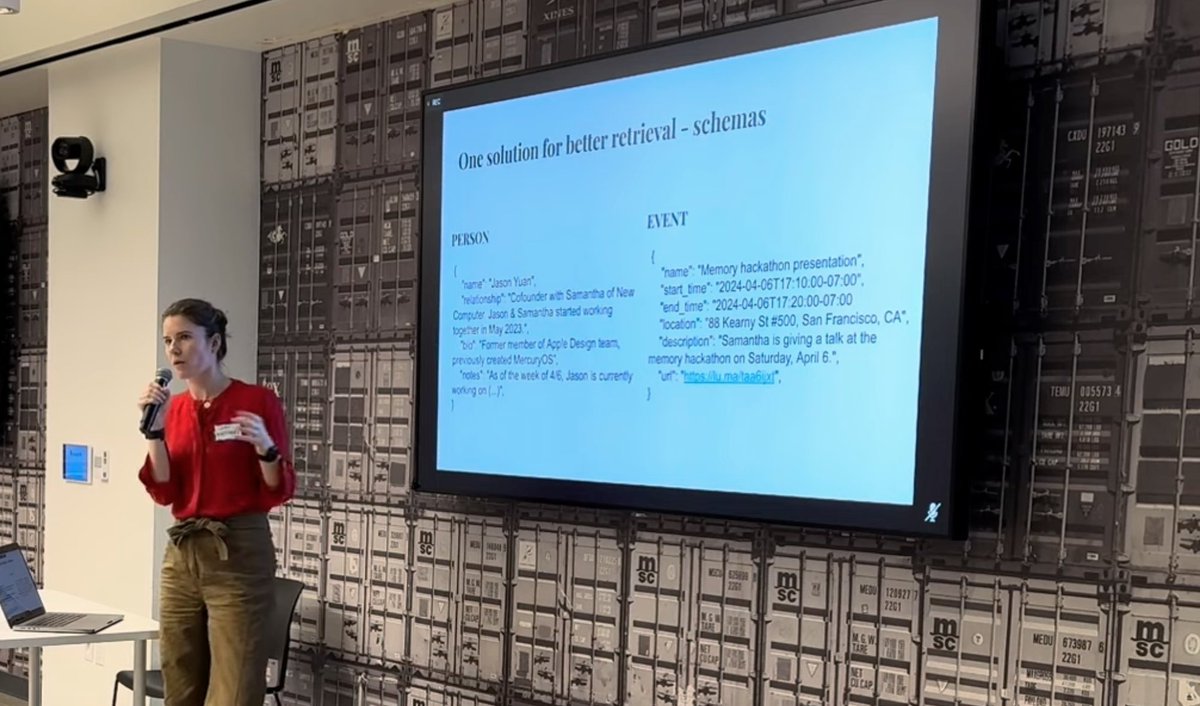

Just listened to @sjwhitmore's opener at the memory hackathon. She is one of the top experts on this so there was high signal:noise - I could have listened to another 2 hours. Hard part with memories and LLMs:
* What do you store?
* How do you store it?
* How do you keep low

here's my talk from the Memory Hackathon today - i spoke a bit about some of the ways we think about developing memory for Dot. always down to chat if this is something you're thinking lots about also!

6

19

232

I needed a playground app I could test a bunch of AI Engineering on. So I pulled 700 episodes from @myfirstmilpod and extracted 10K business ideas, stories, quotes, and products. I called it MFMVault. I woke up to it being featured on MFM with @dharmesh, so cool!

17

11

236

my stack right now:
* @nextjs
* @Railway
* @supabase (db and vectorstore)
* @LangChainAI / smith
* @DeepgramAI
* @AnthropicAI / @OpenAI
* @cursor_ai
* @meilisearch - new favorite

18

13

222

After doing 15 interviews - one thing is becoming clear. The most impactful AI workflows aren't the result of an overly technical, weeks-to-build, monster application. It's a simple LLM helping in the right place at the right time for the right person.

I'm on the hunt for *actual* AI use cases that make an impact. We don't hear enough about the tangible impact AI adoption has in the workplace. Like Mike Knoop saying Zapier earns $100K ARR per month with AI + CRM. I want to highlight 10 more use cases this month. Survey below

17

17

221

I demo'd a method to summarize an entire book w/o sending 100% of the tokens to an LLM. The results weren't bad! Then @musicaoriginal2 DM'd saying he was open to adding it to @LangChainAI. @RLanceMartin merged today. So cool to see this go full circle.

How to summarize a book without sending 100% of your tokens to an LLM. I tested out a fun method on "Into Thin Air" (~140K tokens). The results were surprisingly good. Definitely enough to refine and keep working. Here's a speed run through the process:

10

28

219