Bálint Mucsányi

@BalintMucsanyi

Followers: 244

Following: 278

Statuses: 62

ELLIS & IMPRS-IS PhD Student

Tübingen, Germany

Joined May 2013

RT @BlackHC: Have you wondered why I've posted all these nice plots and animations? 🤔 Well, the slides for my lectures on (Bayesian) Activ…


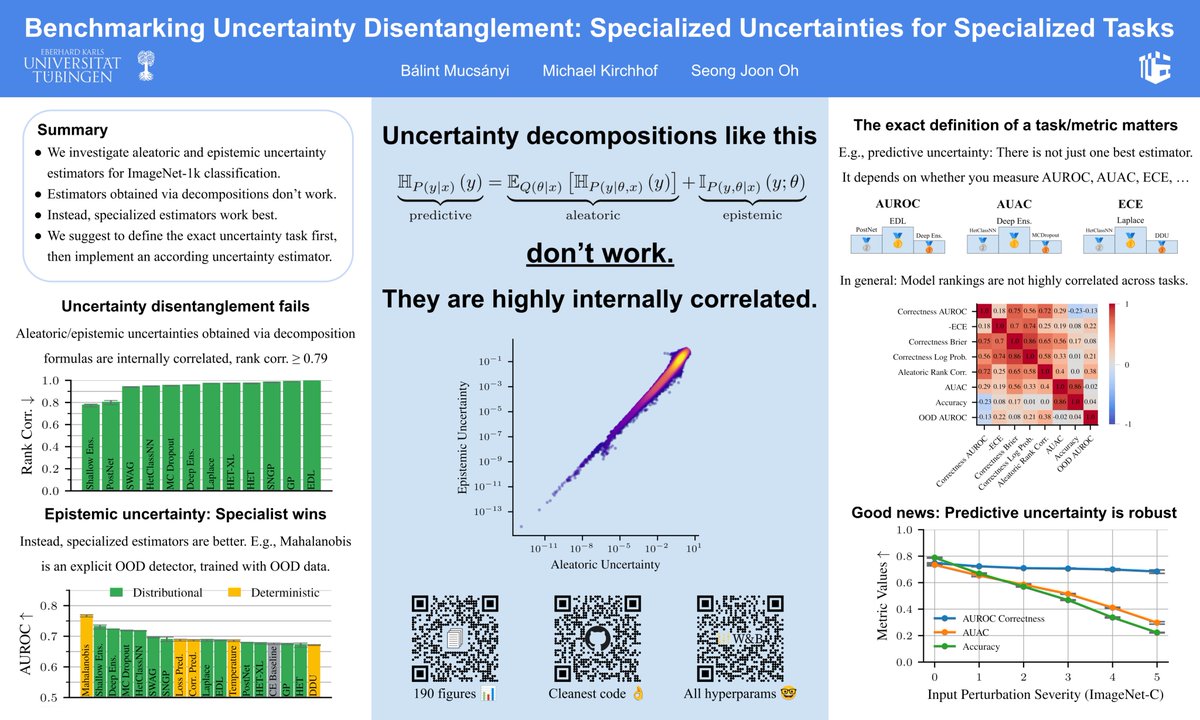

Excited to present our spotlight paper on uncertainty disentanglement at #NeurIPS! Drop by today between 11 am and 2 pm PST at West Ballroom A-D #5509 and let's chat!

Thrilled to share our NeurIPS spotlight on uncertainty disentanglement! ✨ We study how well existing methods disentangle different sources of uncertainty, like epistemic and aleatoric. 🧵👇 1/7 📖: 💻:


RT @mkirchhof_: Throughout my PhD, I've found one basic trick to read papers in less than 30 minutes but with maximum utility. It boils dow…


@BlackHC @mkirchhof_ @coallaoh ... latter acts on a pre-trained net, so there's no early stopping criterion to choose. During eval, we try all estimators on all tasks.


@BlackHC @mkirchhof_ @coallaoh The GMM neg. log density is also used as an estimate for DDU during eval, just like the max prob. or softmax of the predictive


@joh_sweh @mkirchhof_ @coallaoh We consider a classification setting where the predictive entropy is a natural notion of the total uncertainty in a distribution over probability vectors. I recommend the PhD thesis of @BlackHC for a nice overview.
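
To make the "predictive entropy as total uncertainty" point concrete, here is a minimal sketch. It assumes the distribution over probability vectors is represented by a handful of sampled vectors (for example, ensemble members), and it uses the common information-theoretic split of total entropy into expected entropy plus mutual information. That split, and the names `entropy` and `decompose_uncertainty`, are illustrative assumptions and are not taken from the tweet or the paper's code.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one probability vector."""
    # Terms with p == 0 contribute 0 by convention, so they are skipped.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def decompose_uncertainty(member_probs):
    """Split total uncertainty for a single input.

    member_probs: list of probability vectors, one per sampled model
    (a hypothetical stand-in for a distribution over probability vectors).
    Returns (total, aleatoric, epistemic), all in nats.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])

    # The predictive distribution is the average of the sampled vectors.
    mean_probs = [
        sum(member[c] for member in member_probs) / n_members
        for c in range(n_classes)
    ]

    total = entropy(mean_probs)  # predictive entropy
    aleatoric = sum(entropy(m) for m in member_probs) / n_members  # expected entropy
    epistemic = total - aleatoric  # mutual information (assumed decomposition)
    return total, aleatoric, epistemic


# Two confident members that disagree: the uncertainty is all epistemic.
print(decompose_uncertainty([[1.0, 0.0], [0.0, 1.0]]))

# Two members that agree on a coin flip: the uncertainty is all aleatoric.
print(decompose_uncertainty([[0.5, 0.5], [0.5, 0.5]]))
```

In both toy cases the predictive entropy is log 2, which is why it reads as a total: on its own it cannot tell disagreement between members apart from inherent label noise.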


@BlackHC @mkirchhof_ @coallaoh ... training, when models are more underfit and the disagreement signal might be stronger. I think it's really interesting - are you in Vienna right now by any chance?


@BlackHC @mkirchhof_ @coallaoh ... in our setup on a fully trained model. But yes, our findings agree on the predictive vs aleatoric question, they perform very similarly
