Brian McCullough

@brianmcc

Followers

8K

Following

16K

Statuses

14K

Techmeme Ride Home (podcast) guy. GP: Ride Home Fund. Author: How The Internet Happened. Co-founder of Ride Home Media. Maybe just listen to the Techmeme show.

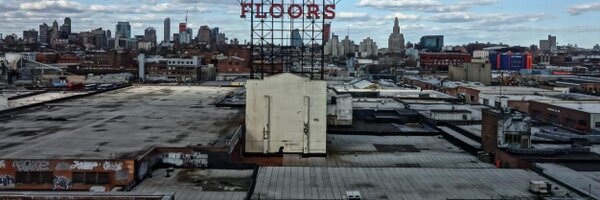

Brooklyn, NY

Joined July 2006

RT @AlexAndBooks_: This is fascinating: Barnes & Noble is adding 60 new stores in 2025 and one major factor in the bookstore revival is pe…

0

84

0

RT @pronounced_kyle: Blake gained 10,000 Twitter followers overnight by using this ONE SIMPLE TRICK: he built the first civilian supersoni…

0

151

0

If you want to take advantage of this, get in touch with me and I’ll put you in touch with them. #proudinvestor

Hey friends, we're excited to announce that an additional 2,000 H100s will be added @sfcompute's on-demand market.

It's the largest* interconnected cluster, from any provider (including hyperscalers), that you can get on a per hour basis. You're not locked in with San Francisco Compute.

If DeepSeek can compete with OpenAI using 2,000 H800s, you too can train a state of the art RL model without ever having to sign a long-term contract that you can't exit. You could have trained DeepSeek-v3 for $4.5m for 1.5mo on SFC or $35m if you could only buy a 1 year contract off market.

This was the dream Alex & I had since our audio model company (Junelark) died because it couldn't procure enough GPUs, and it's what we've been working towards for nearly two years.

Long-term contracts are a trap; they make it so only the biggest of the big can compete in AI. They force startup founders to raise at massive valuations pre-revenue, which dilutes founders and employees and sets them up to fail when they can't raise their next round.

This cluster will roll out over the next few weeks as we scale our infrastructure. Soon you'll be able to access it via our managed Kubernetes service or by reaching out to set up a custom solution.

We're also exploring other ways of partnering with service providers to let them offer GPU-based services, like workers and inference endpoints, without being forced into a long-term contract with a hyperscaler. You no longer need to bet your company on GPU prices to offer GPU-based services.

* We think! If you know of a larger, please correct us!

0

0

2

RT @evanjconrad: Hey friends, we're excited to announce that an additional 2,000 H100s will be added @sfcompute's on-demand market. It's…

0

38

0

Shameless request for any journalists out there that follow me. The @TechmemePodcast is about to celebrate 7 years this March, and we're approaching 2,000 episodes at exactly the same time. Anyone out there want to do a profile of me/the show? I said this was shameless. brian@techmeme.com

0

2

9