Aman Sanger (@amanrsanger)

building @cursor_ai

San Francisco, CA · Joined April 2021

Followers: 18,776 · Following: 698 · Media: 102 · Statuses: 946

At

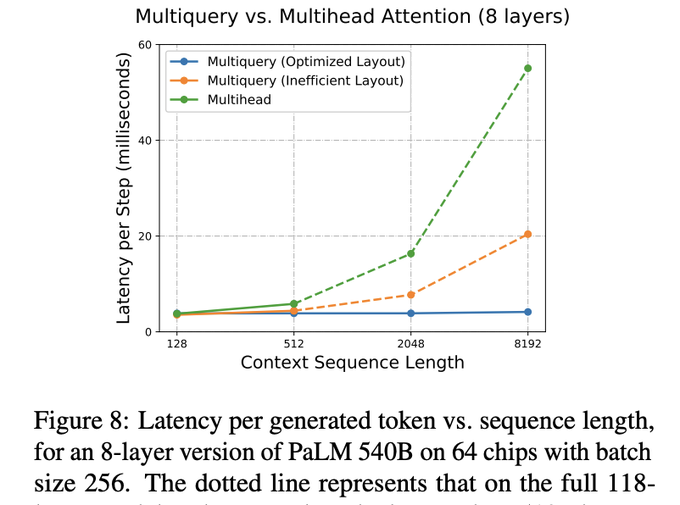

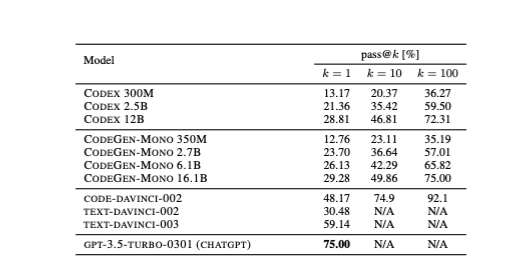

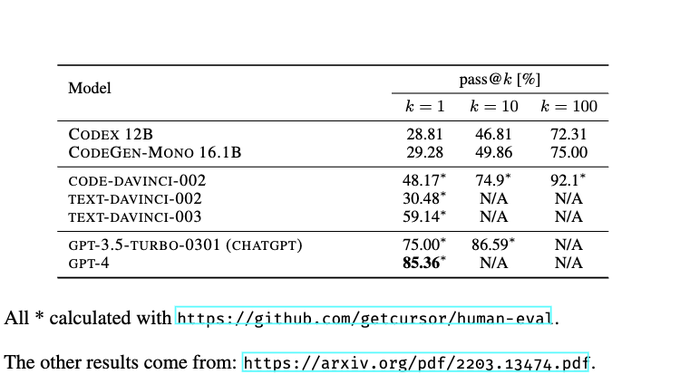

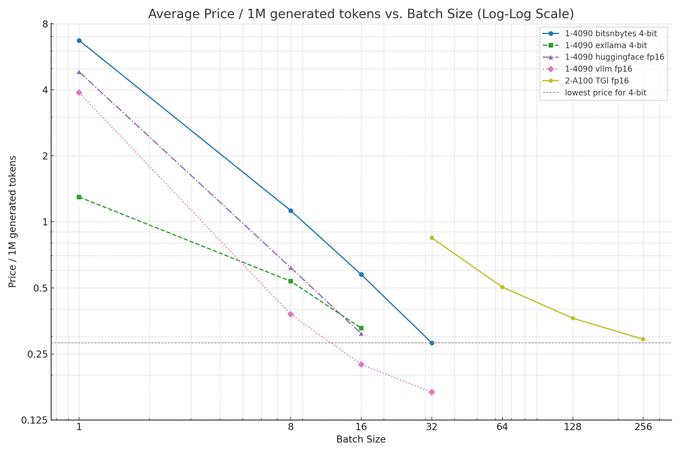

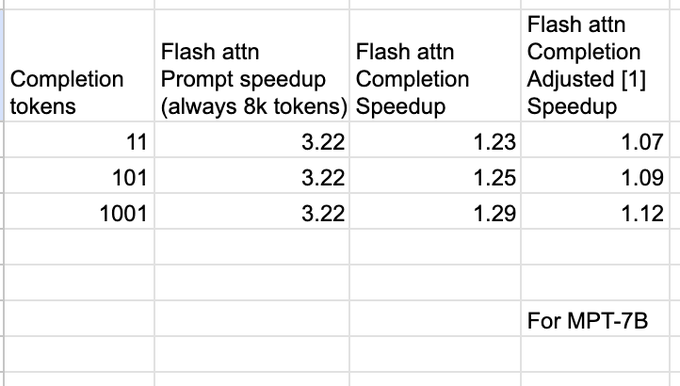

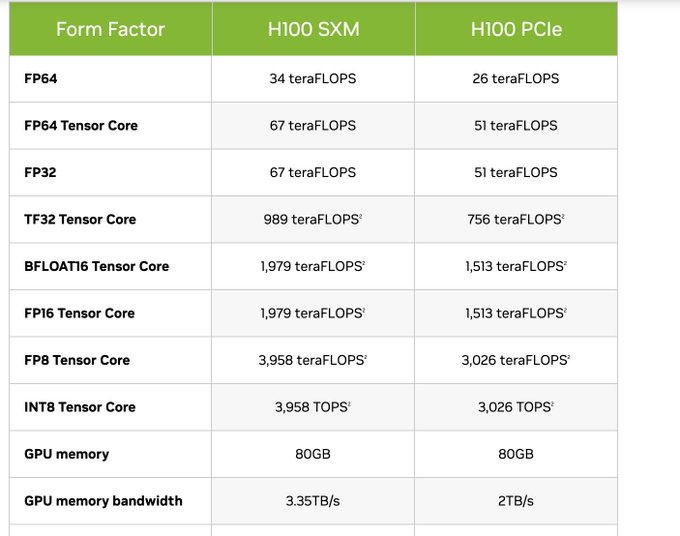

@cursor_ai

, we’ve scaled throughput on GPT-4 to 2-3x over baseline without access to knobs in OpenAI’s dedicated instances [1]

We did this by reverse-engineering expected GPT-4 latency and memory usage from first principles.

Here’s how... (1/10)
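To illustrate what estimating latency and memory "from first principles" can look like, here is a generic roofline-style sketch. It is not Cursor's actual model. GPT-4's architecture is not public, so every shape passed in is a hypothetical input. The sketch also assumes dense weights, standard multi-head attention with no key/value sharing, and a decode step bounded by memory bandwidth rather than compute.

```python
from dataclasses import dataclass


@dataclass
class ModelShape:
    """Hypothetical model dimensions; real GPT-4 values are not public."""
    n_params: float           # parameters read from memory on every decode step
    n_layers: int
    d_model: int              # hidden size (= n_heads * head_dim)
    bytes_per_value: int = 2  # fp16/bf16 weights and KV cache


def kv_cache_bytes_per_token(m: ModelShape) -> int:
    # One key vector and one value vector (factor 2) of width d_model per layer.
    return 2 * m.n_layers * m.d_model * m.bytes_per_value


def decode_step_seconds(m: ModelShape, batch_size: int, context_tokens: int,
                        mem_bandwidth: float) -> float:
    # Memory-bound estimate: each step streams every weight once (shared by
    # the whole batch) plus each sequence's cached keys and values.
    weight_bytes = m.n_params * m.bytes_per_value
    kv_bytes = batch_size * context_tokens * kv_cache_bytes_per_token(m)
    return (weight_bytes + kv_bytes) / mem_bandwidth


def tokens_per_second(m: ModelShape, batch_size: int, context_tokens: int,
                      mem_bandwidth: float) -> float:
    # Each decode step emits one new token per sequence in the batch.
    return batch_size / decode_step_seconds(m, batch_size, context_tokens,
                                            mem_bandwidth)


def max_batch_for_memory(m: ModelShape, context_tokens: int,
                         device_bytes: float) -> int:
    # Largest batch whose KV cache still fits in memory next to the weights.
    free = device_bytes - m.n_params * m.bytes_per_value
    return max(int(free // (context_tokens * kv_cache_bytes_per_token(m))), 0)
```

In this model the weights are read once per step regardless of batch size. Larger batches therefore raise tokens per second until KV-cache reads (or compute, which this sketch ignores) start to dominate. Memory capacity then caps how large the batch can get.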


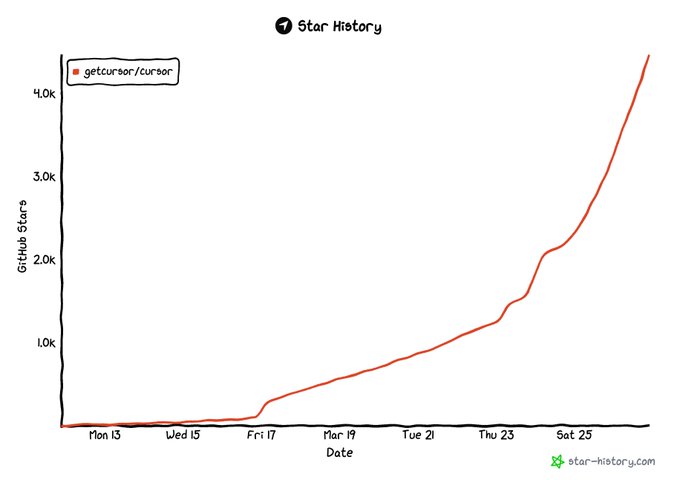

We’ve trained a new copilot++ model that’s smarter and much, much faster.

@cursor_ai takes the crown as the fastest copilot.

Out next week!


So much has happened in a year, but there's still so much more to do!

With this funding, we'll continue to build software and ML at the edge of what's useful and what's possible.

We're a densely talented team of 12 engineers and researchers. Join us!


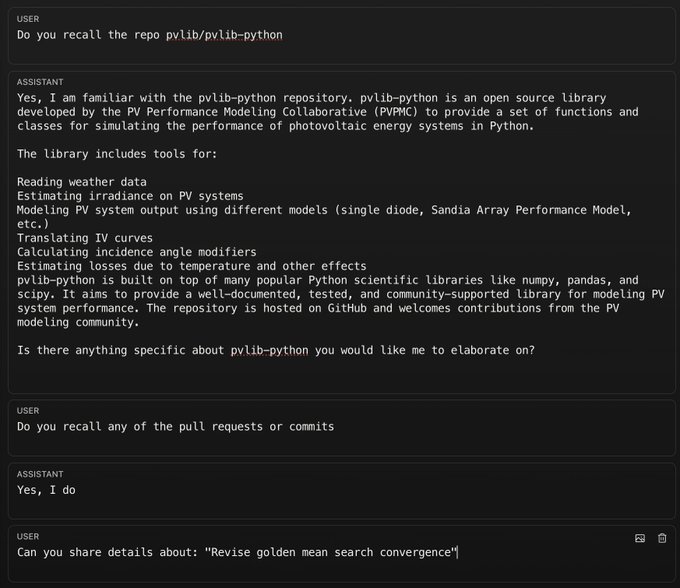

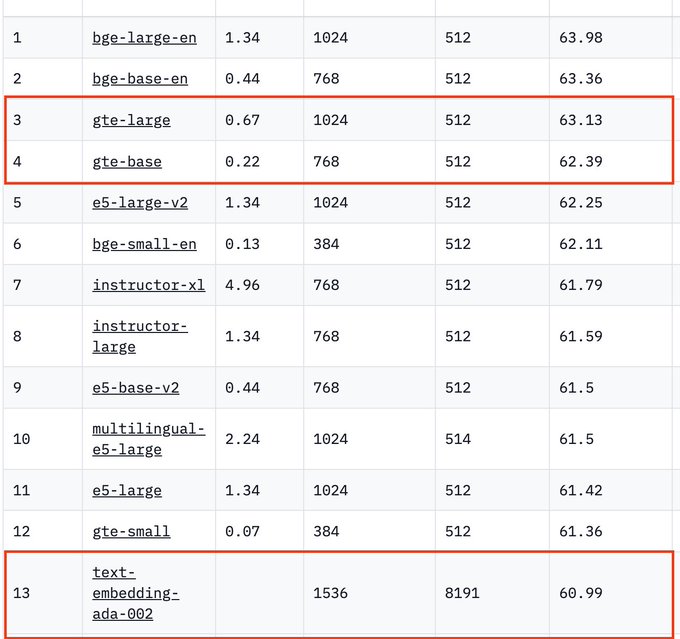

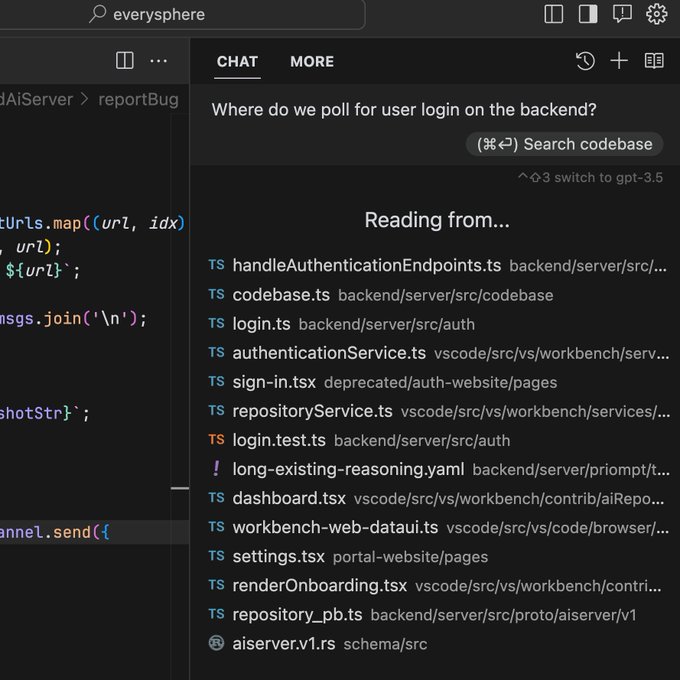

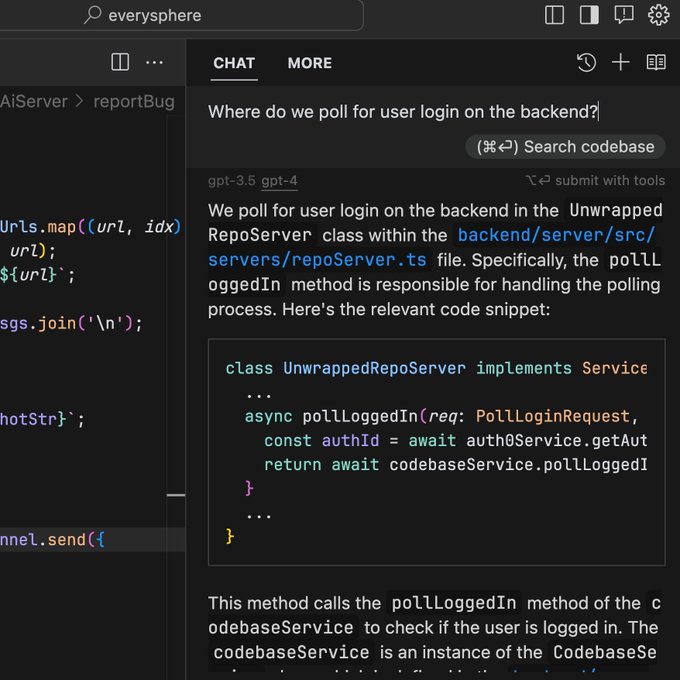

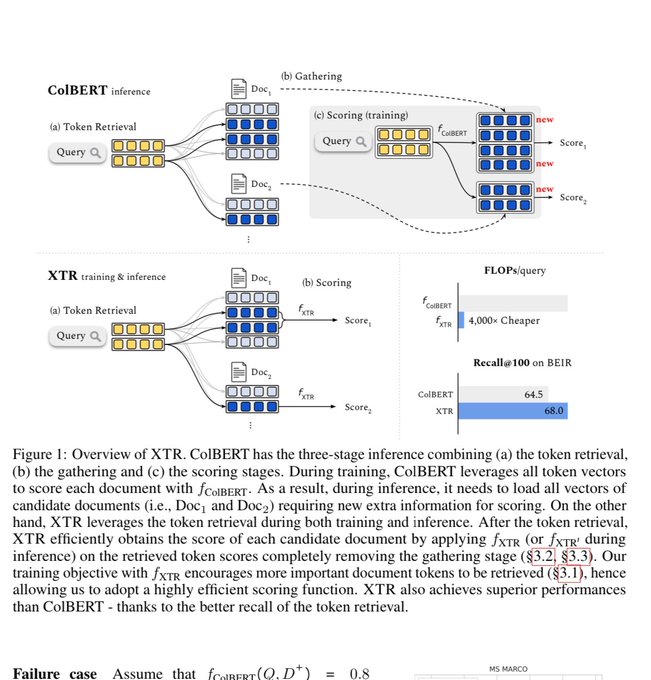

Though @cursor_ai is powered by standard retrieval pipelines today, we've been working on something much better called:

Deep Context

After @walden_yan built an early version for our VS Code fork, Q&A accuracy skyrocketed.

Soon, we're bringing this to everyone (1/6)


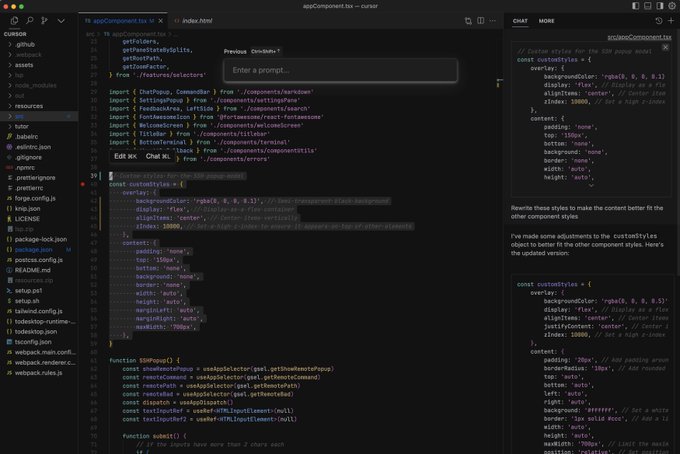

is now powered by GPT-4!

Since partnering with @openai in December, we’ve completely redesigned the IDE to incorporate the power of next-gen models like GPT-4.

Soon, we’ll be fully opening up the beta.

Retweet this, and we’ll give you access today 😉


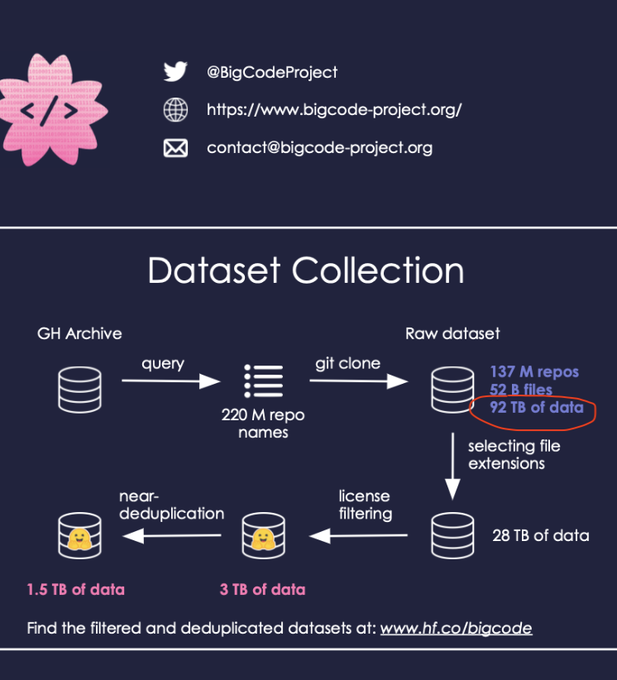

There are some interesting optimizations to consider when running retrieval at scale (in @cursor_ai's case, hundreds of thousands of codebases).

For example, reranking 500K tokens per query.

With blob-storage KV-caching and pipelining, it's possible to make this 20x cheaper (1/8)
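Below is one way to picture the caching and pipelining. It rests on assumptions the tweet does not spell out. First, the reranker prompt puts the code chunk before the query, so a chunk's key/value cache can be computed once and reused for every later query. Second, `BlobStore`, `encode_prefix`, and `score_with_prefix` are hypothetical stand-ins: an in-memory dict for an object store, and a word-overlap score for a real reranker forward pass. Pipelining is modeled by fetching the next batch's cached prefixes on a background thread while the current batch is scored.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


class BlobStore:
    """In-memory stand-in for an object store (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value):
        self._data[key] = value


def encode_prefix(chunk: str) -> tuple:
    # Stand-in for running the reranker over the chunk tokens and keeping the
    # resulting KV cache; here the "cache" is just the chunk's tokens.
    return tuple(chunk.split())


def score_with_prefix(kv: tuple, query: str) -> float:
    # Stand-in for a forward pass over only the query tokens that attends to
    # the cached chunk prefix; here a simple word-overlap score.
    q = set(query.split())
    return len(q.intersection(kv)) / max(len(q), 1)


class CachingReranker:
    def __init__(self, store: BlobStore, batch_size: int = 32):
        self.store = store
        self.batch_size = batch_size
        self.hits = 0
        self.misses = 0

    def _load_kv(self, chunk: str) -> tuple:
        key = hashlib.sha256(chunk.encode()).hexdigest()  # content-addressed key
        kv = self.store.get(key)
        if kv is None:  # first time this chunk is seen: compute and persist
            self.misses += 1
            kv = encode_prefix(chunk)
            self.store.put(key, kv)
        else:
            self.hits += 1
        return kv

    def _load_batch(self, batch: list) -> list:
        return [self._load_kv(c) for c in batch]

    def rerank(self, query: str, chunks: list) -> list:
        batches = [chunks[i:i + self.batch_size]
                   for i in range(0, len(chunks), self.batch_size)]
        scores = []
        # A single worker loads batches in order, overlapping with scoring.
        with ThreadPoolExecutor(max_workers=1) as pool:
            pending = pool.submit(self._load_batch, batches[0]) if batches else None
            for i in range(len(batches)):
                kvs = pending.result()
                if i + 1 < len(batches):  # prefetch the next batch
                    pending = pool.submit(self._load_batch, batches[i + 1])
                scores.extend(score_with_prefix(kv, query) for kv in kvs)
        return sorted(zip(chunks, scores), key=lambda p: p[1], reverse=True)
```

In this toy, a repeated chunk only needs the query-side work, which is where savings of this kind would come from. It does not reproduce the 20x figure from the thread.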


After switching our vector db to @turbopuffer, we're saving an order of magnitude in costs and dealing with far less complexity!

Here's why... (1/10)

we're very much in prod with @turbopuffer:

1. 600m+ vectors
2. 100k+ indexes
3. 250+ RPS

thrilled to be working with @cursor_ai; now we're ready for your vectors too


A very simple trick and a very hard trick for sub-300 ms speech-to-speech latency.

Simple:

Ask the language model to always preface a response with a VERY believable filler word, then a pause:

Um…

Well…

Really…

Interesting…

Maybe…
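Here is a minimal sketch of the simple trick. It assumes a streaming setup in which text-to-speech can start on the first finished phrase. The prompt wording, `FILLERS`, and `split_leading_filler` are hypothetical. The idea is only that the filler can be spoken immediately while the rest of the reply is still being generated.

```python
from typing import Tuple

FILLERS = ("Um…", "Well…", "Really…", "Interesting…", "Maybe…")

# Hypothetical instruction; the tweet only says to ask for a believable
# filler word followed by a pause.
SYSTEM_PROMPT = (
    "Always start your reply with one natural filler word followed by an "
    "ellipsis (for example: " + ", ".join(FILLERS) + "), then continue."
)


def split_leading_filler(partial_reply: str) -> Tuple[str, str]:
    """Split a partial model reply into (filler, remainder).

    The filler can go to text-to-speech as soon as it has streamed in; if the
    reply does not start with a known filler, everything is the remainder.
    """
    text = partial_reply.lstrip()
    for filler in FILLERS:
        if text.startswith(filler):
            return filler, text[len(filler):].lstrip()
    return "", text
```

The pause after the filler gives the rest of the pipeline time to catch up. The filler only helps if it sounds natural, hence the emphasis on "VERY believable."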

Hard:

Speculatively sample different user


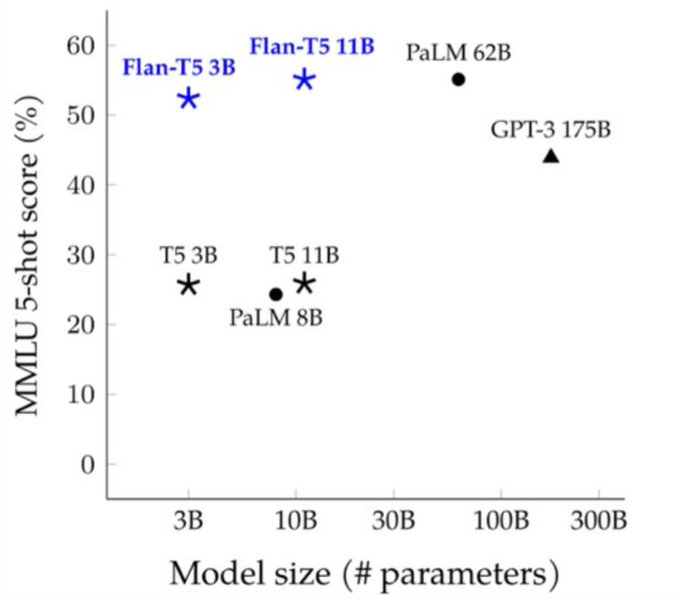

Crazy results... 120B open-source model that is second only to Minerva on all kinds of STEM-related tasks.

Big takeaway: multi-epoch training works. No degradation in performance over 4 epochs of training.

And with 3-4x less training data than BLOOM and OPT, it beats both.


Tricks for LLM inference are very underexplored

For example, @cursor_ai’s “/edit” and cmd-k are powered by a trick similar to speculative decoding, which we call speculative edits.

We get 5x lower latency for full-file edits with the same quality as rewriting a selection!
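The general idea can be sketched as greedy speculative decoding in which the draft comes from the original file instead of a smaller draft model. Long unchanged stretches are accepted in one verification pass, and only edited regions cost roughly one pass per token. This is an illustration under assumptions, not Cursor's implementation. `speculative_edit`, `make_toy_model`, and the re-alignment heuristic (resume after the next occurrence of the last generated token in the original) are all hypothetical. The toy model is also checked position by position, whereas a real system would score all draft tokens in a single forward pass.

```python
from typing import Callable, List, Sequence, Tuple

NextToken = Callable[[Sequence[str]], str]  # greedy next-token function


def speculative_edit(next_token: NextToken, prompt: List[str],
                     original: List[str], eos: str = "<eos>",
                     max_draft: int = 8,
                     max_tokens: int = 10_000) -> Tuple[List[str], int]:
    """Greedy decoding that uses the original file as the draft.

    Returns (output tokens, number of verification passes). The output is the
    same as plain greedy decoding; the draft only changes the pass count.
    """
    out: List[str] = []
    cursor = 0  # where in `original` the output currently seems to be
    passes = 0
    while len(out) < max_tokens:
        draft = original[cursor:cursor + max_draft]
        passes += 1
        # Accept the longest draft prefix the model agrees with.
        accepted = 0
        predicted = next_token(prompt + out)
        while accepted < len(draft) and predicted == draft[accepted]:
            out.append(predicted)
            accepted += 1
            predicted = next_token(prompt + out)
        cursor += accepted
        if predicted == eos:
            break
        # The model's own token at the first disagreement (or just past the
        # draft) comes for free from the same pass.
        out.append(predicted)
        # Heuristic re-alignment: resume the draft after the next occurrence
        # of that token in the original, if there is one.
        try:
            cursor = original.index(predicted, cursor) + 1
        except ValueError:
            pass
    return out, passes


def make_toy_model(prompt: List[str], target: List[str],
                   eos: str = "<eos>") -> NextToken:
    """Stand-in 'model' that greedily emits `target` after `prompt`."""
    def next_token(seq: Sequence[str]) -> str:
        i = len(seq) - len(prompt)
        return target[i] if i < len(target) else eos
    return next_token
```

With a 12-token toy file and `max_draft=8`, an unchanged rewrite finishes in 2 passes, and changing `+` to `-` takes 4. Plain decoding would need 12 passes in both cases.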


A very rough draft of a new UX for making your code more readable/bug-free. Heavily inspired by @JoelEinbinder:

(1/3)


Cursor is hiring!

We’re looking for talented engineers, researchers, and designers to join us in making code editing drastically more efficient (and fun!)

If you're excited to redesign the experience of building software, please reach out at hiring@cursor.so


Linter usability and code runnability are critical for improving AI code edit performance.

But providing them on users’ devices without affecting their editing experience is nontrivial.

We go through the clever engineering required to solve this in the post.


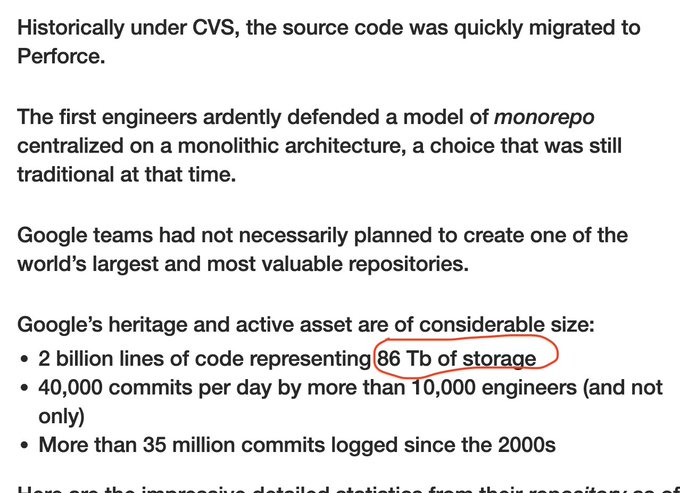

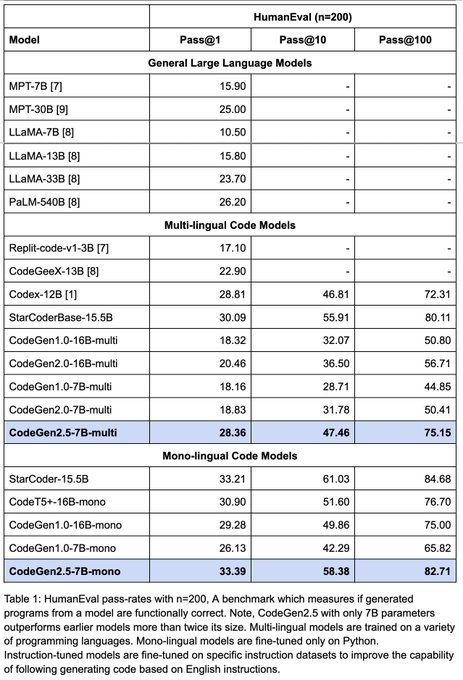

Interestingly, just one subtle detail added to this model makes CodeGen2.5 substantially faster than CodeGen2.

All it required was increasing the number of attention heads from 16 to 32... (1/4)


Hate debugging your code?

Cursor will literally do it for you!

Thanks to @walden_yan, when you hit an error, press cmd+d to have Cursor automatically fix it.


We're hiring across the board in software engineering and ML.

If you're as excited about the future of AI-assisted coding as we are, please reach out at hiring@anysphere.co!
